Spark (PySpark) DataFrameWriter class provides functions to save data into data file systems and tables in a data catalog (for example Hive). We can use save or saveAsTable (Spark - Save DataFrame to Hive Table) methods to do that. There is also one function named insertInto that can be used to insert the content of the DataFrame into the specified table. The schema of the DataFrame needs to match with the table schema. This article shows you how to insert records into Hive transactional and non-transactional tables.

Prerequisites

If you don't have a Hive instance to work on, you can install one by following these articles:

Insert into Hive tables

The following code snippet insert two new records into a table named test_db.test_table in Hive.

from pyspark.sql import SparkSession

app_name = "PySpark Insert Into Hive Tables"

master = "local"

spark = SparkSession.builder \

.appName(app_name) \

.master(master) \

.enableHiveSupport() \

.getOrCreate()

spark.sparkContext.setLogLevel("WARN")

# Create a DataFrame

df = spark.sql("select * from test_db.test_table")

df.show()

# Create a new DataFrame

new_data = [[4, 'd'], [5, 'e']]

df_new = spark.createDataFrame(new_data, ['id', 'attr'])

df_new.show()

# Insert into Hive table

df_new.write.insertInto('test_db.test_table', overwrite=False)

Output:

+---+----+

| id|attr|

+---+----+

| 1| a|

| 2| b|

| 3| c|

+---+----+

+---+----+

| id|attr|

+---+----+

| 4| d|

| 5| e|

+---+----+

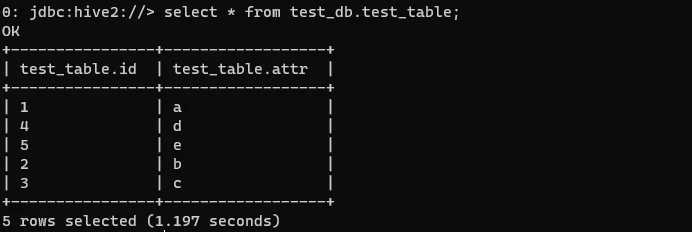

Method insertInto is directly used. Once the script is executed, the table will have two new records in Hive:

Insert into Hive transactional tables

In article Hive ACID Inserts, Updates and Deletes with ORC, I talks about how Hive transaction tables work with the support of Apache Orc file format.

Unfortunately transactional Hive tables are not supported in Spark right now. [SPARK-15348] Hive ACID - ASF JIRA.