Issue

After installing Hadoop 3.0.0 on Windows by following Install Hadoop 3.0.0 in Windows (Single Node), I formatted the name node several times and then ran into an error.

The following error is thrown when I try to start Hadoop HDFS.

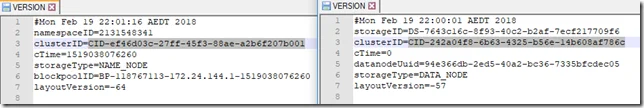

2018-02-19 22:02:06,848 WARN common.Storage: Failed to add storage directory [DISK]file:/F:/DataAnalytics/dfs/data
java.io.IOException: Incompatible clusterIDs in F:\DataAnalytics\dfs\data: namenode clusterID = CID-ef46d03c-27ff-45f3-88ae-a2b6f207b001; datanode clusterID = CID-242a04f8-6b63-4325-b56e-14b608af786c
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:722)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadStorageDirectory(DataStorage.java:286)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadDataStorage(DataStorage.java:399)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:379)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:544)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1690)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1650)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:376)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:280)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:816)
    at java.lang.Thread.run(Thread.java:748)
2018-02-19 22:02:06,851 ERROR datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid 94e366db-2ed5-40a2-bc36-7335bfcdec05) service to /0.0.0.0:19000. Exiting.
java.io.IOException: All specified directories have failed to load.

Root cause

This issue occurs because the clusterID values in the VERSION files of the Hadoop name node and data node do not match.

The root cause is that I formatted the name node with the following command without first deleting the files in the data node directory:

hadoop namenode -format
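To confirm the mismatch before changing anything, the two VERSION files can be compared directly. The snippet below is only a minimal sketch: it assumes each storage directory contains a `current/VERSION` file in `key=value` form with a `clusterID` entry, and the helper names `read_cluster_id` and `cluster_ids_match` are made up for this example.

```python
from pathlib import Path
from typing import Optional


def read_cluster_id(storage_dir) -> Optional[str]:
    """Return the clusterID stored in <storage_dir>/current/VERSION, or None if not found."""
    version_file = Path(storage_dir) / "current" / "VERSION"
    if not version_file.is_file():
        return None
    for line in version_file.read_text().splitlines():
        # VERSION is a properties-style file: comment lines start with '#',
        # everything else is key=value.
        key, sep, value = line.partition("=")
        if sep and key.strip() == "clusterID":
            return value.strip()
    return None


def cluster_ids_match(name_dir, data_dir) -> bool:
    """True only if both directories have a clusterID and the two values are equal."""
    name_id = read_cluster_id(name_dir)
    data_id = read_cluster_id(data_dir)
    return name_id is not None and name_id == data_id
```

For the setup in this post, the data node directory is `F:/DataAnalytics/dfs/data` (taken from the log); the name node directory is whatever your own configuration points to. If `cluster_ids_match(<name node dir>, "F:/DataAnalytics/dfs/data")` returns `False`, you are most likely hitting the same problem.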

Resolution

Before formatting the name node, make sure the files under the **<dfs.data.dir>/** directories (the property is named dfs.datanode.data.dir in current Hadoop releases) are deleted on all data nodes.
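If you reformat often on a test machine, the cleanup can be scripted. The following is a rough sketch rather than an official procedure: `clear_data_dir` is a hypothetical helper, it defaults to a dry run that only lists what would be removed, and it assumes HDFS is stopped and that you really do want to discard every block stored on that data node.

```python
import shutil
from pathlib import Path


def clear_data_dir(data_dir, dry_run=True):
    """Remove everything inside data_dir (but keep data_dir itself).

    Returns the list of entries that were (or, in a dry run, would be) removed.
    """
    root = Path(data_dir)
    removed = []
    if not root.is_dir():
        return removed
    for entry in sorted(root.iterdir()):
        removed.append(entry)
        if dry_run:
            continue  # only report, do not touch the disk
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)
        else:
            entry.unlink()
    return removed
```

With the directory from the log above, `clear_data_dir("F:/DataAnalytics/dfs/data")` lists the entries first; pass `dry_run=False` once the list looks right, then format the name node and start HDFS again.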