In one of my previous post, I showed how to configure Apache Hive 3.0.0 in Windows 10.

Apache Hive 3.0.0 Installation on Windows 10 Step by Step Guide

I didn’t configure Hive metastore thus by default Hive will use embedded mode for metastore. The metadata is stored in Apache Derby database.

However only one user can be active at a time thus won’t be suitable for scenarios that require multiple sessions. In this post, I’m going to show you how to configure a SQL Server database as a remote metastore for HiveServer2.

Prerequisites

Please configure Hive 3.0.0 first by following the above post.

Create a metastore database in SQL Server

Run the following commands to create a database and also login and user for Hive:

create database hive_metastore; go create login hive with password='hive'; go use hive_metastore go create user hive for login hive; go alter role db_owner add member hive; go

The above script will also add user hive into role db_owner.

Download SQL Server JDBC driver

The binary doesn’t include JDBC driver for SQL Server. Thus we need to install it ourselves.

Download SQL Server JDBC driver from Microsoft website:

Copy the suitable JAR file (mssql-jdbc-*.jre8.jar) into $HIVE_HOME/lib folder.

For Ubuntu or other Linux users, use the following command to download:

wget https://github.com/microsoft/mssql-jdbc/releases/download/v7.2.2/mssql-jdbc-7.2.2.jre8.jarcp mssql-jdbc-7.2.2.jre8.jar $HIVE_HOME/lib

* Replace the URL to your own download URL.

*auth folder is only required if you want to use Windows Authentication in Windows systems.

Configure Hive file hive-site.xml

Edit hive-default.xml file (or create one if not existing) in folder $HIVE_HOME/conf.

* You can create the configuration file by using template file hive-default.xml.template.

cp $HIVE_HOME/conf/hive-default.xml.template $HIVE_HOME/conf/hive-site.xml

The above command needs to run in Cygwin terminal.

We need configure the following properties accordingly.

- javax.jdo.option.ConnectionDriverName

- javax.jdo.option.ConnectionURL

- javax.jdo.option.ConnectionUserName

- javax.jdo.option.ConnectionPassword

- hive.metastore.uris

- hive.metastore.db.type

For my environment, they are configured as the following:

<property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.microsoft.sqlserver.jdbc.SQLServerDriver</value> <description>Driver class name for a JDBC metastore</description> </property><property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:sqlserver://localhost:1433;DatabaseName=hive_metastore;</value> <description> JDBC connect string for a JDBC metastore. To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL. For example, jdbc:postgresql://myhost/db?ssl=true for postgres database. </description> </property><property> <name>javax.jdo.option.ConnectionUserName</name> <value>hive</value> <description>Username to use against metastore database</description> </property><property> <name>javax.jdo.option.ConnectionPassword</name> <value>hive</value> <description>password to use against metastore database</description> </property><property> <name>hive.metastore.uris</name> <value>thrift://127.0.0.1:9083</value> <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description> </property><property> <name>hive.metastore.db.type</name> <value>mssql</value> <description> Expects one of [derby, oracle, mysql, mssql, postgres]. Type of database used by the metastore. Information schema & JDBCStorageHandler depend on it. </description> </property>

Initialize database structure

Run the following command in Cygwin:

$HIVE_HOME/bin/schematool -dbType mssql -initSchema

If your code runs successfully, the following output will be generated:

Metastore connection URL: jdbc:sqlserver://localhost:1433;DatabaseName=hive_metastore; Metastore Connection Driver : com.microsoft.sqlserver.jdbc.SQLServerDriver Metastore connection User: hive Starting metastore schema initialization to 3.0.0 Initialization script hive-schema-3.0.0.mssql.sqlInitialization script completed schemaTool completed

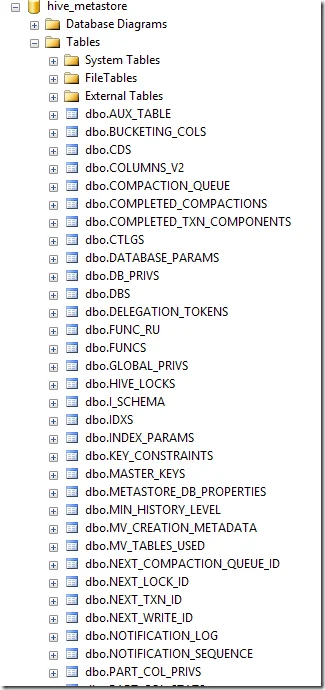

You can also verify the schema in database:

Start Hive metastore service

$HIVE_HOME/bin/hive --service metastore

And then you can use either beeline or hive CLI to interact with Hive databases:

$HIVE_HOME/bin/beeline -u jdbc:hive2://

Start HiveServer2 service

$HIVE_HOME/bin/hive --service hiveserver2 start

Have fun!