Spark emits log messages from all of its modules, and at the default level the INFO messages can flood the console. This article shows you how to hide those INFO logs in the console output.

Spark logging level

The log level can be set with the function pyspark.SparkContext.setLogLevel.

The function is defined as follows:

def setLogLevel(self, logLevel):
    """
    Control our logLevel. This overrides any user-defined log settings.
    Valid log levels include: ALL, DEBUG, ERROR, FATAL, INFO, OFF, TRACE, WARN
    """
    self._jsc.setLogLevel(logLevel)
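Because the function accepts only the level names listed in its docstring, it can help to validate a level before passing it on. The following is a minimal sketch of that idea; `VALID_LOG_LEVELS` and `normalize_log_level` are hypothetical names used for illustration and are not part of PySpark.

```python
# Level names taken from the setLogLevel docstring.
VALID_LOG_LEVELS = frozenset(
    {"ALL", "DEBUG", "ERROR", "FATAL", "INFO", "OFF", "TRACE", "WARN"}
)


def normalize_log_level(level: str) -> str:
    """Return the upper-cased level name, or raise ValueError if it is not valid.

    Hypothetical helper for illustration; it is not part of the PySpark API.
    """
    candidate = level.strip().upper()
    if candidate not in VALID_LOG_LEVELS:
        raise ValueError(
            f"Unsupported log level {level!r}; "
            f"expected one of {sorted(VALID_LOG_LEVELS)}"
        )
    return candidate
```

With an existing session, the result could be passed straight through, for example `spark.sparkContext.setLogLevel(normalize_log_level("warn"))`.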

Set log level to WARN

The following code sets the log level to WARN.

from pyspark.sql import SparkSession

appName = "Spark - Setting Log Level"
master = "local"

# Create Spark session
spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .getOrCreate()

# From this point on, only WARN and more severe messages are logged
spark.sparkContext.setLogLevel("WARN")

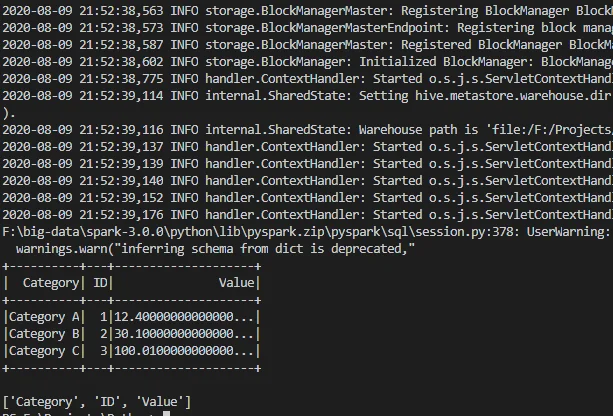

When the script runs some actions, the console still prints the INFO logs that are emitted before the setLogLevel function is called.

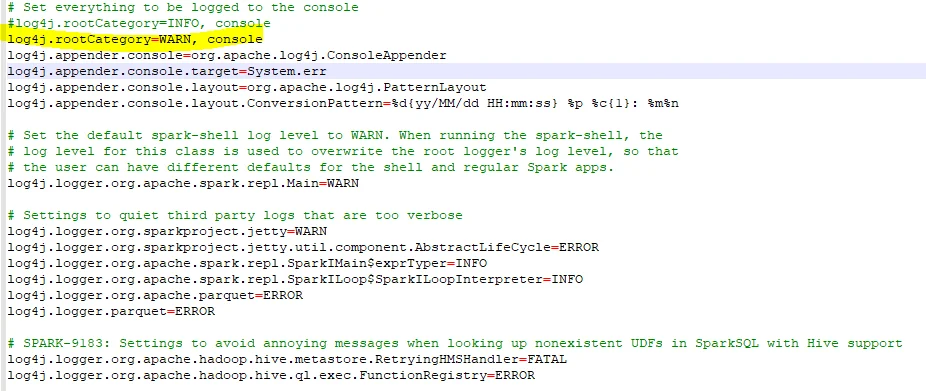

Change Spark logging config file

Follow these steps to configure logging at the system level (this requires access to the Spark conf folder):

- Navigate to the Spark home folder.

- Go to the conf subfolder, which holds all configuration files.

- Create the file log4j.properties from the template file log4j.properties.template.

- Edit log4j.properties to change the default logging level to WARN:

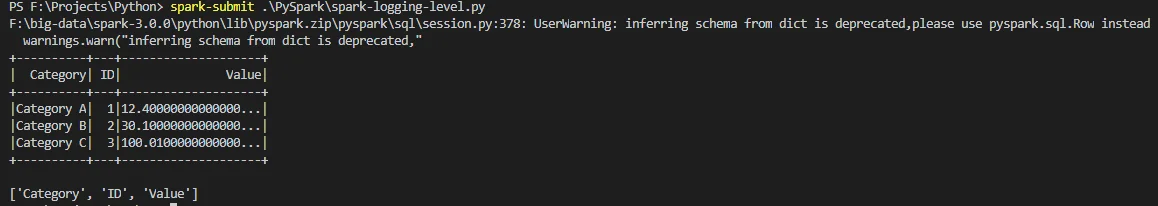
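The steps above can also be scripted. The sketch below is one possible approach in Python, assuming the template contains a `log4j.rootCategory=INFO, console` line, as the Log4j 1.x template shipped with older Spark releases does. `set_root_level` and `create_log4j_properties` are hypothetical helper names, not part of Spark.

```python
import re
from pathlib import Path


def set_root_level(properties_text: str, level: str = "WARN") -> str:
    """Rewrite the level on the log4j.rootCategory line, keeping the appender list."""
    pattern = re.compile(
        r"^([ \t]*log4j\.rootCategory[ \t]*=[ \t]*)\w+", flags=re.MULTILINE
    )
    # Keep the "log4j.rootCategory=" prefix and swap only the level name.
    return pattern.sub(lambda match: match.group(1) + level, properties_text)


def create_log4j_properties(conf_dir: Path, level: str = "WARN") -> Path:
    """Create log4j.properties in conf_dir from the template, with the given root level."""
    template = conf_dir / "log4j.properties.template"
    target = conf_dir / "log4j.properties"
    target.write_text(set_root_level(template.read_text(), level))
    return target
```

Calling `create_log4j_properties` with the path to the conf folder under the Spark home folder would perform the last two steps in one go. Newer Spark releases that use Log4j 2 ship a log4j2.properties.template with a different syntax, which this sketch does not handle.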

Run the application again; the output is much cleaner, as the following screenshot shows:

For Scala

The system-level Spark configuration above applies to all programming languages supported by Spark, including Scala.

To change the log level programmatically, try the following Scala code:

import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder.getOrCreate()
spark.sparkContext.setLogLevel("WARN")

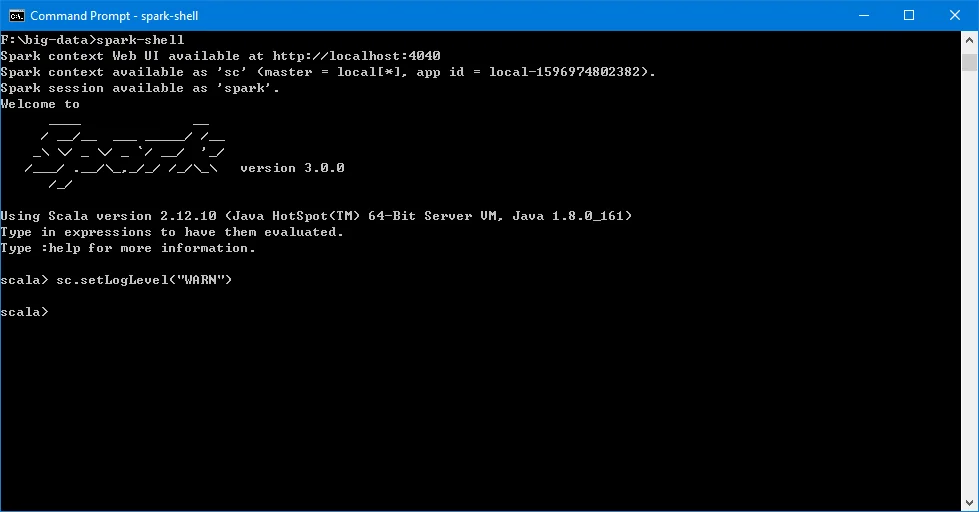

If you use the Spark shell, you can access the SparkContext directly via sc:

sc.setLogLevel("WARN")

Run Spark code

You can easily run Spark code on Windows or UNIX-like (Linux, macOS) systems. Follow these articles to set up your Spark environment if you don't have one yet: