Issue context

When running a PySpark 2.4.8 script in a Python 3.8 environment created with Anaconda, the following error occurs: TypeError: an integer is required (got type bytes).

The environment was created with the following commands:

conda create --name pyspark2.4.8 python=3.8.0
conda activate pyspark2.4.8
pip install pyspark==2.4.8

The PySpark script has the following content:

from pyspark.sql import SparkSession
from pyspark.sql.functions import to_date

appName = "PySpark Example - Spark 2.x Date Example"
master = "local"

# Create Spark session
spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .getOrCreate()

data = [{'id': 1, 'dt': '1200-01-01'}, {'id': 2, 'dt': '2022-06-19'}]
df = spark.createDataFrame(data)
df = df.withColumn('dt', to_date(df['dt']))
print(df.schema)
df.show()

# Write to HDFS
df.write.format('parquet').mode('overwrite').save('/test')

Fix this issue

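The error occurs because PySpark 2.4.x does not support Python 3.8 or later. When pyspark is imported, the copy of cloudpickle bundled with it builds a code object by calling types.CodeType with the Python 3.7 argument order; Python 3.8 changed that constructor's signature, so the call fails with the TypeError above. To fix the issue, use Python 3.7 instead.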
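The signature change behind the error message can be observed directly. Python 3.8 (PEP 570) added a co_posonlyargcount field to code objects and inserted a matching posonlyargcount parameter as the second positional argument of the types.CodeType constructor, so code that passes arguments in the Python 3.7 order puts the bytecode (a bytes object) into the slot for the integer flags argument. The minimal sketch below only reports whether the running interpreter has the new field; the helper name is hypothetical and the code does not touch PySpark.

```python
import sys


def example() -> None:
    """A throwaway function; only its code object is inspected."""


def code_objects_have_posonlyargcount() -> bool:
    # Python 3.8 (PEP 570) added co_posonlyargcount to code objects and a
    # matching posonlyargcount parameter as the 2nd positional argument of
    # types.CodeType. Code that calls types.CodeType with the 3.7 argument
    # order therefore shifts co_code (bytes) into the integer flags slot.
    return hasattr(example.__code__, "co_posonlyargcount")


if __name__ == "__main__":
    # Expected: False on Python 3.7, True on Python 3.8 and later.
    print(sys.version_info[:2], code_objects_have_posonlyargcount())
```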

For example, if you use Anaconda, the following commands create a Python 3.7 environment and install PySpark 2.4.8 into it:

conda create --name pyspark2.4.8 python=3.7.0
conda activate pyspark2.4.8
pip install pyspark==2.4.8

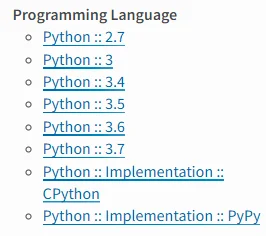
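PySpark 2.4.x raises the TypeError while pyspark itself is being imported, which makes the cause hard to spot. A script that must run on PySpark 2.4.x can check the interpreter version first and fail with a clearer message. A minimal sketch, assuming the Python 3.7 ceiling for PySpark 2.4.x; MAX_PYTHON_FOR_PYSPARK_24, python_supported and require_supported_python are hypothetical names, not part of PySpark:

```python
import sys

# Assumption: PySpark 2.4.x supports Python up to 3.7, as listed in the
# "Programming Language" classifiers on its PyPI page.
MAX_PYTHON_FOR_PYSPARK_24 = (3, 7)  # hypothetical name


def python_supported(version_info=None, max_version=MAX_PYTHON_FOR_PYSPARK_24):
    """Return True if (major, minor) of version_info is <= max_version.

    version_info defaults to the running interpreter's sys.version_info.
    """
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) <= tuple(max_version)


def require_supported_python():
    """Raise a readable error instead of PySpark's TypeError on Python 3.8+."""
    if not python_supported():
        major, minor = sys.version_info[:2]
        raise RuntimeError(
            "PySpark 2.4.x needs Python 3.7 or earlier, "
            "but this interpreter is Python {}.{}".format(major, minor)
        )
```

Calling `require_supported_python()` at the top of the script, before `from pyspark.sql import SparkSession`, replaces the TypeError with a message that names the interpreter version.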

The Python versions supported by each PySpark release are listed on PyPI. For instance, the versions supported by PySpark 2.4.8 are shown on its release page (pyspark · PyPI), under the programming language classifiers in the left sidebar.

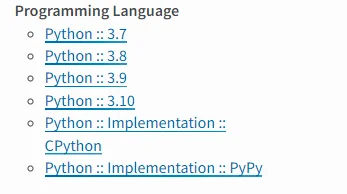
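The same information can be retrieved programmatically: PyPI's JSON API returns a release's metadata, including its Trove classifiers, from https://pypi.org/pypi/<project>/<version>/json. A minimal sketch, assuming network access; fetch_classifiers and python_versions_from_classifiers are hypothetical helper names, and only classifiers of the form "Programming Language :: Python :: X.Y" are kept:

```python
import json
import re
from urllib.request import urlopen

# Matches classifiers such as "Programming Language :: Python :: 3.7";
# broader ones like "... :: Python :: 3" or "... :: Implementation :: CPython"
# are skipped.
_PY_VERSION = re.compile(r"^Programming Language :: Python :: (\d+)\.(\d+)$")


def fetch_classifiers(project, version):
    """Download the Trove classifiers of one release from PyPI's JSON API."""
    url = "https://pypi.org/pypi/{}/{}/json".format(project, version)
    with urlopen(url, timeout=30) as response:
        metadata = json.load(response)
    return metadata["info"]["classifiers"]


def python_versions_from_classifiers(classifiers):
    """Return the 'X.Y' Python versions listed in classifiers, oldest first."""
    versions = set()
    for classifier in classifiers:
        match = _PY_VERSION.match(classifier)
        if match:
            versions.add((int(match.group(1)), int(match.group(2))))
    # Sort numerically so that 3.10 comes after 3.9, not after 3.1.
    return ["{}.{}".format(major, minor) for major, minor in sorted(versions)]
```

For example, `python_versions_from_classifiers(fetch_classifiers("pyspark", "2.4.8"))` should list the same versions as the sidebar of that release's page.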

For PySpark 3.3.0, the list of supported programming languages is updated to the following: