Spark SQL provides spark.read.text('file_path') to read a single text file or a directory of files into a Spark DataFrame. This article shows how to read Apache access log files.

Read options

The following options can be used when reading from log text files.

- wholetext - The default value is false. When it is set to true, Spark reads each file from the input path(s) as a single row.

- lineSep - The default value is \r, \r\n, \n (for reading) and \n (for writing). This option defines the line separator used for reading. It can also be used when writing a DataFrame to text files.

Log files

This article uses Apache access logs in the Combined Log Format as input. The format string is listed below:

%h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"

For more details about Apache HTTP Server logs, refer to Log Files - Apache HTTP Server Version 2.4.

This format will generate logs like the following:

127.0.0.1 - kontext [10/Jun/2022:13:55:36 +1000] "GET /logo.png HTTP/1.0" 200 2326 "http://app.kontext.tech/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"
127.0.0.1 - kontext [10/Jun/2022:14:55:36 +1000] "GET /index HTTP/1.0" 200 2326 "http://app.kontext.tech/column" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36 Edg/103.0.1264.49"
127.0.0.1 - kontext [10/Jun/2022:15:55:36 +1000] "GET /forum HTTP/1.0" 200 2326 "http://app.kontext.tech/column" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36 Edg/103.0.1264.49"

These logs can be parsed in Python with regular expressions. Save the lines above to a local file named apachelogs.txt; it is used later in this article.
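To give a rough idea of what a hand-written regular expression for this format could look like, here is a sketch that uses only the Python standard library. The pattern, the group names and the parse_line helper are illustrative assumptions, not part of any library, and the pattern does not handle escaped quotes inside quoted fields.

```python
import re
from datetime import datetime

# Illustrative pattern for the log format shown above; group names are made up.
LOG_PATTERN = re.compile(
    r'^(?P<remote_host>\S+) (?P<logname>\S+) (?P<remote_user>\S+) '
    r'\[(?P<request_time>[^\]]+)\] '
    r'"(?P<request_line>[^"]*)" '
    r'(?P<final_status>\d{3}) (?P<bytes_sent>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"$'
)


def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the bracketed timestamp, including its UTC offset.
    fields["request_time"] = datetime.strptime(
        fields["request_time"], "%d/%b/%Y:%H:%M:%S %z")
    fields["final_status"] = int(fields["final_status"])
    # Apache writes "-" for %b when no bytes were sent.
    if fields["bytes_sent"] == "-":
        fields["bytes_sent"] = None
    else:
        fields["bytes_sent"] = int(fields["bytes_sent"])
    return fields


sample = ('127.0.0.1 - kontext [10/Jun/2022:13:55:36 +1000] '
          '"GET /logo.png HTTP/1.0" 200 2326 '
          '"http://app.kontext.tech/start.html" '
          '"Mozilla/4.08 [en] (Win98; I ;Nav)"')
parsed = parse_line(sample)
print(parsed["remote_host"], parsed["final_status"], parsed["bytes_sent"])
```

Maintaining such a pattern by hand gets tedious once the log format changes, which is one reason to prefer a dedicated parsing library.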

Parse log text using regular expressions

To keep things simple, I will use the Python library apachelogs (apachelogs · PyPI) for this. The package can be installed using pip:

pip install apachelogs

We can easily parse the Apache access logs using this package. The following is one example:

from apachelogs import LogParser

log_text = """127.0.0.1 - kontext [10/Jun/2022:13:55:36 +1000] "GET /logo.png HTTP/1.0" 200 2326 "http://app.kontext.tech/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"
"""

log_format = "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\""
parser = LogParser(log_format)
log = parser.parse(log_text)

print('Log text: ')
print(log_text)
print('Parsed log: ')
print(log.remote_host)
print(log.request_time)
print(log.request_line)
print(log.final_status)
print(log.bytes_sent)
print(log.headers_in["User-Agent"])

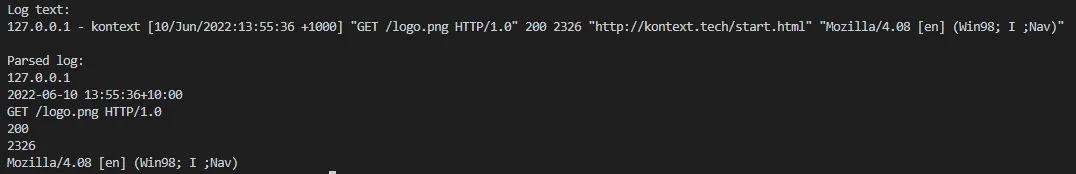

The output looks like the following screenshot:

Now let's do the same with PySpark.

Read Apache access logs in PySpark

The high-level steps to read Apache access logs in PySpark are:

- Read each line of each log file as a row.

- Parse each row based on the log format. In this case, we directly use the previously installed package (apachelogs).

Now, let's create a PySpark script (read-apache-logs.py) with the following content:

from pyspark.sql import SparkSession
from apachelogs import LogParser
from pyspark.sql.functions import udf
from pyspark.sql.types import StructType, StructField, StringType, IntegerType, LongType, TimestampType

appName = "PySpark Example - Read Apache Access Logs"
master = "local"

# Create Spark session
spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .getOrCreate()

spark.sparkContext.setLogLevel("WARN")

# Create DF by reading from log files
df_raw = spark.read.text(
    'file:///home/kontext/pyspark-examples/data/apachelogs.txt')
print(df_raw.schema)
df_raw.show()


# Define UDF to parse it.
def read_log(log_text):
    """
    Function to parse log
    """
    log_format = "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\""
    parser = LogParser(log_format)
    log = parser.parse(log_text)
    return (log.remote_host,
            log.request_time,
            log.request_line,
            log.final_status,
            log.bytes_sent,
            log.headers_in["User-Agent"])


# Schema
log_schema = StructType([
    StructField("remote_host", StringType(), False),
    StructField("request_time", TimestampType(), False),
    StructField("request_line", StringType(), False),
    StructField("final_status", IntegerType(), False),
    StructField("bytes_sent", LongType(), False),
    StructField("user_agent", StringType(), False)
])

# Define a UDF
udf_read_log = udf(read_log, log_schema)

# Now use UDF to transform Spark DataFrame
df = df_raw.withColumn('log', udf_read_log(df_raw['value']))
df = df.withColumnRenamed('value', 'raw_log')
df = df.select("raw_log", 'log.*')
print(df.schema)
df.show()

In the script, we define a Python UDF to parse the log. The user-defined function parses each raw log line into a StructType column named log, and select("raw_log", 'log.*') then flattens that struct into top-level columns.

Run the script using the following command line:

spark-submit read-apache-logs.py

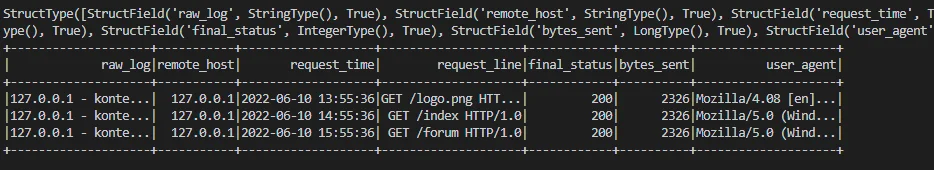

The output looks like the following:

StructType([StructField('value', StringType(), True)])
+--------------------+
|               value|
+--------------------+
|127.0.0.1 - konte...|
|127.0.0.1 - konte...|
|127.0.0.1 - konte...|
+--------------------+
StructType([StructField('raw_log', StringType(), True), StructField('remote_host', StringType(), True), StructField('request_time', TimestampType(), True), StructField('request_line', StringType(), True), StructField('final_status', IntegerType(), True), StructField('bytes_sent', LongType(), True), StructField('user_agent', StringType(), True)])
+--------------------+-----------+-------------------+--------------------+------------+----------+--------------------+
|             raw_log|remote_host|       request_time|        request_line|final_status|bytes_sent|          user_agent|
+--------------------+-----------+-------------------+--------------------+------------+----------+--------------------+
|127.0.0.1 - konte...|  127.0.0.1|2022-06-10 13:55:36|GET /logo.png HTT...|         200|      2326|Mozilla/4.08 [en]...|
|127.0.0.1 - konte...|  127.0.0.1|2022-06-10 14:55:36| GET /index HTTP/1.0|         200|      2326|Mozilla/5.0 (Wind...|
|127.0.0.1 - konte...|  127.0.0.1|2022-06-10 15:55:36| GET /forum HTTP/1.0|         200|      2326|Mozilla/5.0 (Wind...|
+--------------------+-----------+-------------------+--------------------+------------+----------+--------------------+

Please make sure the dependent Python packages (apachelogs in this example) are installed on your Spark cluster.