This tutorial shows you how to use Ollama Python library to connect to Ollama REST APIs to generate SQL statements from text.

About Ollama

Ollama is a fascinating project that allows you to run large language models (LLMs**)** locally on your machine. You can choose from a variety of models within Ollama: library (ollama.com).

Install Ollama

You can download Ollama installer from Ollama website. For Windows users, you may encounter an error - could not connect to ollama app, is it running. Follow the page below to resolve it:

Ollama: Error: could not connect to ollama app, is it running? (app.kontext.tech)

Run a LLM

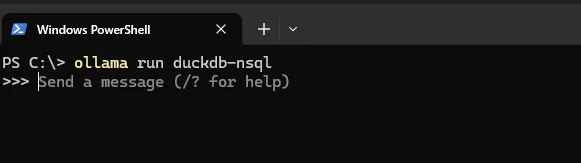

There are a few open source models that can be used to convert text to SQL statements. This tutorial uses model duckdb-nsql.

Run the following command to pull and run the model once Ollama client is installed:

ollama run duckdb-nsql

Please keep in mind that running the larger models (e.g., 7B, 13B, or 33B) requires sufficient RAM—at least 8 GB for 7B models, 16 GB for 13B models, and 32 GB for 33B models.

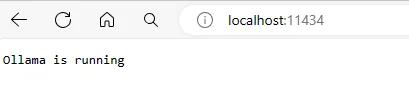

Once the model is downloaded, you should be able to open the following URL in browser:

localhost:11434

The result looks like the following screenshot:

This endpoint will be used in the following sections.

Now let's run some demo in the command line interface:

This endpoint will be used in the following sections.

Now let's run some demo in the command line interface:

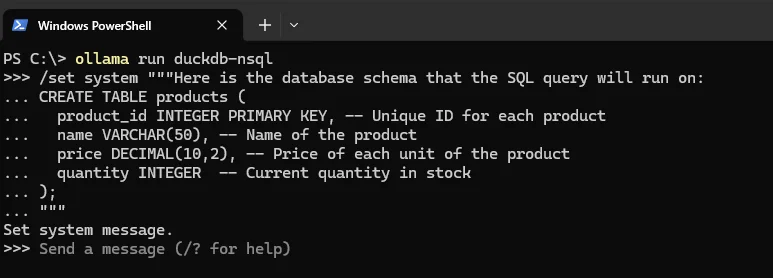

Input the following message:

Input the following message:

/set system """Here is the database schema that the SQL query will run on:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

"""

The above command sets a system message:

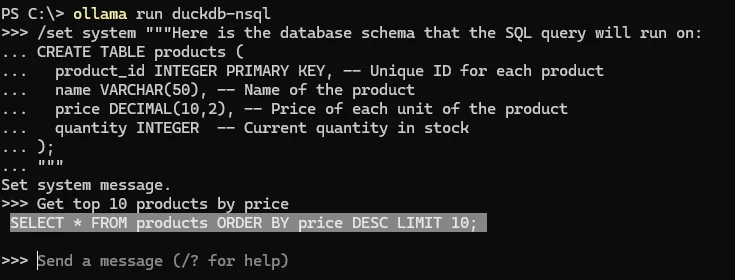

Now input the following message to generate a SQL statement:

Now input the following message to generate a SQL statement:

Get top 10 products by price

The model generates the following output as expected:

SELECT * FROM products ORDER BY price DESC LIMIT 10;

Use Ollama Python library

Now let's implement the same using Python.

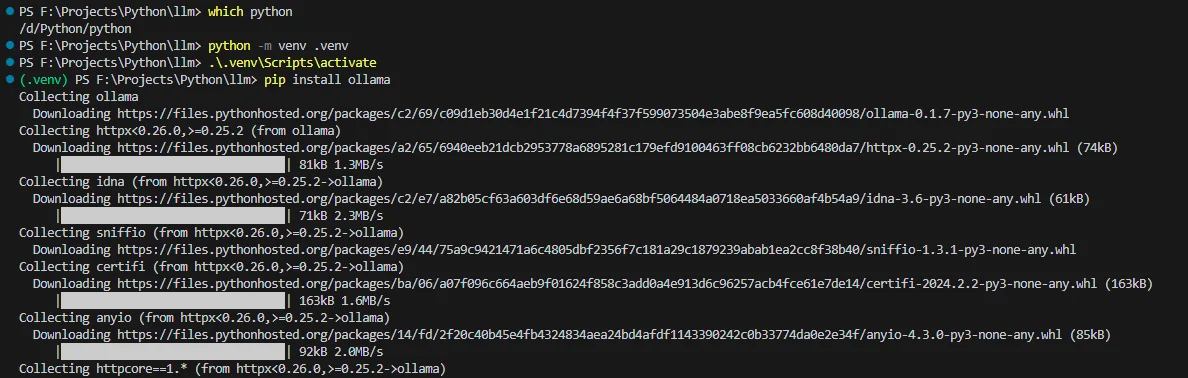

Install Python library

Create a Python project with your favorite tool. Install the package using the following command:

pip install ollama

Use the library

Now we can use the installed library to convert text to SQL. Create a Python script with the following content:

from ollama import Client

endpoint = 'http://localhost:11434'

model = 'duckdb-nsql'

client = Client(host=endpoint)

response = client.chat(model=model, messages=[

{

'role': 'system',

'content': """Here is the database schema that the SQL query will run on:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

""",

},

])

print(response)

response = client.chat(model=model, messages=[

{

'role': 'user',

'content': 'Get top 10 products by price',

},

])

print(response)

# Print out the SQL statement directly.

print(response['message']['content'])

Run the script

Run the Python script, you will get the following out:

{'model': 'duckdb-nsql', 'created_at': '2024-03-24T10:26:12.2886171Z', 'message': {'role': 'assistant', 'content': ' SELECT product_id, name, price, quantity\nFROM products;'}, 'done': True, 'total_duration': 1620373400, 'load_duration': 1149000, 'prompt_eval_count': 109, 'prompt_eval_duration': 617590000, 'eval_count': 15, 'eval_duration': 1000338000}

{'model': 'duckdb-nsql', 'created_at': '2024-03-24T10:26:13.8730534Z', 'message': {'role': 'assistant', 'content': ' SELECT product_name, price FROM products ORDER BY price DESC LIMIT 10;'}, 'done': True, 'total_duration': 1582343200, 'load_duration': 1113500, 'prompt_eval_count': 32, 'prompt_eval_duration': 393627000, 'eval_count': 18, 'eval_duration': 1186633000}

SELECT product_name, price FROM products ORDER BY price DESC LIMIT 10;

The last output is the SQL statement.

Note - the SQL statement generate here is slightly different from the CLI output.

More information

Hopefully you get a gist of how to run open source LLMs locally in your computer to develop applications with Python. Have fun! For more examples, refer to https://github.com/ollama/ollama-python.