This article shows you how to filter NULL/None values from a Spark data frame using Python. Function DataFrame.filter or DataFrame.where can be used to filter out null values. Function filteris alias name for wherefunction.

Code snippet

Let's first construct a data frame with None values in some column.

from pyspark.sql import SparkSession

from decimal import Decimal

appName = "Spark - Filter rows with null values"

master = "local"

# Create Spark session

spark = SparkSession.builder \

.appName(appName) \

.master(master) \

.getOrCreate()

spark.sparkContext.setLogLevel("WARN")

# List

data = [{"Category": 'Category A', "ID": 1, "Value": Decimal(12.40)},

{"Category": 'Category B', "ID": 2, "Value": Decimal(30.10)},

{"Category": 'Category C', "ID": 3, "Value": None},

{"Category": 'Category D', "ID": 4, "Value": Decimal(1.0)},

]

# Create data frame

df = spark.createDataFrame(data)

df.show()

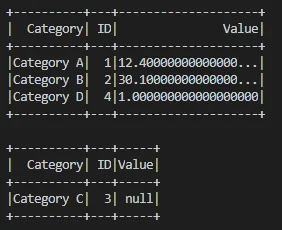

The content of the data frame looks like this:

+----------+---+--------------------+

| Category| ID| Value|

+----------+---+--------------------+

|Category A| 1|12.40000000000000...|

|Category B| 2|30.10000000000000...|

|Category C| 3| null|

|Category D| 4|1.000000000000000000|

+----------+---+--------------------+

Filter using SQL expression

The following code filter columns using SQL:

df.filter("Value is not null").show()

df.where("Value is null").show()

Standard ANSI-SQL expressionsIS NOT NULL and IS NULL are used.

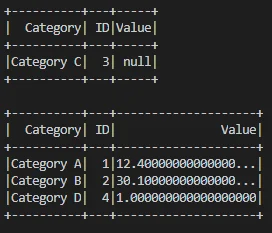

Output:

Filter using column

df.filter(df['Value'].isNull()).show()

df.where(df.Value.isNotNull()).show()

The above code snippet pass in a type.BooleanType Column object to the filteror wherefunction. If there is a boolean column existing in the data frame, you can directly pass it in as condition.

Output:

Run Spark code

You can easily run Spark code on your Windows or UNIX-alike (Linux, MacOS) systems. Follow these articles to setup your Spark environment if you don't have one yet: