A submitted Spark application can run in a Spark standalone cluster, a Mesos cluster, a Hadoop YARN cluster or a Kubernetes cluster. Once a job is deployed and running, we can kill it if required, for example when it has been hanging for a very long time.

This article provides steps to kill Spark jobs submitted to a YARN cluster.

Kill Spark application in YARN

To kill a Spark application running in a YARN cluster, we need to first find out the Spark application ID.

There are several ways to find it.

spark-submit logs

If the application is submitted using the spark-submit command, you can find the application ID in the logs:

    21/03/08 20:55:55 INFO Client: Submitting application application_1615196178979_0001 to ResourceManager
    21/03/08 20:55:55 INFO YarnClientImpl: Submitted application application_1615196178979_0001
    21/03/08 20:55:56 INFO Client: Application report for application_1615196178979_0001 (state: ACCEPTED)
    21/03/08 20:55:56 INFO Client:
         client token: N/A
         diagnostics: [Mon Mar 08 20:55:56 +1100 2021] Scheduler has assigned a container for AM, waiting for AM container to be launched
         ApplicationMaster host: N/A
         ApplicationMaster RPC port: -1
         queue: default
         start time: 1615197355546
         final status: UNDEFINED
         tracking URL: http://***:8088/proxy/application_1615196178979_0001/
         user: fahao
    21/03/08 20:55:57 INFO Client: Application report for application_1615196178979_0001 (state: ACCEPTED)
    21/03/08 20:55:58 INFO Client: Application report for application_1615196178979_0001 (state: ACCEPTED)
    21/03/08 20:55:59 INFO Client: Application report for application_1615196178979_0001 (state: ACCEPTED)
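If the spark-submit output has been saved to a file, the application ID can be pulled out of it with grep rather than read by eye. The following is a minimal sketch: the function name extract_app_id and the file name spark-submit.log are made up for illustration, and it assumes the ID follows the application_<timestamp>_<sequence> pattern seen in the log above.

```shell
# Print the first YARN application ID found in a saved spark-submit log.
# Assumes IDs look like application_<cluster timestamp>_<sequence number>,
# as in the sample log above.
extract_app_id() {
  grep -oE 'application_[0-9]+_[0-9]+' "$1" | head -n 1
}

# Example usage (spark-submit.log is a hypothetical file name; Spark's
# default logging writes to stderr, hence the 2>&1 when capturing it):
#   spark-submit <your options> 2>&1 | tee spark-submit.log
#   extract_app_id spark-submit.log
```

The function prints nothing if the log contains no application ID, so the caller can check for an empty result before going further.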

YARN UI or CLI

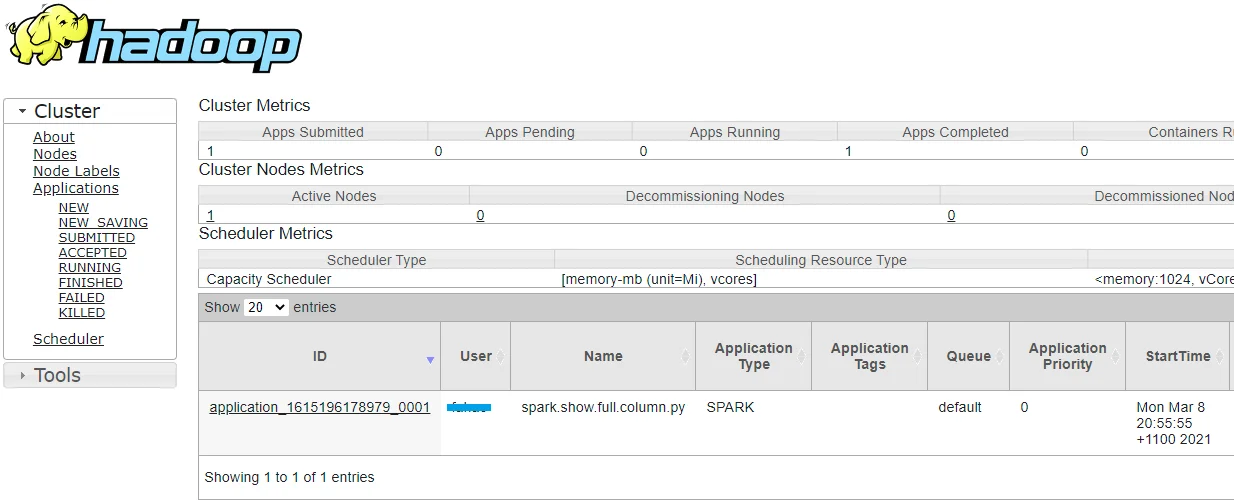

Through YARN web UI, you can find out all the applications:

YARN CLI can also be used to list all the running applications:

yarn application -list
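When many applications are running, it can help to filter the list by application name. The sketch below reads the output of yarn application -list on standard input and prints the IDs of applications with a given name. It assumes the usual layout in which each application is one line of tab-separated, space-padded columns with the ID first and the name second; the function name find_app_ids_by_name and the application name SparkPi are only illustrative.

```shell
# Read `yarn application -list` output on stdin and print the IDs of the
# applications whose name (second column) equals the given name.
# Assumes tab-separated columns padded with spaces: ID first, name second.
find_app_ids_by_name() {
  awk -F'\t' -v name="$1" '
    # Only consider rows whose first column is an application ID;
    # this skips the summary and header lines.
    $1 ~ /^ *application_[0-9]+_[0-9]+ *$/ {
      id = $1; app = $2
      gsub(/^ +| +$/, "", id)    # trim the padding around the ID
      gsub(/^ +| +$/, "", app)   # trim the padding around the name
      if (app == name) print id
    }'
}

# Example usage (SparkPi is a hypothetical application name):
#   yarn application -list -appStates RUNNING | find_app_ids_by_name SparkPi
```

If several applications share the same name, every matching ID is printed, one per line.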

Kill the application

Once we have the application ID, we can kill the application from the command line:

yarn application -kill application_1615196178979_0001

*Replace the application ID with your own.
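The kill step can be wrapped in a small helper that checks the ID format first and then prints the application report so the final state can be confirmed. This is only a sketch under assumptions: the function names is_valid_app_id and kill_spark_app are invented here, and it relies on the yarn application -kill and yarn application -status commands being available on the PATH.

```shell
# Succeed only if the argument looks like a YARN application ID.
is_valid_app_id() {
  printf '%s\n' "$1" | grep -Eq '^application_[0-9]+_[0-9]+$'
}

# Kill the given application, then print its status report.
# Returns 1 without calling yarn if the ID is malformed.
kill_spark_app() {
  if ! is_valid_app_id "$1"; then
    echo "Not a YARN application ID: $1" >&2
    return 1
  fi
  yarn application -kill "$1" && yarn application -status "$1"
}

# Example usage:
#   kill_spark_app application_1615196178979_0001
```

Validating the ID up front avoids sending a mistyped value to the ResourceManager, and the status report shows whether the application actually reached a terminal state.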

The details about listing and killing YARN applications are documented here: List and kill jobs in Shell / Hadoop.

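You can also use the spark-submit command to kill an application through its --kill option, but that option applies only to Spark standalone, Mesos and Kubernetes clusters in cluster deploy mode; for YARN, use the yarn application -kill command shown above.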