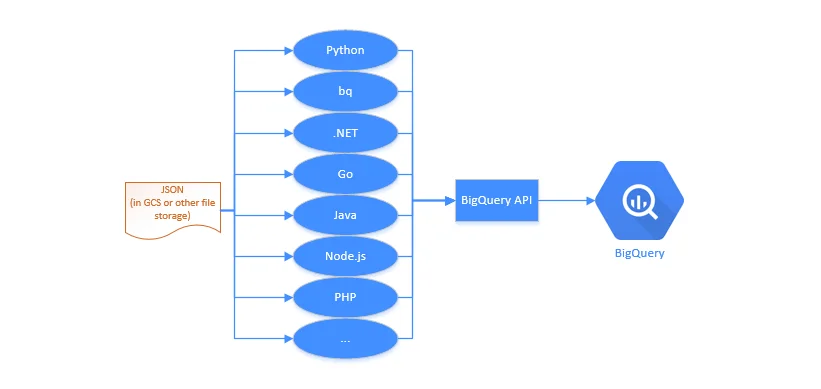

Google Cloud BigQuery provides APIs that can be accessed from all mainstream programming languages. It also provides SDKs and packages that you can use directly in your applications to load a JSON file into BigQuery, regardless of whether the file is stored in Google Cloud Storage or in a temporary location that your program has access to.

Overview

Thanks to the rich packages provided by Google, there are many ways to load a JSON file into BigQuery:

- Python (incl. Pandas)

- bq CLI

- .NET

- Go

- Java

- Node.js

- PHP

- and all other programming languages that can call a REST API.

This article provides high-level steps to load a JSON Lines file from GCS into BigQuery using the Python client.

Info: For simplicity, the Python script used in this article is run in the Cloud Shell (Google Cloud Terminal) environment.

Initialize client credential

Follow these steps to set up the client credential.

Follow the article Quickstart: Using client libraries | BigQuery | Google Cloud to configure a service account and download the client JSON credential file. You don't need to assign the project owner role to the service account. For this tutorial, you only need read access to GCS and read and write access to BigQuery (bigquery.tables.create, bigquery.tables.updateData, bigquery.jobs.create). For simplicity (not best practice), I am adding the BigQuery Admin and Storage Admin roles to my service account.

Upload the client credential file to Cloud Shell.

Run the following command to edit your bash profile:

vi ~/.bashrc

Add the following line to it:

export GOOGLE_APPLICATION_CREDENTIALS="PATH_TO_THE_UPLOADED_CREDENTIAL_FILE.json"

Remember to update the credential file path to your own. This is important because the credential file is used directly by the Python client functions, although we can also specify the credential file path explicitly.

Activate the service account in Cloud Shell so that we can verify the access:

gcloud auth activate-service-account service_account_name@project_name.iam.gserviceaccount.com --key-file PATH_TO_THE_UPLOADED_CREDENTIAL_FILE.json

Remember to replace the service account and key file with your own.

Run the following command to test access to Cloud Storage:

gsutil ls

The command should list all the buckets.

Run the following bq command to test access to BigQuery:

bq ls

Warning: The commands above don't verify all the permissions needed to run this tutorial.
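As a complement to the credential steps above, the following is a minimal sketch of how the key file could be checked and passed to the client explicitly instead of relying on GOOGLE_APPLICATION_CREDENTIALS. The helper names `check_credential_file` and `make_client` are hypothetical, and the sketch assumes a standard service account key file, which contains the `type` and `client_email` fields.

```python
import json


def check_credential_file(path):
    """Return the service account email if the key file looks usable."""
    with open(path, encoding="utf-8") as handle:
        info = json.load(handle)
    if info.get("type") != "service_account":
        raise ValueError(f"{path} is not a service account key file")
    return info["client_email"]


def make_client(path=None):
    """Build a BigQuery client, optionally from an explicit key file path."""
    # Imported lazily so the file check above works without the library installed.
    from google.cloud import bigquery

    if path is None:
        # Uses application default credentials, for example the file
        # referenced by GOOGLE_APPLICATION_CREDENTIALS.
        return bigquery.Client()
    return bigquery.Client.from_service_account_json(path)
```

Calling `make_client("PATH_TO_THE_UPLOADED_CREDENTIAL_FILE.json")` would then give a client bound to that key file, while `make_client()` keeps the environment variable behaviour used in the rest of this article.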

Add a JSON line file into GCS

Let's upload a JSON Lines file to GCS. For more information about the JSON Lines format supported by BigQuery, refer to JSON Lines.

For simplicity, let's just create a file named json_lines.jsonl in a Google Cloud Storage bucket with the following content:

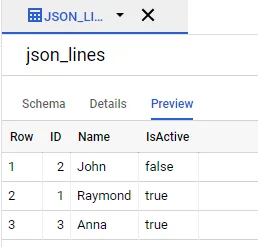

    {"ID": 1, "Name": "Raymond", "IsActive": true}
    {"ID": 2, "Name": "John", "IsActive": false}
    {"ID": 3, "Name": "Anna", "IsActive": true}

Remember to use UNIX line endings (LF) instead of Windows ones (CRLF).
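If you prefer to generate the file rather than type it by hand, a minimal sketch along these lines could produce the same three records with LF line endings. The function name `write_json_lines` is hypothetical; `json.dumps` takes care of the double quotes and lowercase booleans that JSON requires.

```python
import json

rows = [
    {"ID": 1, "Name": "Raymond", "IsActive": True},
    {"ID": 2, "Name": "John", "IsActive": False},
    {"ID": 3, "Name": "Anna", "IsActive": True},
]


def write_json_lines(path, records):
    """Write one JSON object per line, using LF line endings on every platform."""
    # newline="\n" stops Python from translating "\n" into CRLF on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    write_json_lines("json_lines.jsonl", rows)
```

The resulting file could then be copied to your bucket, for example with `gsutil cp json_lines.jsonl gs://YOUR_BUCKET_NAME/`.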

Info: Ensure the bucket and the BigQuery dataset are in the same region.

Create a Python script to load the file

Create a Python script file (gcs-to-bq.py) with the following content in Cloud Shell:

    from google.cloud import bigquery

    # Construct a BigQuery client object.
    client = bigquery.Client()

    table_id = "YOUR_PROJECT_NAME.test.json_lines"

    job_config = bigquery.LoadJobConfig(
        schema=[
            bigquery.SchemaField("ID", "INT64"),
            bigquery.SchemaField("Name", "STRING"),
            bigquery.SchemaField("IsActive", "BOOL"),
        ],
        source_format=bigquery.SourceFormat.NEWLINE_DELIMITED_JSON,
    )

    uri = "gs://YOUR_BUCKET_NAME/json_lines.jsonl"

    load_job = client.load_table_from_uri(
        uri,
        table_id,
        location="australia-southeast1",  # Must match the destination dataset location.
        job_config=job_config,
    )  # Make an API request.

    load_job.result()  # Waits for the job to complete.

    destination_table = client.get_table(table_id)
    print("Loaded {} rows.".format(destination_table.num_rows))

The code snippet is adapted from the official Google Cloud BigQuery documentation with a few changes. Remember to update the following parts:

- Replace the BigQuery destination table name with your own.

- Replace the GCS object URL with your own.

- Replace destination dataset location accordingly.
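Since the three values above are easy to forget, a small pre-flight check could catch leftover placeholders before the load job is submitted. This is only a sketch: the helper name `check_load_settings` is hypothetical, and it assumes the table is given in the fully qualified project.dataset.table form used in the script.

```python
import re

# Matches the YOUR_... placeholders used in the script above.
PLACEHOLDER = re.compile(r"YOUR_[A-Z_]+")


def check_load_settings(table_id, uri, location):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    settings = {"table_id": table_id, "uri": uri, "location": location}
    for name, value in settings.items():
        if not value:
            problems.append(f"{name} is empty")
        elif PLACEHOLDER.search(value):
            problems.append(f"{name} still contains a placeholder: {value}")
    # This sketch expects the fully qualified form project.dataset.table.
    if table_id and table_id.count(".") != 2:
        problems.append("table_id should look like project.dataset.table")
    if uri and not uri.startswith("gs://"):
        problems.append("uri should start with gs://")
    return problems
```

In the script, you could call `check_load_settings(table_id, uri, "australia-southeast1")` before `client.load_table_from_uri` and stop if the returned list is not empty.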

Run the script

You can then run the script in Cloud Shell using the following command:

python gcs-to-bq.py

The output looks like the following:

Loaded 3 rows.

Verify the result in BigQuery

Go to your BigQuery project and you will find that a new table named json_lines has been created.

Resolve one error

When I first ran the script, I encountered the following error:

google.api_core.exceptions.BadRequest: 400 Error while reading data, error message: JSON table encountered too many errors, giving up. Rows: 1; errors: 1. Please look into the errors[] collection for more details.

Looking into the details, the error occurred because my original JSON Lines file was not valid. After I fixed the format issues in the file, the load completed successfully.
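A format problem like this can be caught locally before uploading the file. The following is a minimal sketch, with a hypothetical helper name `find_invalid_json_lines`, that parses each line with Python's standard `json` module and reports the lines that are not valid JSON objects. Blank lines are skipped in this sketch, and a typical failure it would flag is a Python-style record with single-quoted keys.

```python
import json


def find_invalid_json_lines(path):
    """Return (line_number, message) pairs for lines that are not JSON objects."""
    problems = []
    with open(path, encoding="utf-8") as handle:
        for number, line in enumerate(handle, start=1):
            text = line.strip()
            if not text:
                # Blank lines are ignored by this sketch.
                continue
            try:
                value = json.loads(text)
            except json.JSONDecodeError as error:
                problems.append((number, error.msg))
                continue
            if not isinstance(value, dict):
                problems.append((number, "not a JSON object"))
    return problems


if __name__ == "__main__":
    import sys

    # Usage: python check_jsonl.py json_lines.jsonl
    if len(sys.argv) > 1:
        for number, message in find_invalid_json_lines(sys.argv[1]):
            print(f"line {number}: {message}")
```

An empty result only means every line parses as a JSON object; it does not check the records against the table schema.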

References

Loading JSON data from Cloud Storage | BigQuery | Google Cloud