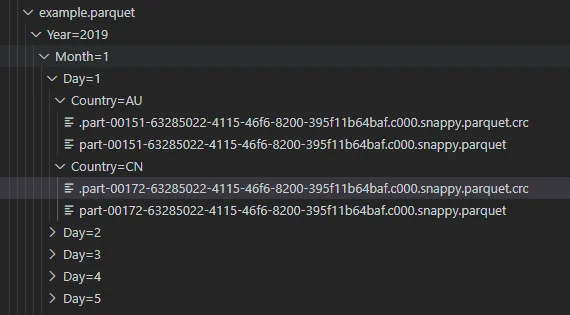

Spark supports partition discovery for reading data that is stored in partitioned directories. For a structure like the one shown in the screenshot, partition metadata is usually stored in a system such as Hive, and Spark uses that metadata to read the data correctly; alternatively, Spark can discover the partition information automatically from the directory layout.
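To make "discovering the partition information" concrete, here is a minimal pure-Python sketch of the idea: each `name=value` folder in a path is treated as a partition column. It is only an illustration, not Spark's actual implementation (Spark also infers data types for the values, which this sketch does not attempt). The function name `parse_partition_path` and the file name `part-0000.parquet` are made up for the example.

```python
def parse_partition_path(path):
    """Return {column: value} for every `name=value` folder found in `path`."""
    partitions = {}
    # Accept both Windows and POSIX separators.
    for segment in path.replace("\\", "/").split("/"):
        name, sep, value = segment.partition("=")
        if sep and name:
            # Values are kept as strings; no type inference is attempted here.
            partitions[name] = value
    return partitions


# Hypothetical file path that follows the Year/Month/Day/Country layout.
example = "data/example.parquet/Year=2019/Month=2/Day=1/Country=AU/part-0000.parquet"
print(parse_partition_path(example))
# {'Year': '2019', 'Month': '2', 'Day': '1', 'Country': 'AU'}
```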

Read parquet files from partitioned directories

In the article Data Partitioning Functions in Spark (PySpark) Deep Dive, I showed how to create a directory structure like the one in the following screenshot, with nested Year, Month, Day and Country partition folders:

To read the data, we can simply use the following script:

from pyspark.sql import SparkSession

appName = "PySpark Parquet Example"
master = "local"

# Create Spark session
spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .getOrCreate()

# Read parquet files (the raw string keeps the Windows backslashes literal)
df = spark.read.parquet(
    r"file:///F:\Projects\Python\PySpark\data\example.parquet\Year=*\Month=2\Day=*\Country=AU")

print(df.schema)
df.show()

When the script is submitted, the following output is printed:

- Schema

StructType(List(StructField(Date,DateType,true),StructField(Amount,IntegerType,true)))

- DataFrame

+----------+------+
|      Date|Amount|
+----------+------+
|2019-02-01|    41|
|2019-02-10|    50|
|2019-02-11|    51|
|2019-02-12|    52|
|2019-02-13|    53|
|2019-02-14|    54|
|2019-02-15|    55|
|2019-02-16|    56|
|2019-02-17|    57|
|2019-02-18|    58|
|2019-02-19|    59|
|2019-02-02|    42|
|2019-02-03|    43|
|2019-02-04|    44|
|2019-02-05|    45|
|2019-02-06|    46|
|2019-02-07|    47|
|2019-02-08|    48|
|2019-02-09|    49|
+----------+------+

The script above reads from the local file system, so no Hive metadata is used (i.e. there is no dependency on Hadoop/Hive). Spark can discover the partitions automatically.
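Note that the schema printed above contains only Date and Amount: because the path points directly at the leaf partition folders, the Year, Month, Day and Country values are not returned as columns. If those columns are needed, one option is the `basePath` data source option, which tells Spark where partition discovery should start. The sketch below assumes an existing SparkSession is passed in as `spark`; the helper name `read_with_partition_columns` and its parameters are illustrative only.

```python
def read_with_partition_columns(spark, base_path, relative_glob):
    """Read selected partition folders while keeping the partition columns.

    spark         -- an existing SparkSession (assumed to be created elsewhere)
    base_path     -- root folder of the partitioned dataset
    relative_glob -- glob, relative to base_path, selecting partition folders
    """
    return (
        spark.read
        # basePath tells Spark where partition discovery should start.
        .option("basePath", base_path)
        .parquet(f"{base_path}/{relative_glob}")
    )
```

With the session from the script above, a call might look like `read_with_partition_columns(spark, "file:///F:/Projects/Python/PySpark/data/example.parquet", "Year=*/Month=2/Day=*/Country=AU")`. The forward-slash form of the path is an assumption about how the same folder would be addressed.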

Read files from nested folder

The example above reads from a directory with partition subfolders. Spark 3.0 introduced the DataFrameReader option ***recursiveFileLookup***, which recursively loads files from nested folders and disables partition inference. It is not enabled by default.

To read all the parquet files in the above structure, we just need to set the option recursiveFileLookup to 'true':

from pyspark.sql import SparkSession

appName = "PySpark Parquet Example"
master = "local"

# Create Spark session
spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .getOrCreate()

# Read parquet files from all nested folders (raw string keeps the backslashes literal)
df = spark.read.option("recursiveFileLookup", "true").parquet(
    r"file:///F:\Projects\Python\PySpark\data2")
print(df.schema)
df.show()

For versions lower than Spark 3.0, you can read each folder or parquet file as a DataFrame and then union them together.
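The read-and-union approach for older versions could be sketched as follows. This is a minimal example, assuming an existing SparkSession is passed in as `spark` and that every folder or file shares the same schema; the helper name `read_and_union` is illustrative, and the list of paths has to be supplied by the caller.

```python
from functools import reduce


def read_and_union(spark, paths):
    """Read each folder or parquet file as a DataFrame and union the results."""
    if not paths:
        raise ValueError("paths must contain at least one folder or file")
    frames = [spark.read.parquet(path) for path in paths]
    # DataFrame.union matches columns by position, so all inputs are
    # assumed to have the same columns in the same order.
    return reduce(lambda left, right: left.union(right), frames)
```

If the column order might differ between folders, `DataFrame.unionByName` (which matches columns by name) is probably the safer choice.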