In this article, I am going to show you how to use JDBC Kerberos authentication to connect to SQL Server sources in Spark (PySpark). I will use Kerberos connection with principal names and password directly that requires Microsoft JDBC Driver 6.2 or above. The sample code can run on Windows, Linux and Mac-OS platforms.

If you want to work with lower version of Microsft JDBC driver, I already explained in details about the different approaches to authenticate with SQL Server database in my previous articles. Refer to the following links for more information:

Connect to SQL Server in Spark (PySpark) - Kontext

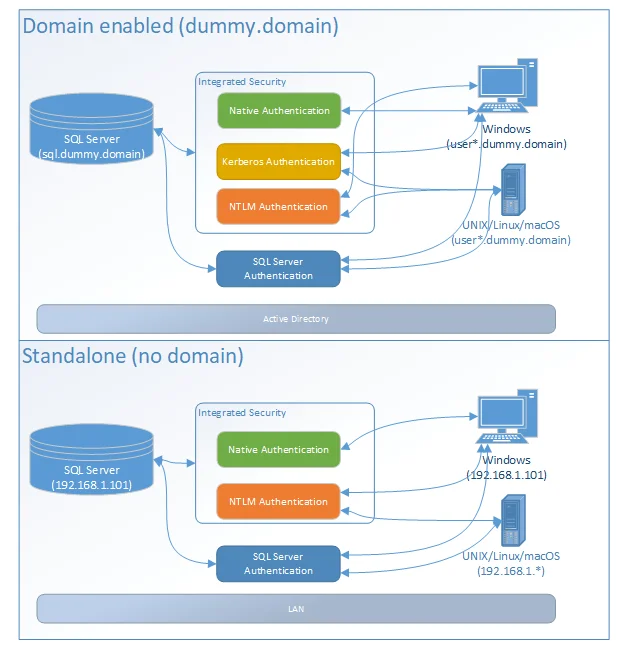

JDBC Integrated Security, NTLM and Kerberos Authentication for SQL Server

Prerequisites

Microsoft JDBC Driver 6.2 +

Download JDBC driver (6.2. or above) from the following location:

Download Microsoft JDBC Driver for SQL Server

I use JDBC driver 7.4.1 with JRE8. Once downloaded, install the exe or extract the zip file to a directory.

Spark 2.x

I'm using SparkSession object in the following sample code which is only available since Spark 2.x.

Sample code

We just need to ensure JDBC URL is in the following format:

jdbc:sqlserver://servername=server_name;integratedSecurity=true;authenticationScheme=JavaKerberos;userName=user@REALM;password=****

For userName and password we can also specify it in SparkSession.read APIs.

Complete sample

Please create a PySpark script file pypark-sql-jdbc-kerberos.py with the following content:

from pyspark.sql import SparkSession

# Create spark session

spark = SparkSession.builder \

.appName('Spark - SQL Server Kerberos Integrated Authentication Example') \

.master('local') \

.getOrCreate()

# Change this to your LANID in your organization domain

user = "raymond"

# Change this to your ogranization domain

domain = "GLOBAL.KONTEXT.TECH"

# Change to your LANID password

pwd = "password"

# Change to your own JDBC URL accordingly especially the server address and port

jdbc_url = """jdbc:sqlserver://MSSQL.GLOBAL.KONTEXT.TECH:1433;

integratedSecurity=true;authenticationScheme=JavaKerberos;

"""

# Change to your own SQL select statement

sql = """

SELECT * FROM sys.tables

"""

# Now read data

df = spark.read \

.format('jdbc') \

.option('driver','com.microsoft.sqlserver.jdbc.SQLServerDriver') \

.option('url',jdbc_url) \

.option('dbtable', '({sql} as src)'.format(sql=sql)) \

.option('user','{user}@{domain}'.format(user=user,domain=domain)) \

.option('password', pwd) \

.load()

df.show()

Remember change the variables accordingly to match with your own environment.

Run the code

Before we run the code, we need to ensure JDBC driver is passed in accordingly. Use the following command to run the application:

spark-submit --jars sqljdbc_7.4\enu\mssql-jdbc-7.4.1.jre8.jar pypark-sql-jdbc-kerberos.py

Make sure jar file path matches the JDBC driver file location of your environment. There can be multiple jar files for different JRE. In the above command, I am using the version for JRE8.

Reference

Refer to the following page about more information regards to Kerberos integrated authentication:

Using Kerberos integrated authentication to connect to SQL Server

Let me know if you have any questions.