TPT (Teradata Parallel Transporter) is a client suit that support multiple instances of data extracting, loading, and updating functions in a scalable, high-speed, parallel-processing environment. It utilize other client tools like FastLoad, FastExport, MultiLoad, etc. It is written with Java thus can run in most of the operating systems incl. Windows. On Windows, it also provides wizard GUI tools to help create TPT jobs easily.

This article provides examples of using TPT to load CSV/TSV files into Teradata. It also provides some resolutions to some common errors.

Teradata environment

Follow the following series to install Teradata and utility tools if you don't have a Teradata environment to work with.

Ensure all utilities are installed.

Create a sample CSV file

Create a sample CSV file named test_tpt.csv with the following content:

ID, Attr, Value, CreatedDate, Comments

1,A,10,2020-09-13,'This is a comment with ,'

2,B,20,2020-09-13,This is a comment without comma.

3,C,30,2020-09-13,This is a comment without comma.

4,D,40,2020-09-13,This is a comment without comma.

The file contains 5 attributes that need to be loaded into Teradata.

Save the file using ASCII encoding.

Create the destination table in Teradata

The table needs to exist in Teradata first before it can be loaded. Run the following SQL statement to create the table:

CREATE SET TABLE TestDb.TPT_TEST_CSV

(

ID VARCHAR(10) NOT NULL,

"Attr" VARCHAR(1),

"Value" VARCHAR(10),

CreatedDate VARCHAR(10),

Comments VARCHAR(255)

)

PRIMARY INDEX(ID);

Create TPT job using wizard

Now we can start to create TPT job using wizards.

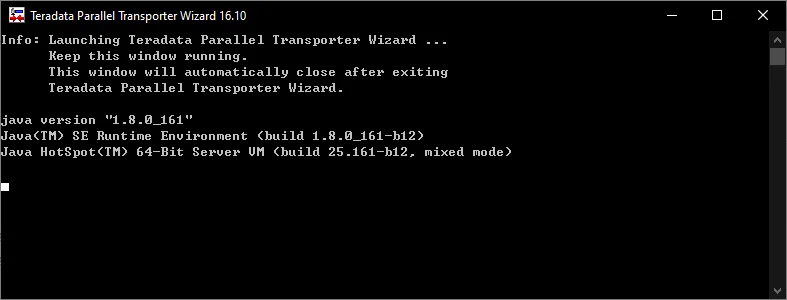

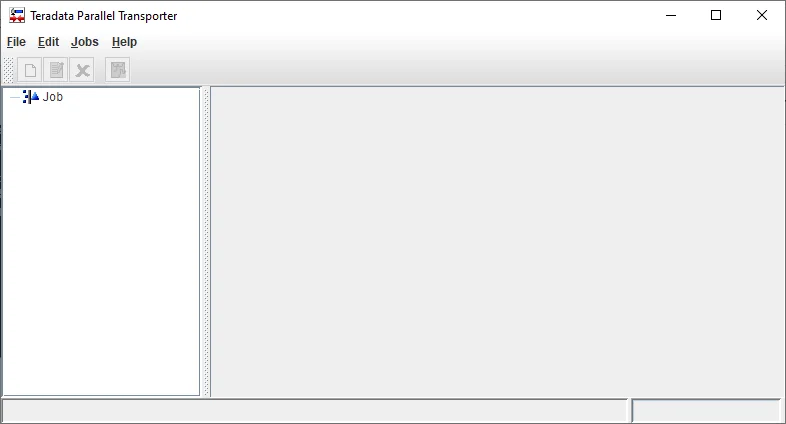

- Open tool Teradata Parallel Transporter Wizard.

It will open two windows: Command Prompt window and TPT Java GUI window as the following screenshots shows:

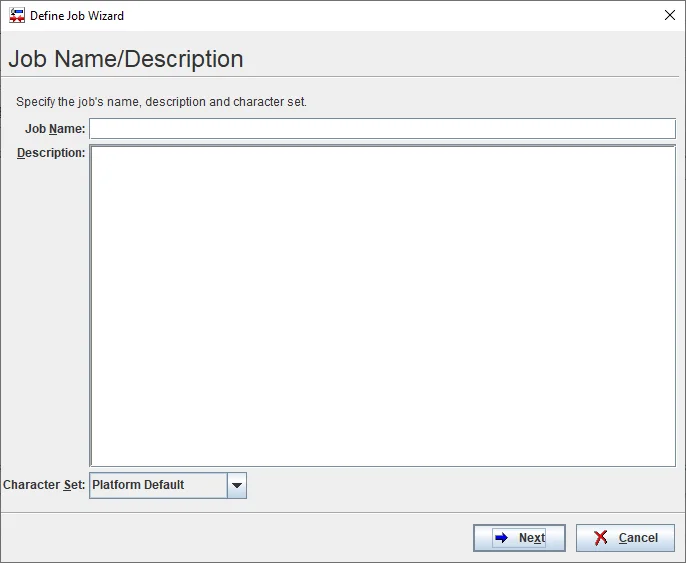

- Click menu Edit and then New to start Job Wizard.

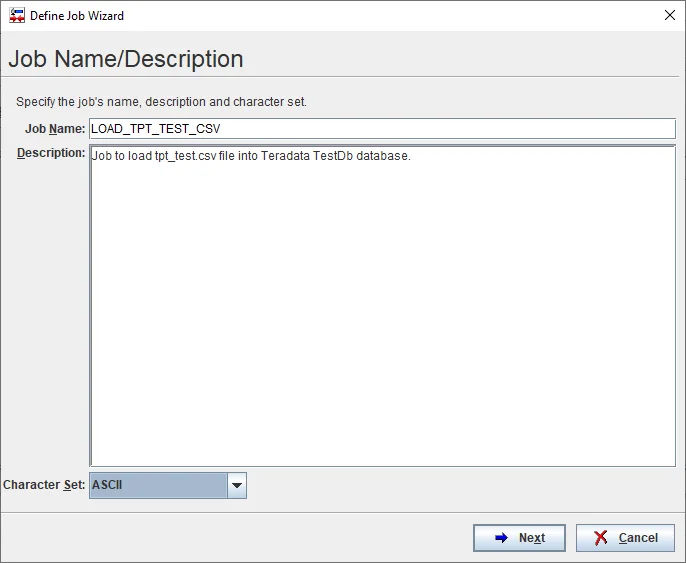

- Input Job details.

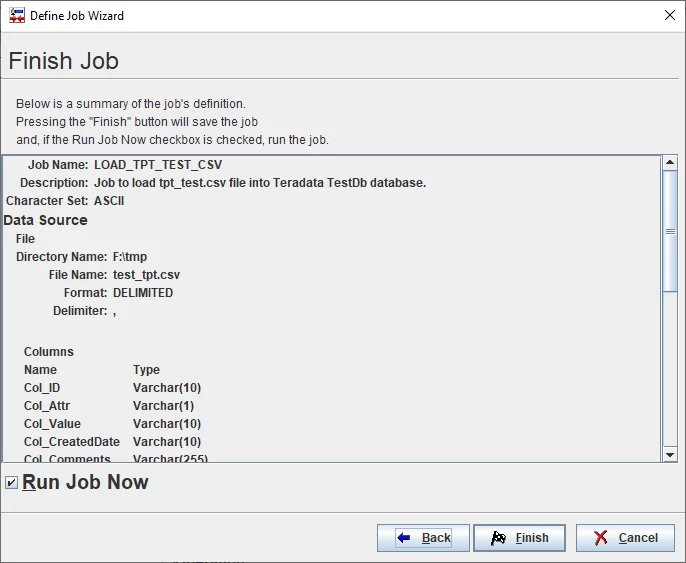

- Job Name: LOAD_TPT_TEST_CSV

- Description: Job to load tpt_test.csv file into Teradata TestDb database.

- Charset: ASCII (since we are not loading files with international characters).

Click Next button.

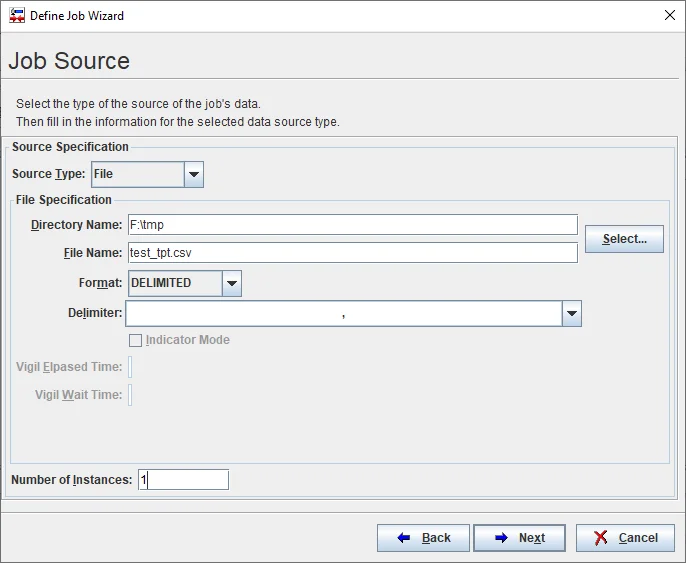

Specify source details accordingly.

- Source type: File

- File Specification: Format = DELIMITED; Delimiter: ,

Click Next button.

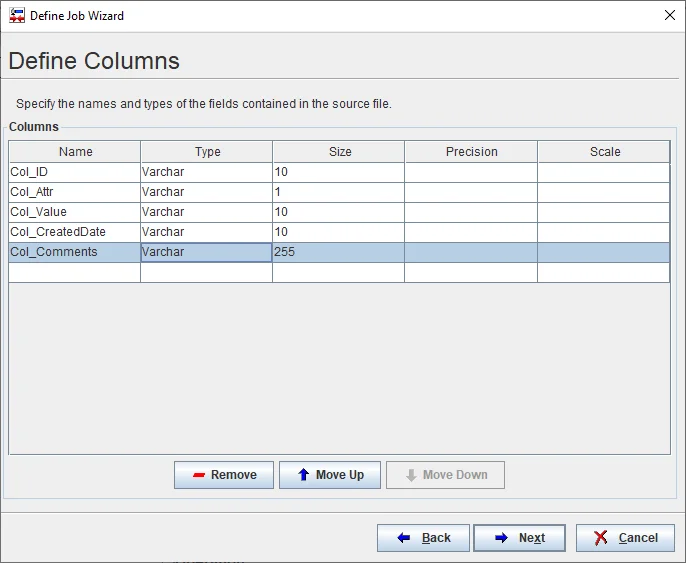

Define columns accordingly. As the source is delimited file, only Varchar type can be implemented here. Ensure the size is big enough to store all the values in the source file.

Click Next button.

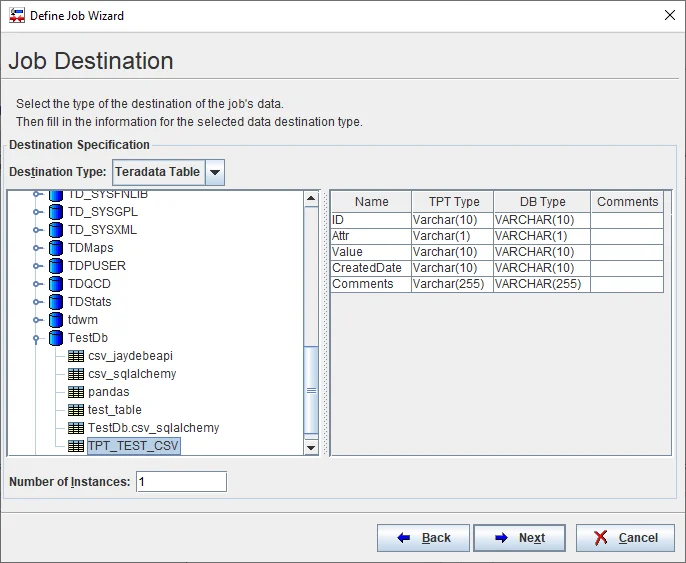

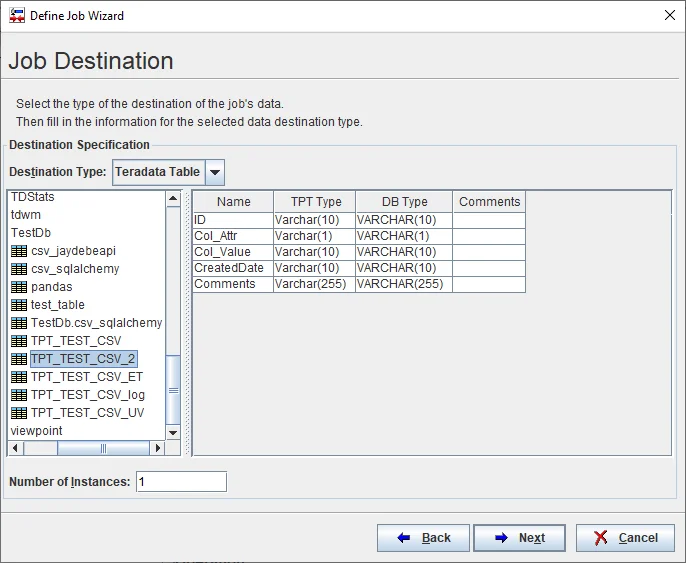

Config job destination details accordingly.

- Destination Type: Teradata Table

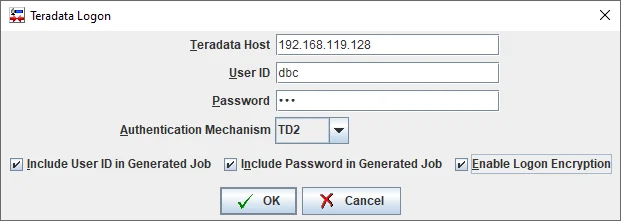

- Input Teradata connection details:

infoIn enterprise environment, authentication mechanism is usually required to be LDAP for better security. Please change the connection details accordingly.

- Select target table as the one created previously.

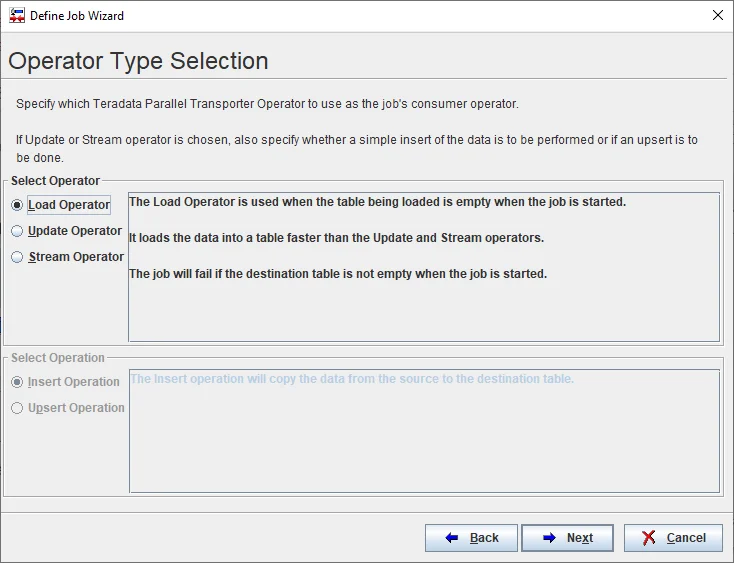

- Select operator type. For this case, select Load Operator. For this operator, the destination table must be empty when the job starts otherwise the job will fail.

infoTPT tool is very powerful to support UPSERT and Stream operators too. These options can be used to stream data into Teradata table from a file that is constantly being updated and or can be used to update existing records or insert new records only.

Click Next button.

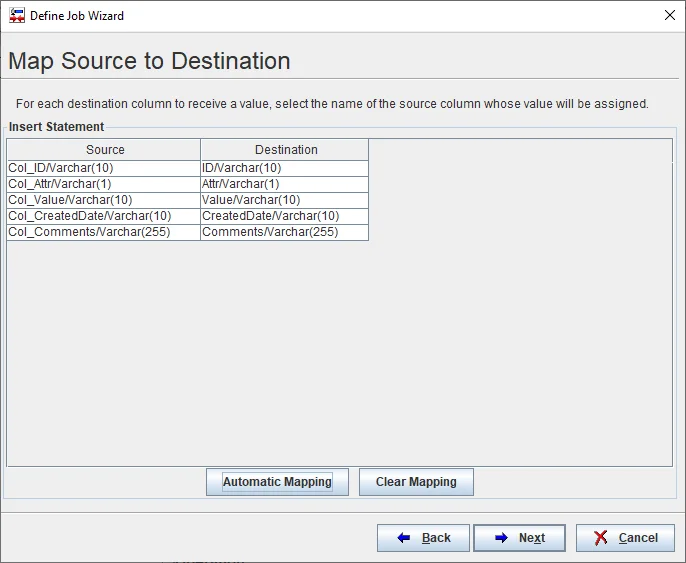

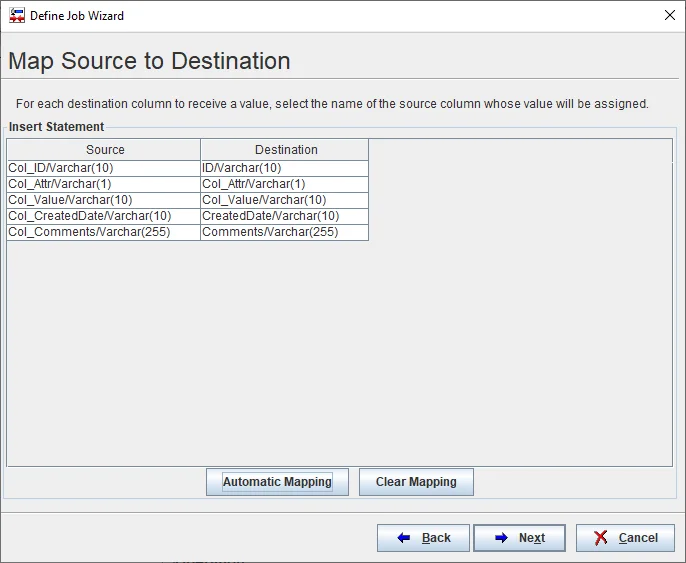

Map columns from source to target:

warning The data types must be matching exactly otherwise you won't be able to complete this step.

Click Next button.

Finish job design. Untick 'Run Job Now' checkbox.

- Click Finish button to complete the job creation wizard.

Run the job

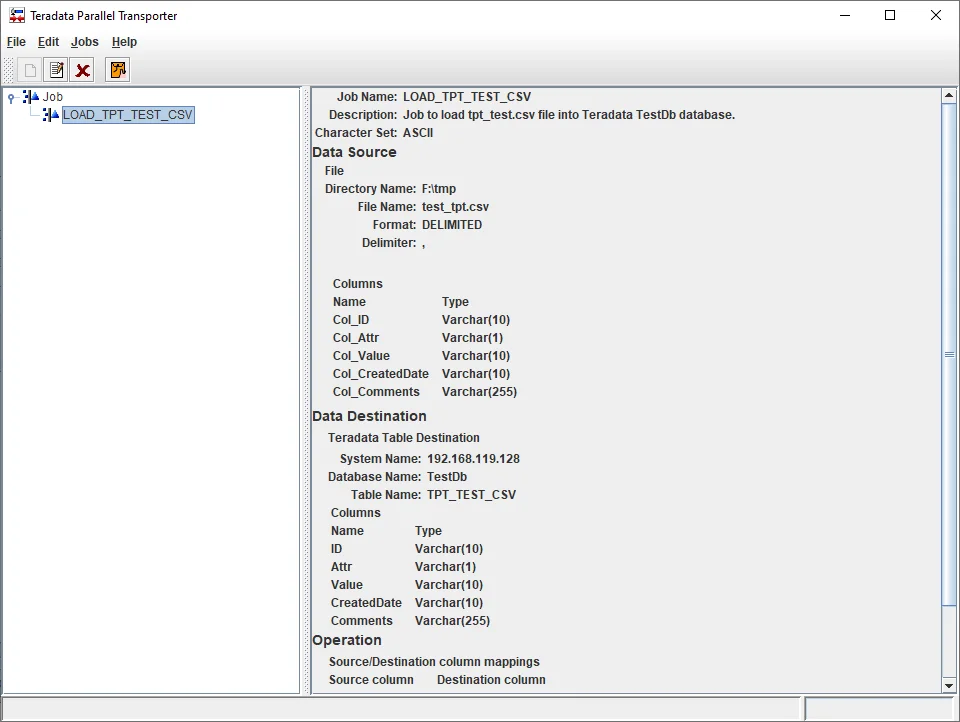

In TPT main window, you will be able to see the job created. You can further edit the job too.

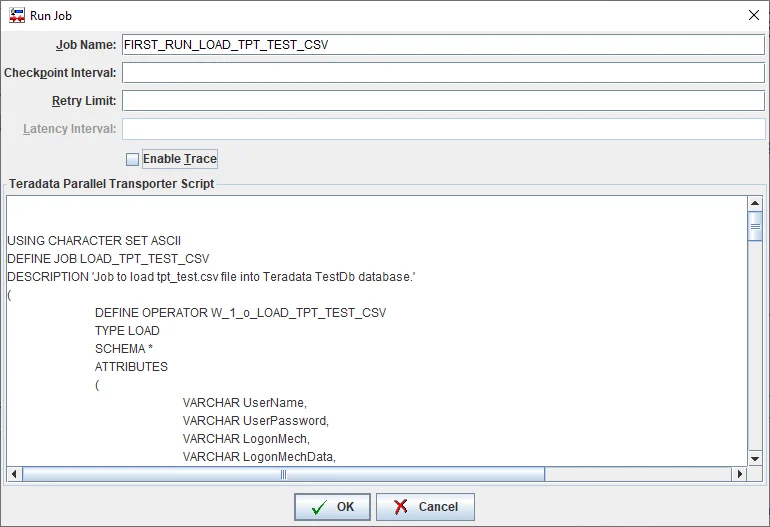

Click button Run job to run the job.

Input an instance job name:

Click OK button to submit the job.

Wait for the job to be completed. For this case, the job will fail due the following error:

TPT10508: RDBMS error 3707: Syntax error, expected something like a name or a Unicode delimited identifier or an 'UDFCALLNAME' keyword between ',' and the 'Value' keyword.

The root cause is because the generated INSERT statement is not valid as there is keywords in table column names:

'INSERT INTO TestDb.TPT_TEST_CSV (ID,Attr,Value,CreatedDate,Comments) VALUES (:Col_ID,:Col_Attr,:Col_Value,:Col_CreatedDate,:Col_Comments);'

To fix this error, we need to edit the job.

Fix the job

- Create another table using the following statement:

CREATE SET TABLE TestDb.TPT_TEST_CSV_2

(

ID VARCHAR(10) NOT NULL,

Col_Attr VARCHAR(1),

Col_Value VARCHAR(10),

CreatedDate VARCHAR(10),

Comments VARCHAR(255)

)

PRIMARY INDEX(ID);

Click Edit button in TPT window.

Navigate to step 'Job Destination' and select the newly created table:

Click Next button till the step of column mapping.

Map columns accordingly.

- Click Finish button to save all the changes.

Run job again

Now we can run the job again.

- Click Run button to submit job and input it a job name:

Click OK button to submit the job.

Wait for the job to be completed. For this case, the job will fail again due the following error:

W_0_o_LOAD_TPT_TEST_CSV[1]: TPT19134 !ERROR! Fatal data error processing file 'F:\tmp\test_tpt.csv'. Delimited Data Parsing error: Column length overflow(s) in row 1.

The above error occurred as the CSV file has a header line. We need to either remove the header line from the file or let TPT know to skip the header line.

Skip header line in CSV file

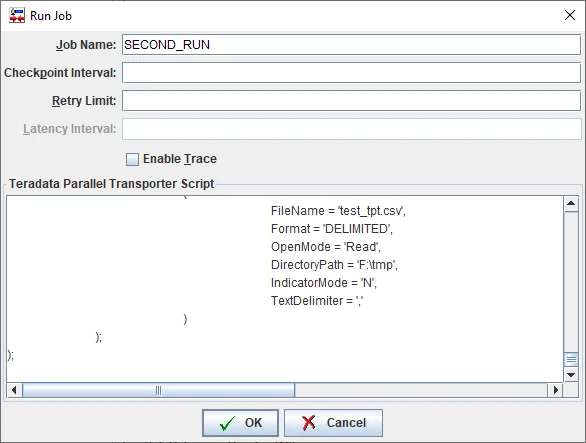

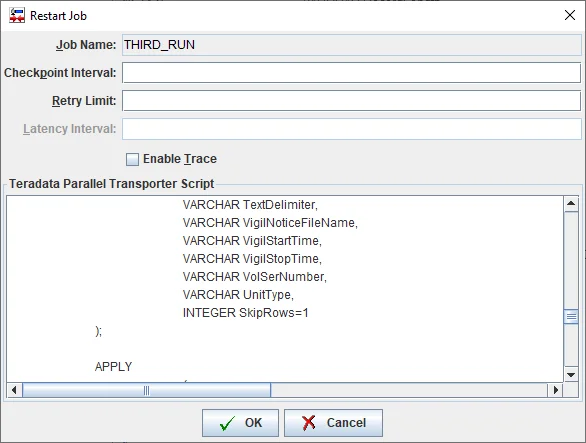

Click submit button again

Input a job name.

Before submit the job add an attribute in Producer to skip header line:

INTEGER SkipRows=1

The job fails with a new error:

W_0_o_LOAD_TPT_TEST_CSV[1]: TPT19134 !ERROR! Fatal data error processing file 'F:\tmp\test_tpt.csv'. Delimited Data Parsing error: Too many columns in row 2.

The error occurred because there is a delimiter in the second row of the CSV file.

Skip delimiter in double quotes

To resolve the issue, we just need to pass in additional attributes when submit the jobs.

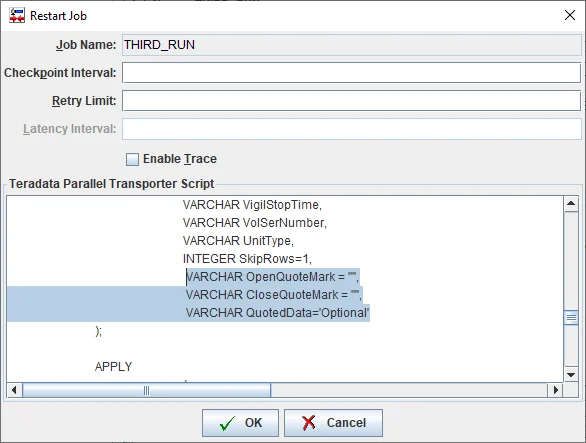

Resubmit the third job run.

Ensure the following attributes are added to the Producer operator:

VARCHAR OpenQuoteMark = '"',

VARCHAR CloseQuoteMark = '"',

VARCHAR QuotedData='Optional'

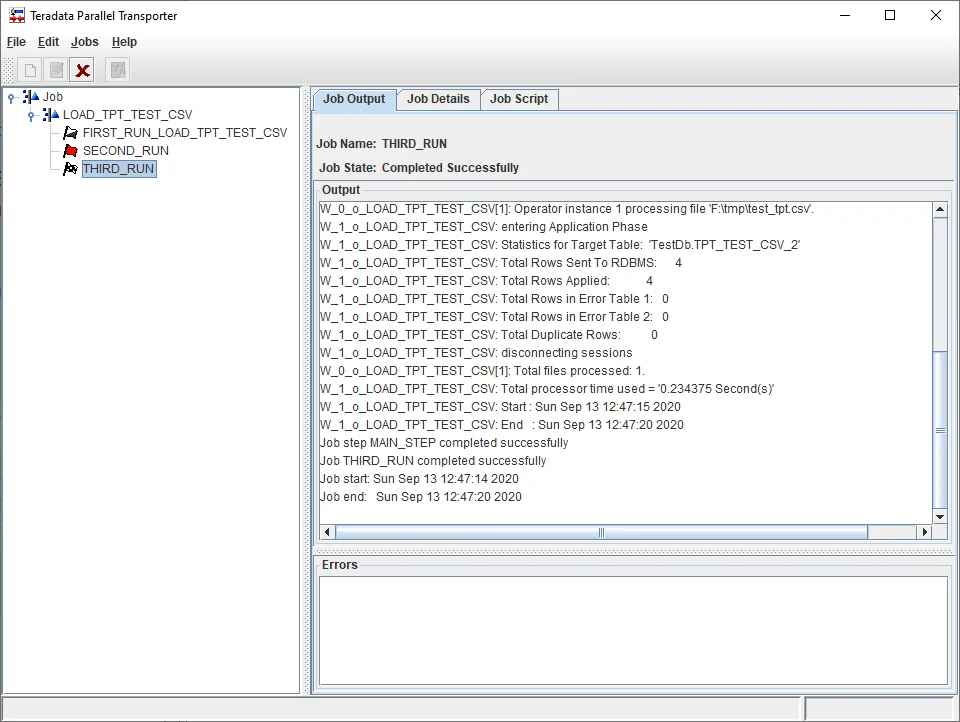

- Wait until the job is completed.

This time the job will complete successfully as the following screenshot shows:

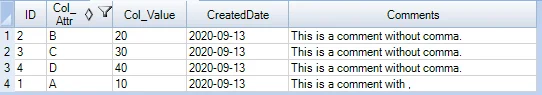

Verify data in database

Run the following statement to verify the data:

SEL * FROM TestDb.TPT_TEST_CSV_2;

The output looks like the following:

The four records are loaded into database successfully.

TPT script

The following is the generated TPT script by the wizard with changes we made to resolve errors:

USING CHARACTER SET ASCII

DEFINE JOB LOAD_TPT_TEST_CSV

DESCRIPTION 'Job to load tpt_test.csv file into Teradata TestDb database.'

(

DEFINE OPERATOR W_1_o_LOAD_TPT_TEST_CSV

TYPE LOAD

SCHEMA *

ATTRIBUTES

(

VARCHAR UserName,

VARCHAR UserPassword,

VARCHAR LogonMech,

VARCHAR LogonMechData,

VARCHAR LogTable,

VARCHAR TargetTable,

INTEGER BufferSize,

INTEGER ErrorLimit,

INTEGER MaxSessions,

INTEGER MinSessions,

INTEGER TenacityHours,

INTEGER TenacitySleep,

VARCHAR AccountID,

VARCHAR DateForm,

VARCHAR ErrorTable1,

VARCHAR ErrorTable2,

VARCHAR NotifyExit,

VARCHAR NotifyExitIsDLL,

VARCHAR NotifyLevel,

VARCHAR NotifyMethod,

VARCHAR NotifyString,

VARCHAR PauseAcq,

VARCHAR PrivateLogName,

VARCHAR TdpId,

VARCHAR TraceLevel,

VARCHAR WorkingDatabase

);

DEFINE SCHEMA W_0_s_LOAD_TPT_TEST_CSV

(

Col_ID VARCHAR(10),

Col_Attr VARCHAR(1),

Col_Value VARCHAR(10),

Col_CreatedDate VARCHAR(10),

Col_Comments VARCHAR(255)

);

DEFINE OPERATOR W_0_o_LOAD_TPT_TEST_CSV

TYPE DATACONNECTOR PRODUCER

SCHEMA W_0_s_LOAD_TPT_TEST_CSV

ATTRIBUTES

(

VARCHAR FileName,

VARCHAR Format,

VARCHAR OpenMode,

INTEGER BlockSize,

INTEGER BufferSize,

INTEGER RetentionPeriod,

INTEGER RowsPerInstance,

INTEGER SecondarySpace,

INTEGER UnitCount,

INTEGER VigilElapsedTime,

INTEGER VigilWaitTime,

INTEGER VolumeCount,

VARCHAR AccessModuleName,

VARCHAR AccessModuleInitStr,

VARCHAR DirectoryPath,

VARCHAR ExpirationDate,

VARCHAR IndicatorMode,

VARCHAR PrimarySpace,

VARCHAR PrivateLogName,

VARCHAR RecordFormat,

VARCHAR RecordLength,

VARCHAR SpaceUnit,

VARCHAR TextDelimiter,

VARCHAR VigilNoticeFileName,

VARCHAR VigilStartTime,

VARCHAR VigilStopTime,

VARCHAR VolSerNumber,

VARCHAR UnitType

,

INTEGER SkipRows=1,

VARCHAR OpenQuoteMark = '"',

VARCHAR CloseQuoteMark = '"',

VARCHAR QuotedData='Optional'

);

APPLY

(

'INSERT INTO TestDb.TPT_TEST_CSV_2 (ID,Col_Attr,Col_Value,CreatedDate,Comments) VALUES (:Col_ID,:Col_Attr,:Col_Value,:Col_CreatedDate,:Col_Comments);'

)

TO OPERATOR

(

W_1_o_LOAD_TPT_TEST_CSV[1]

ATTRIBUTES

(

UserName = 'dbc',

UserPassword = 'dbc',

LogonMech = 'TD2',

LogTable = 'TestDb.TPT_TEST_CSV_2_log',

TargetTable = 'TestDb.TPT_TEST_CSV_2',

TdpId = '192.168.119.128'

)

)

SELECT * FROM OPERATOR

(

W_0_o_LOAD_TPT_TEST_CSV[1]

ATTRIBUTES

(

FileName = 'test_tpt.csv',

Format = 'DELIMITED',

OpenMode = 'Read',

DirectoryPath = 'F:\tmp',

IndicatorMode = 'N',

TextDelimiter = ','

)

);

);

With TPT scripts, we can directly run the job using tbuildcommand line.

Save the script as file load_tpt_csv.tpt.

Run the following command to run the job:

tbuild -f load_tpt_csv.tpt

The command line can be scheduled to run regularly.