Follow this guide to install Kontext Copilot (the tool).

Prerequisites

Python 3.9+

The tool was built with Python 3.9.17 and should also work with later versions of Python. If Python is not installed yet, download and install it from the official Python website.
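Running `python3 --version` shows which interpreter is active. If you prefer to check from Python itself, a minimal sketch like the following compares the running version against the 3.9 minimum stated above. `python_is_supported` is a hypothetical helper for illustration, not part of Kontext Copilot.

```python
import sys

# Minimum version stated in this guide (the tool was built with 3.9.17).
MIN_VERSION = (3, 9)


def python_is_supported(version_info=sys.version_info, minimum=MIN_VERSION) -> bool:
    """Return True if the (major, minor) part of version_info meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if python_is_supported():
        print(f"Python {current} meets the 3.9+ requirement.")
    else:
        print(f"Python {current} is too old; install Python 3.9 or later.")
```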

Ollama

Ollama helps you get up and running with large language models (LLMs) on your laptop. You can use it to run Llama 3.1, Phi 3, Mistral, Gemma 2, and other models. Go to the Download Ollama page to download and install Ollama.

Kontext Copilot aims to work with most LLM hosting tools and services, including Ollama, LM Studio, vLLM, AWS Bedrock, etc. However, due to time constraints, Ollama is currently the only one supported and tested. Support for other services will be added gradually.

Once Ollama is installed, pull at least one of the following models (or any other model you prefer):

ollama pull phi3:3.8b

ollama pull llama3.1:8b

llama3.1:8b or a larger model is recommended for laptops with powerful hardware.
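`ollama list` shows which models have been pulled. The same list is available from Ollama's local REST API at `/api/tags`; the sketch below reads it and reports which of the models above are still missing. It assumes Ollama listens on its default address, `http://localhost:11434`; the helper names are hypothetical and not part of Kontext Copilot.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Fetch the raw model list from Ollama's /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def installed_models(tags: dict) -> set:
    """Extract model names such as 'llama3.1:8b' from an /api/tags response."""
    return {model["name"] for model in tags.get("models", [])}


def missing_models(tags: dict, wanted: list) -> list:
    """Return the wanted models that have not been pulled yet, in order."""
    have = installed_models(tags)
    return [name for name in wanted if name not in have]


if __name__ == "__main__":
    wanted = ["phi3:3.8b", "llama3.1:8b"]
    try:
        missing = missing_models(fetch_tags(), wanted)
    except OSError as exc:  # connection refused, timeout, HTTP error status, ...
        print(f"Could not reach Ollama: {exc}")
    else:
        for name in missing:
            print(f"Not pulled yet, run: ollama pull {name}")
```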

On Windows, make sure the Ollama service is running:

ollama ps

If it is not running, start the daemon service with the following command:

ollama serve
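After starting `ollama serve`, the server can take a moment to accept connections. A minimal sketch for polling it over HTTP is shown below, assuming the default address `http://localhost:11434`, whose root URL answers with HTTP 200 when the server is up. `ollama_is_up` and `wait_for_ollama` are hypothetical helpers for illustration.

```python
import time
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_up(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if the Ollama server answers HTTP 200 at its root URL."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # connection refused, timeout, or an HTTP error status
        return False


def wait_for_ollama(base_url: str = OLLAMA_URL,
                    attempts: int = 10,
                    delay: float = 1.0) -> bool:
    """Poll the server up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if ollama_is_up(base_url):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, `wait_for_ollama()` returns `True` within about ten seconds if the daemon comes up, and `False` otherwise.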

Computer with GPU (not mandatory)

LLMs run faster with a GPU. Loading the phi3:3.8b model requires at least 3.5 GiB of GPU memory; for the llama3.1:8b model, 6 GB of GPU memory is recommended.

For NVIDIA GPUs, you can check the details using nvidia-smi:

nvidia-smi
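To compare your GPU against the memory figures above, `nvidia-smi` can print a machine-readable summary with `--query-gpu=name,memory.total,memory.free --format=csv,noheader,nounits` (memory values in MiB). The sketch below parses that output and lists which of the guide's models fit in free memory. The thresholds come from the requirements above, with 6 GB treated as 6 GiB for simplicity; using free rather than total memory is an assumption, and the helper names are hypothetical.

```python
import subprocess

# Requirements from this guide, in MiB (6 GB treated as 6 GiB here).
REQUIRED_MIB = {"phi3:3.8b": 3.5 * 1024, "llama3.1:8b": 6 * 1024}

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total,memory.free",
         "--format=csv,noheader,nounits"]


def parse_gpus(csv_text: str) -> list:
    """Parse lines like 'NVIDIA GeForce RTX 3060, 12288, 11950' (values in MiB)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split from the right so the GPU name is kept whole.
        name, total, free = (part.strip() for part in line.rsplit(",", 2))
        gpus.append({"name": name, "total_mib": int(total), "free_mib": int(free)})
    return gpus


def models_that_fit(gpu: dict) -> list:
    """List the guide's models whose stated requirement fits in free GPU memory."""
    return [model for model, need in REQUIRED_MIB.items() if gpu["free_mib"] >= need]


if __name__ == "__main__":
    try:
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError):
        print("nvidia-smi is not available (no NVIDIA GPU or driver found).")
    else:
        for gpu in parse_gpus(out):
            print(gpu["name"], "->", models_that_fit(gpu) or "no listed model fits")
```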

Install the tool

Now you can install the Kontext Copilot tool, optionally inside a virtual environment.

Create virtual environment:

python3 -m venv .venv-kontext-copilot

Activate the virtual environment:

# for Windows:
.venv-kontext-copilot\Scripts\activate

# for macOS or UNIX/Linux:
source .venv-kontext-copilot/bin/activate

Install the package:

pip install kontext-copilot

Done.
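If you are unsure whether activation worked, you can check from the interpreter itself. This minimal sketch relies on the standard `venv` behavior: inside an environment, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation. `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv created with `python -m venv`."""
    # venv sets sys.prefix to the environment directory, while
    # sys.base_prefix keeps pointing at the base interpreter installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    where = "inside a virtual environment" if in_virtualenv() else "outside any virtual environment"
    print(f"This interpreter is running {where}: {sys.prefix}")
```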

Launch the tool

Run the following command to start Kontext Copilot:

kontext-copilot

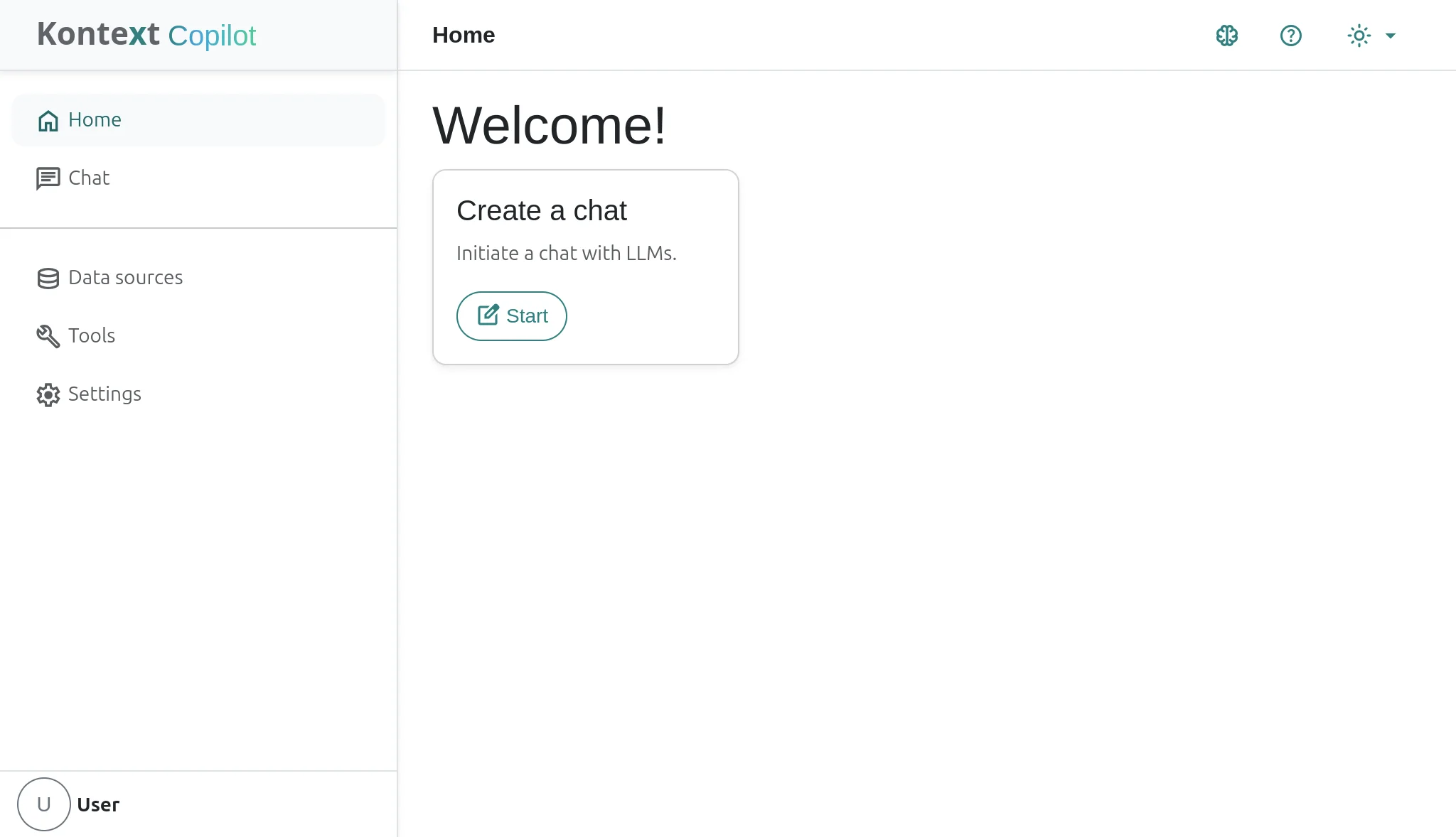
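If the shell reports that `kontext-copilot` is not found, the virtual environment where it was installed is usually not active. A quick sketch using the standard library's `shutil.which` checks whether the command is on your `PATH`; `find_launcher` is a hypothetical helper for illustration.

```python
import shutil
from typing import Optional


def find_launcher(command: str = "kontext-copilot") -> Optional[str]:
    """Return the full path of the command if it is on PATH, else None."""
    return shutil.which(command)


if __name__ == "__main__":
    path = find_launcher()
    if path:
        print(f"Launcher found at {path}")
    else:
        print("kontext-copilot is not on PATH; activate the virtual environment first.")
```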

Verify the website

Open the following URL in your browser:

You should be able to see a website like the following screenshot:

Reinstall

Run the following command to force a reinstall of the latest version of Kontext Copilot:

pip install --force-reinstall kontext-copilot
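`pip show kontext-copilot` prints the installed version after a reinstall. From Python, the standard library's `importlib.metadata` exposes the same information; the sketch below wraps it so that a missing package returns `None` instead of raising. `installed_version` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "kontext-copilot") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    print(f"kontext-copilot {found}" if found else "kontext-copilot is not installed")
```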

Source code

Get started

Refer to this guide to get started: Get started with Kontext Copilot.

Debug errors

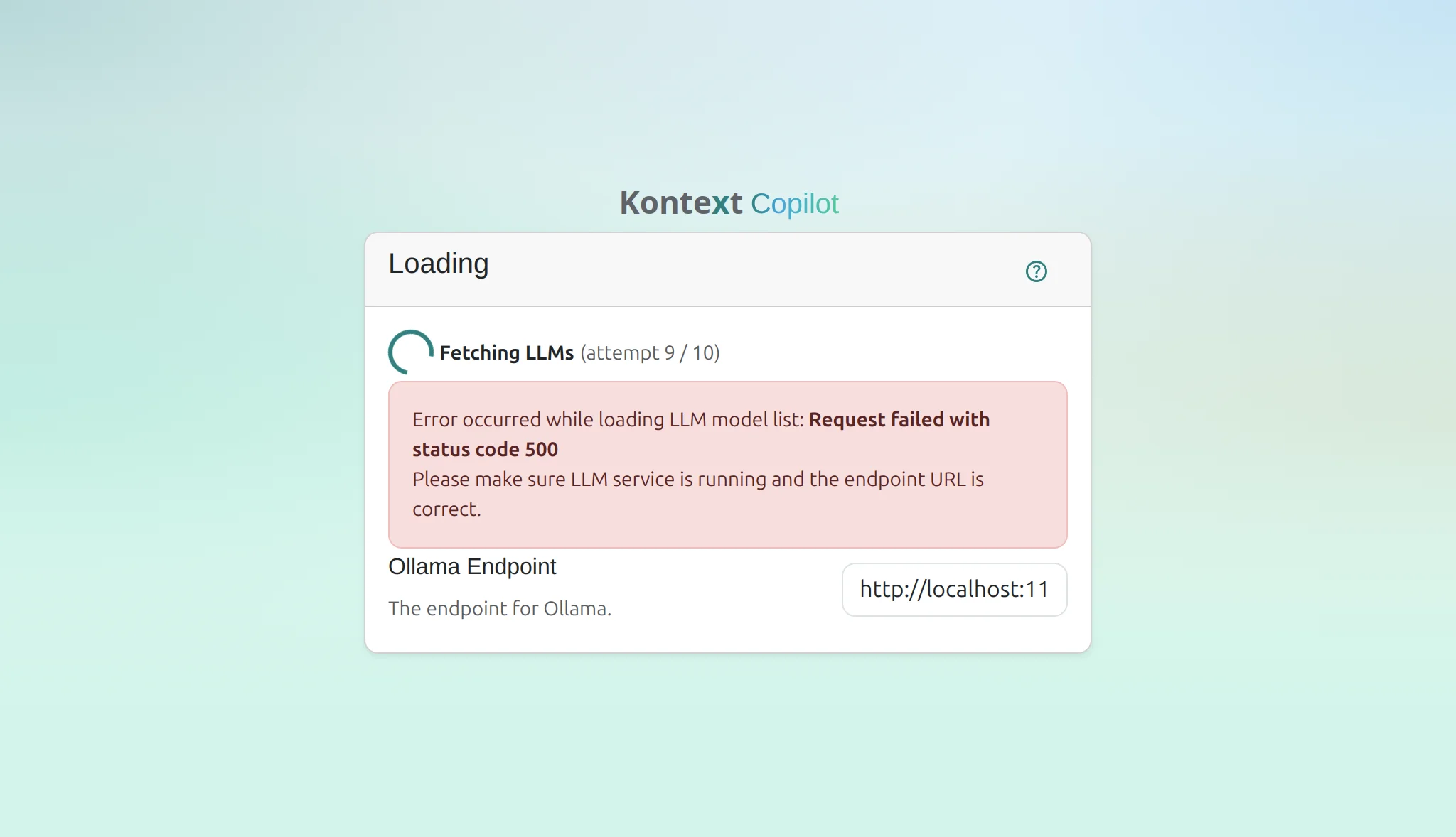

LLMs not running

If you encounter an error like the following, make sure the Ollama service is running:

Error occurred while loading LLM model list: Request failed with status code 500 Please make sure LLM service is running and the endpoint URL is correct.
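The message above can mean two different things: the server is unreachable (not started, or a wrong endpoint URL), or it is reachable but returns an error status such as 500. A minimal sketch that tells these apart by requesting Ollama's model list at `/api/tags` is shown below. It assumes the endpoint is Ollama's default local address; `diagnose_model_list` is a hypothetical helper, not part of Kontext Copilot.

```python
import urllib.error
import urllib.request

# Assumption: the LLM endpoint is Ollama's default local address.
ENDPOINT = "http://localhost:11434"


def diagnose_model_list(endpoint: str = ENDPOINT, timeout: float = 5.0) -> str:
    """Request the model list and describe what went wrong, if anything."""
    try:
        with urllib.request.urlopen(f"{endpoint}/api/tags", timeout=timeout):
            return "ok: the model list endpoint responded"
    except urllib.error.HTTPError as exc:  # reachable, but returned an error status
        return f"http error {exc.code}: the server answered but failed; check its logs"
    except urllib.error.URLError as exc:  # nothing listening, wrong host, DNS failure
        return f"unreachable: {exc.reason}; start Ollama or fix the endpoint URL"
    except OSError as exc:  # e.g. a timeout while reading the response
        return f"unreachable: {exc}"
```

HTTPError is caught before URLError because it is a subclass of it.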

For any other errors, feel free to leave a comment here.