Data Intelligence Platform

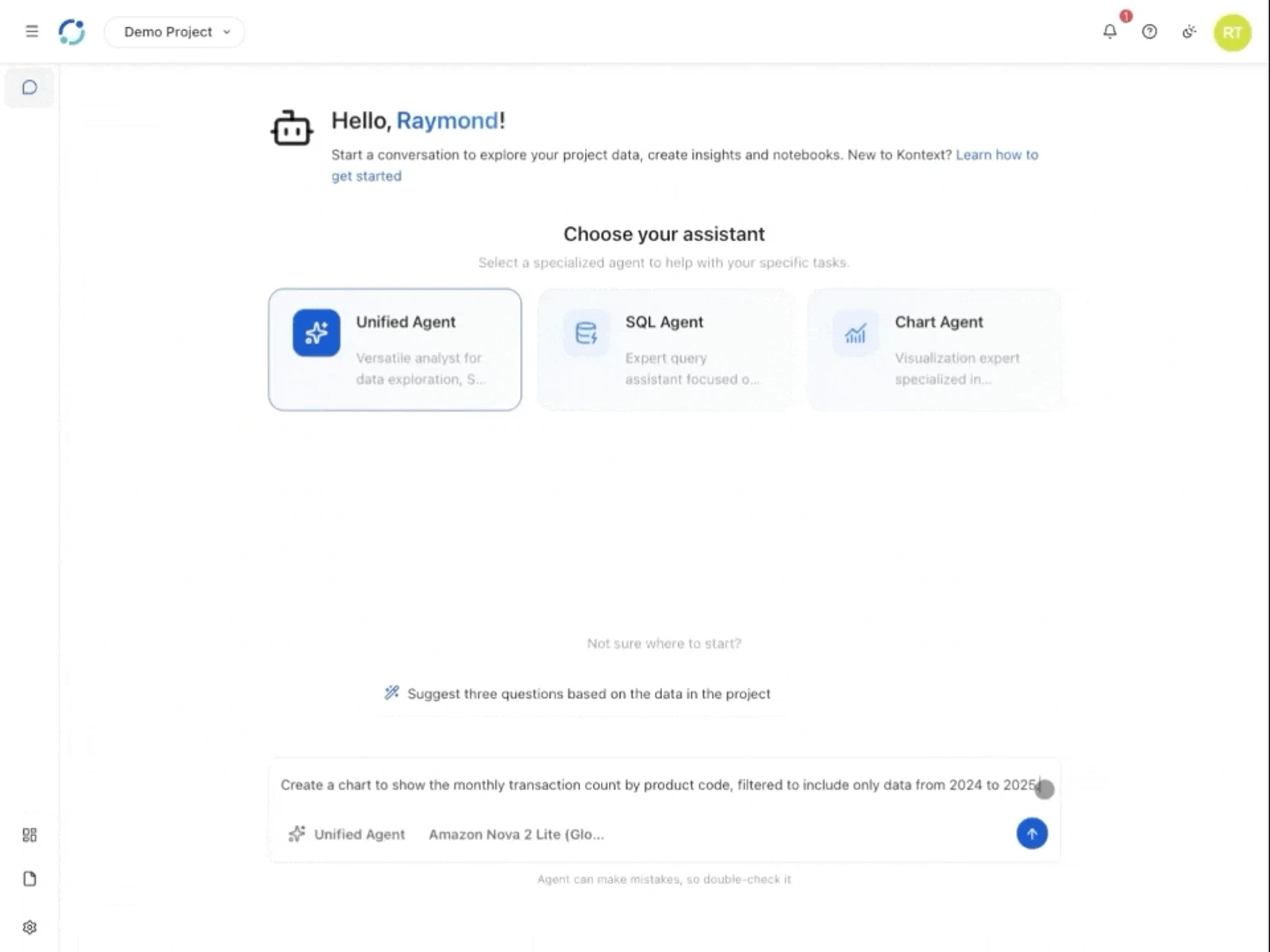

Kontext Labs is a next-generation data intelligence platform that turns raw data into insights in minutes. Autonomous data agents analyze and visualize your data and deliver actionable insights, all through natural language.

The Context of Your Data

Scale your analytics maturity from simple questions to autonomous data science.

Ask Data Questions

Query your data simply and effectively in natural language.

Visualise Key Trends

Generate instant charts to spot trends and outliers visually.

Create Notebooks

Deep dive with notebooks combining code, visuals, and narrative.

Automate Data Science

Our agent creates code and ML workflows for forecasting.

Data intelligence, simplified.

A unified platform designed to bridge the gap between technical complexity and business value.

We've built a 'non-technical first' interface that doesn't compromise on power. Whether you are a Business Owner or an Analyst, the platform adapts to your needs.

Deep Data Understanding

Ask questions like 'Melbourne Sales' and get instant trends. The platform analyzes the actual content of your data, not just its metadata.

Collaborative AI Agents and Teams

Specialized agents for BI and Data Science allow teams to co-edit and share artifacts instantly.

Start small and scale as you grow. Our architecture is designed to handle massive data volumes without the high costs usually associated with big data.

Elastic Scalability

Seamlessly expand from a single table to thousands without re-architecting.

Multi-Cloud Ready

Full support for Azure and AWS, with GCP integration in progress.

Maintain full control over your data. We support your data sovereignty with flexible deployment options for strict enterprise environments.

Private Cloud Deployment

Deploy directly into your own environment for maximum data sovereignty.

Regional Data Residency

Deploy to sovereign regions to ensure data isolation and residency.

From Data to Insights

A seamless, autonomous loop that powers your decision-making.

Your Command

Guide your data's journey using simple, natural conversation.

1. Connect Your Data

Easily bring in information from your databases, files, or the cloud.

2. Intelligent Data Lake

Your data is automatically organized and made ready for exploration.

3. Assistant Agents

AI helpers that answer questions, find patterns, and create reports instantly.

4. Shared Insights

Beautiful charts and notes that capture every discovery, ready to share with your team.

Latest Updates

Stay informed with our latest news, features, and insights.

Join Kontext Labs Platform Pilot

We invite everyone, including AI enthusiasts, business owners, researchers, students, and analysts, to join our pilot phase and help shape the future of data intelligence.

Kontext Platform Sign-Up Update

Kontext Charts Colour Palettes

Start Analyzing Data Today

Upload files, query them in natural language, and let data agents turn the results into visuals and insights.