This detailed step-by-step guide shows you how to install Hadoop 3.2.1 (the latest release at the time of writing) on Windows 10. It builds on my previous articles, updated with feedback from readers to make the installation easier for everyone.

Please follow all the instructions carefully. Once you complete the steps, you will have a shiny pseudo-distributed, single-node Hadoop installation to work with.

*The yellow elephant logo is a registered trademark of Apache Hadoop; the blue window logo is a registered trademark of Microsoft.

References

Refer to the following articles if you prefer to install another version of Hadoop, configure a multi-node cluster, or use WSL.

- Install Hadoop 3.0.0 on Windows (Single Node)

- Configure Hadoop 3.1.0 in a Multi Node Cluster

- Install Hadoop 3.2.0 on Windows 10 using Windows Subsystem for Linux (WSL)

Required tools

Before you start, make sure the following tools are available in Windows 10.

| Tool | Comments |
|---|---|
| PowerShell | We will use this tool to download the package. On my system, the PowerShell version table is as follows: <br>$PSVersionTable<br><br>Name Value<br>---- -----<br>PSVersion 5.1.18362.145<br>PSEdition Desktop<br>PSCompatibleVersions {1.0, 2.0, 3.0, 4.0...}<br>BuildVersion 10.0.18362.145<br>CLRVersion 4.0.30319.42000<br>WSManStackVersion 3.0<br>PSRemotingProtocolVersion 2.3<br>SerializationVersion 1.1.0.1<br> |
| Git Bash or 7-Zip | We will use Git Bash or 7-Zip to unpack the Hadoop binary package. You can install either tool, or any other tool that can unpack *.tar.gz files on Windows. |
| Command Prompt | We will use it to start the Hadoop daemons and run some commands as part of the installation process. |
| Java JDK | A JDK is required to run Hadoop because the framework is built in Java. On my system, the JDK version is jdk1.8.0_161. Check the supported JDK versions on the following page: https://cwiki.apache.org/confluence/display/HADOOP/Hadoop+Java+Versions |

Now we will start the installation process.

Step 1 - Download Hadoop binary package

Select download mirror link

Go to the download page on the official website:

Apache Download Mirrors - Hadoop 3.2.1

Then choose one of the mirror links. The page lists the mirrors closest to you based on your location. I chose the following mirror link:

http://apache.mirror.digitalpacific.com.au/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz

info In the following sections, this URL will be used to download the package. Your URL might be different from mine and you can replace the link accordingly.

Download the package

info In this guide, I am installing Hadoop in the folder big-data on my F drive (F:\big-data). Create this folder before you continue, because the download command below does not create it. If you prefer to install on another drive, remember to change the path accordingly in the following commands. This directory is referred to as the destination directory in the following sections.

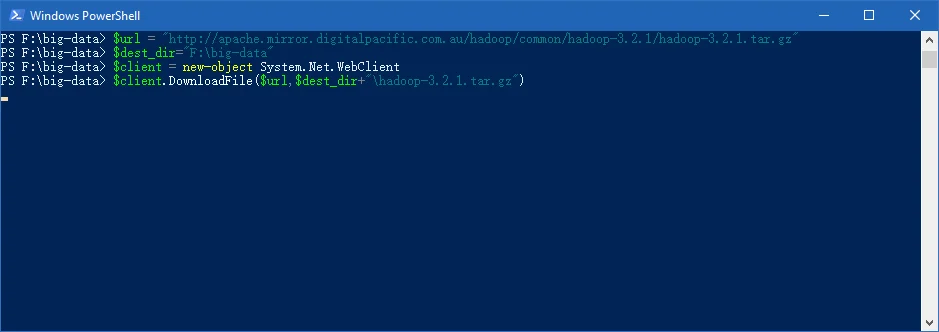

Open PowerShell and then run the following command lines one by one:

$dest_dir="F:\big-data"

$url = "http://apache.mirror.digitalpacific.com.au/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz"

$client = new-object System.Net.WebClient

$client.DownloadFile($url,$dest_dir+"\hadoop-3.2.1.tar.gz")

It may take a few minutes to download.

Once the download completes, you can verify it:

PS F:\big-data> cd $dest_dir

PS F:\big-data> ls

Directory: F:\big-data

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a---- 18/01/2020 11:01 AM 359196911 hadoop-3.2.1.tar.gz

PS F:\big-data>

You can also directly download the package through your web browser and save it to the destination directory.
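Whichever way you download the archive, it is worth checking that it arrived intact. The following is a minimal sketch for Git Bash, not part of the original steps: verify_sha512 is a hypothetical helper name, and it assumes you copy the expected digest yourself from the .sha512 file that Apache publishes next to the release archive.

```shell
#!/usr/bin/env bash
# verify_sha512 FILE EXPECTED_HEX   (hypothetical helper, not shipped with Hadoop)
# Succeeds (exit status 0) only when the SHA-512 digest of FILE equals EXPECTED_HEX.
verify_sha512() {
  local file="$1" expected="$2" actual
  [ -f "$file" ] || return 1
  # Read the file via stdin so the output is always "<digest>  -",
  # whatever characters the file name contains.
  actual="$(sha512sum < "$file" | awk '{print $1}')"
  # Normalise the expected value: drop whitespace, lowercase the hex digits.
  expected="$(printf '%s' "$expected" | tr -d '[:space:]' | tr 'A-F' 'a-f')"
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}
```

For example, verify_sha512 F:/big-data/hadoop-3.2.1.tar.gz "<digest from the .sha512 file>" && echo OK prints OK only when the two digests match.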

warning Please keep this PowerShell window open, as we will reuse some of its variables in the following steps. If you have already closed it, just remember to reinitialise the variables $client and $dest_dir.

Step 2 - Unpack the package

Now we need to unpack the downloaded package using a GUI tool (such as 7-Zip) or the command line. I will use Git Bash to unpack it.

Open Git Bash and change the directory to the destination folder:

cd F:/big-data

And then run the following command to unzip:

tar -xvzf hadoop-3.2.1.tar.gz

The command will take a few minutes because the package contains a large number of files.

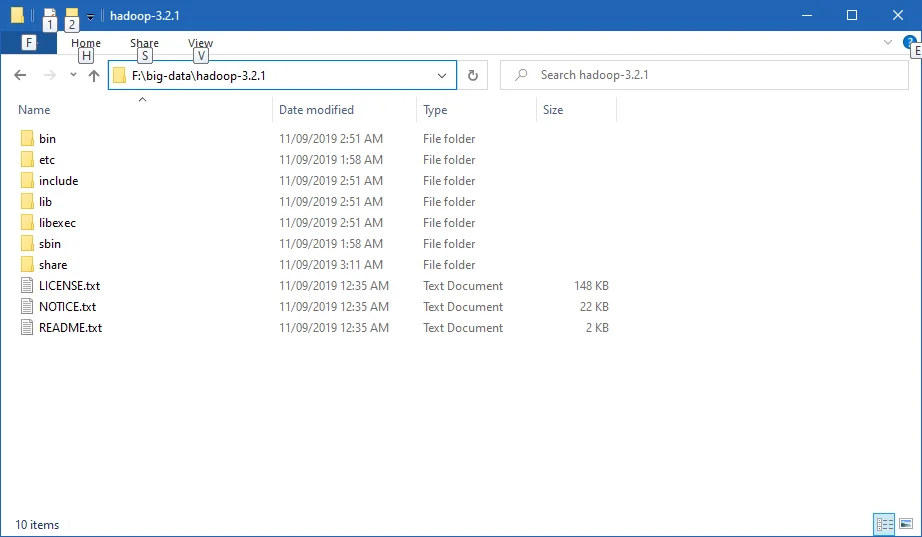

After the unzip command is completed, a new folder hadoop-3.2.1 is created under the destination folder.

info When running the command, you may see errors like the following:

tar: hadoop-3.2.1/lib/native/libhadoop.so: Cannot create symlink to ‘libhadoop.so.1.0.0’: No such file or directory

Please ignore them for now: those native libraries are for Linux/UNIX, and we will install the Windows native IO libraries in the following steps.

Step 3 - Install Hadoop native IO binary

Hadoop on Linux includes optional native IO support. On Windows, however, native IO is mandatory; without it, your installation will not work. The Windows native IO libraries are not included in the Apache Hadoop release, so we need to build and install them.

I also published another article with very detailed steps about how to compile and build native Hadoop on Windows: Compile and Build Hadoop 3.2.1 on Windows 10 Guide.

The build may take about an hour, so to save time we can simply download the pre-built binaries from GitHub.

info The following repository provides pre-built Hadoop native libraries for Windows: https://github.com/cdarlint/winutils

warning These libraries are not signed and there is no guarantee that they are 100% safe. We use them purely for testing and learning purposes.

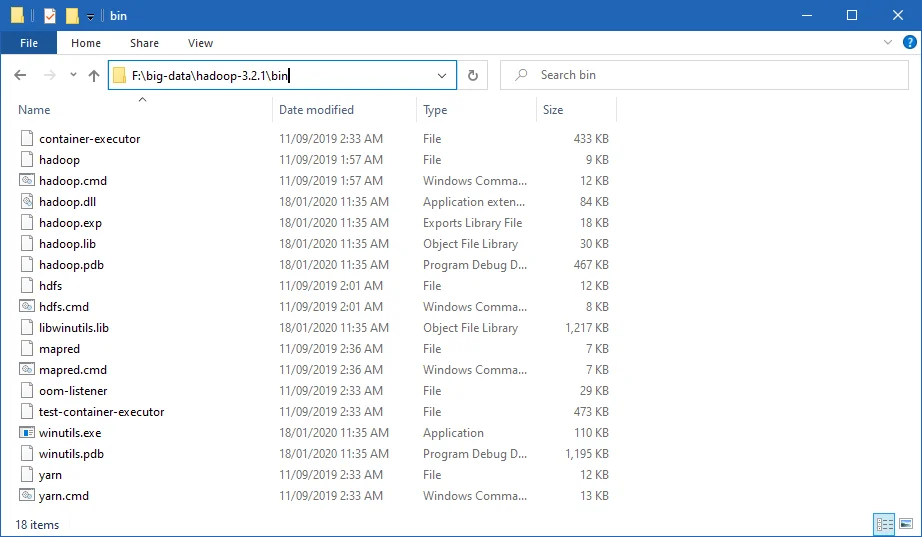

Download all the files in the hadoop-3.2.1/bin folder of the cdarlint/winutils repository and save them to the bin folder under your Hadoop folder. For my environment, the full path is: F:\big-data\hadoop-3.2.1\bin. Remember to change it to your own path accordingly.

Alternatively, you can run the following commands in the previous PowerShell window to download:

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/hadoop.dll",$dest_dir+"\hadoop-3.2.1\bin\"+"hadoop.dll")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/hadoop.exp",$dest_dir+"\hadoop-3.2.1\bin\"+"hadoop.exp")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/hadoop.lib",$dest_dir+"\hadoop-3.2.1\bin\"+"hadoop.lib")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/hadoop.pdb",$dest_dir+"\hadoop-3.2.1\bin\"+"hadoop.pdb")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/libwinutils.lib",$dest_dir+"\hadoop-3.2.1\bin\"+"libwinutils.lib")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/winutils.exe",$dest_dir+"\hadoop-3.2.1\bin\"+"winutils.exe")

$client.DownloadFile("https://github.com/cdarlint/winutils/raw/master/hadoop-3.2.1/bin/winutils.pdb",$dest_dir+"\hadoop-3.2.1\bin\"+"winutils.pdb")
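If one of the downloads fails silently, the problem usually only shows up much later. As a rough sketch (missing_native_files is a hypothetical helper name, and the file list is simply the seven files downloaded above), you could check the bin folder from Git Bash like this:

```shell
#!/usr/bin/env bash
# missing_native_files HADOOP_DIR   (hypothetical helper)
# Prints the name of each expected native file that is absent from HADOOP_DIR/bin.
# Exit status is 0 when nothing is missing, 1 otherwise.
missing_native_files() {
  local bin_dir="$1/bin" missing=0 name
  for name in hadoop.dll hadoop.exp hadoop.lib hadoop.pdb \
              libwinutils.lib winutils.exe winutils.pdb; do
    if [ ! -f "$bin_dir/$name" ]; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}
```

Running missing_native_files F:/big-data/hadoop-3.2.1 prints nothing when all seven files are in place.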

After this, the bin folder looks like the following:

Step 4 - (Optional) Java JDK installation

Java JDK is required to run Hadoop. If you have not installed Java JDK please install it.

You can install JDK 8 from the following page:

Once you complete the installation, please run the following command in PowerShell or Git Bash to verify:

$ java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)

If you get an error such as 'cannot find java command or executable', don't worry: we will resolve it in the following step.

Step 5 - Configure environment variables

Now that we've downloaded and unpacked all the artefacts, we need to configure two important environment variables.

Configure JAVA\_HOME environment variable

As mentioned earlier, Hadoop requires Java, so we need to configure the JAVA_HOME environment variable (it is not mandatory, but I recommend it).

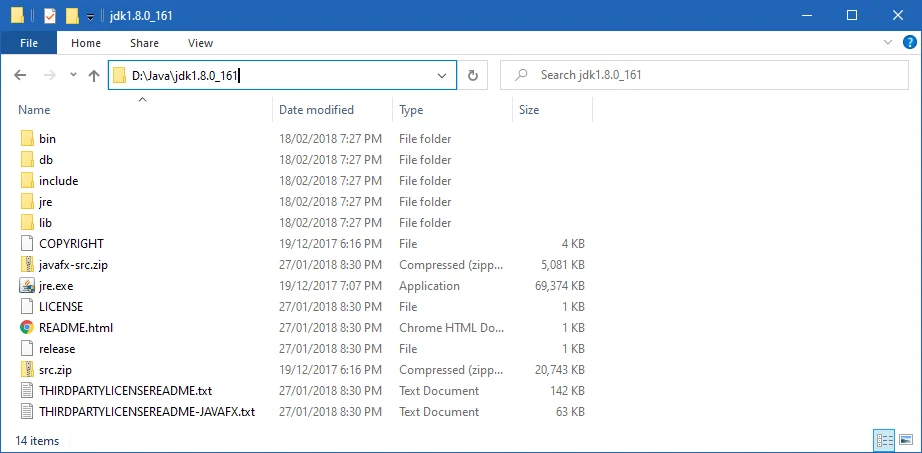

First, we need to find out the location of the JDK. On my system, the path is: D:\Java\jdk1.8.0_161.

Your location may be different, depending on where you installed your JDK.

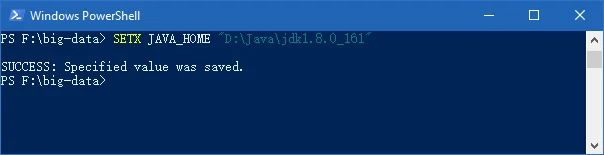

And then run the following command in the previous PowerShell window:

SETX JAVA_HOME "D:\Java\jdk1.8.0_161"

Remember to quote the path especially if you have spaces in your JDK path.

info You can set the environment variable at system level by adding the /M option. If you don't have permission to change system variables, set it at user level as shown above.

The output looks like the following:

Configure HADOOP\_HOME environment variable

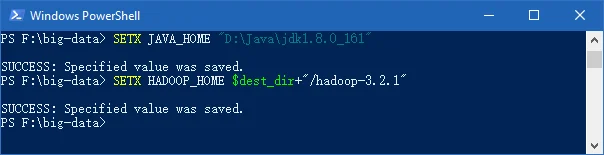

Similarly we need to create a new environment variable for HADOOP_HOME using the following command. The path should be your extracted Hadoop folder. For my environment it is: F:\big-data\hadoop-3.2.1.

If you used PowerShell to download and if the window is still open, you can simply run the following command:

SETX HADOOP_HOME "$dest_dir\hadoop-3.2.1"

The output looks like the following screenshot:

Alternatively, you can specify the full path:

SETX HADOOP_HOME "F:\big-data\hadoop-3.2.1"

Now you can also verify the two environment variables in the system:

Configure PATH environment variable

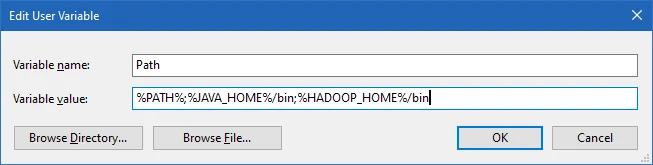

Once we finish setting up the above two environment variables, we need to add the bin folders to the PATH environment variable.

If a PATH environment variable already exists in your system, you can also manually add the following two paths to it:

- %JAVA_HOME%/bin

- %HADOOP_HOME%/bin

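Alternatively, you can run the following command to add them. Run it in a new PowerShell window: SETX only affects sessions started afterwards, so $env:JAVA_HOME and $env:HADOOP_HOME are not yet visible in the window where you set them. Also note that SETX truncates values longer than 1024 characters, so check the length of your current PATH first: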

setx PATH "$env:PATH;$env:JAVA_HOME/bin;$env:HADOOP_HOME/bin"

If you don't have other user variables set up in the system, you can also directly add a Path environment variable that references the others to keep it short:

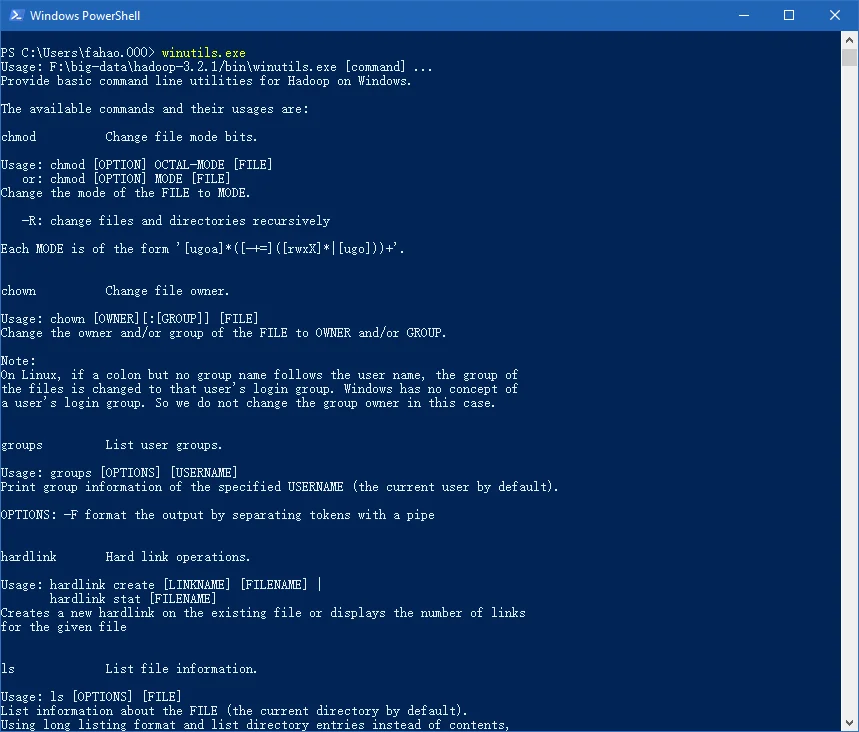

Close the PowerShell window, open a new one, and type winutils.exe to verify that the above steps completed successfully:

You should also be able to run the following command:

hadoop -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)
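If either command is not found, the cause is usually one of the variables set in this step. The following Git Bash sketch is one way to narrow it down. check_hadoop_env is a hypothetical helper name, the script assumes Git Bash can test Windows-style paths such as D:\Java\jdk1.8.0_161 directly, and the warning about spaces reflects a commonly reported problem with Hadoop's .cmd scripts rather than something covered in this guide.

```shell
#!/usr/bin/env bash
# check_hadoop_env   (hypothetical helper)
# Reports problems with JAVA_HOME and HADOOP_HOME on stdout.
# Exit status is 0 when no problem was found, 1 otherwise.
check_hadoop_env() {
  local problems=0
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set"; problems=1
  elif [ ! -f "$JAVA_HOME/bin/java.exe" ] && [ ! -f "$JAVA_HOME/bin/java" ]; then
    echo "no java executable under $JAVA_HOME/bin"; problems=1
  fi
  # Assumption: paths with spaces are often reported to break Hadoop's
  # .cmd scripts, so flag them without failing the check.
  case "${JAVA_HOME:-}" in
    *" "*) echo "warning: JAVA_HOME contains a space" ;;
  esac
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set"; problems=1
  elif [ ! -f "$HADOOP_HOME/bin/winutils.exe" ]; then
    echo "winutils.exe not found under $HADOOP_HOME/bin"; problems=1
  fi
  return "$problems"
}
```

Open a new Git Bash window after running SETX so that the variables are visible, then call check_hadoop_env; no output means both variables point at usable folders.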

Step 6 - Configure Hadoop

Now we are ready for the most important part: the Hadoop configuration, which covers Core, YARN, MapReduce and HDFS.

Configure core site

Edit file core-site.xml in the %HADOOP_HOME%\etc\hadoop folder. For my environment, the actual path is F:\big-data\hadoop-3.2.1\etc\hadoop.

Replace the configuration element with the following (fs.default.name is the legacy name of fs.defaultFS; Hadoop 3 still accepts it but reports it as deprecated):

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://0.0.0.0:19000</value>
  </property>
</configuration>

Configure HDFS

Edit file hdfs-site.xml in the %HADOOP_HOME%\etc\hadoop folder.

Before editing, please create two folders in your system: one for the namenode directory and another for the data directory. On my system, I created the following two subfolders:

- F:\big-data\data\dfs\namespace_logs

- F:\big-data\data\dfs\data
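The two folders can be created by hand in File Explorer. If you prefer the command line, here is a small Git Bash sketch: make_dfs_dirs is a hypothetical helper name, and the folder names are simply the ones I use above.

```shell
#!/usr/bin/env bash
# make_dfs_dirs BASE_DIR   (hypothetical helper)
# Creates the namenode and datanode folders under BASE_DIR/data/dfs and
# prints the matching file:/// URIs for hdfs-site.xml.
make_dfs_dirs() {
  local base="${1%/}" dir
  for dir in namespace_logs data; do
    mkdir -p "$base/data/dfs/$dir" || return 1
    # Drop a leading slash so POSIX paths also yield exactly three slashes.
    echo "file:///${base#/}/data/dfs/$dir"
  done
}
```

Calling make_dfs_dirs F:/big-data creates both folders and prints the two URIs, which you can paste into the value elements of the configuration.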

Replace the configuration element with the following (remember to replace the paths accordingly):

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///F:/big-data/data/dfs/namespace_logs</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///F:/big-data/data/dfs/data</value>
  </property>
</configuration>

In Hadoop 3, the property names are slightly different from previous versions. Refer to the following official documentation to learn more about the configuration properties:

info DFS replication is set to 1 because we are configuring a single node. The default value is 3.

info The directory configurations are not mandatory; by default, Hadoop uses its temporary folder. For this tutorial, I recommend customising the values.

Configure MapReduce and YARN site

Edit file mapred-site.xml in %HADOOP_HOME%\etc\hadoop folder.

Replace configuration element with the following:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>%HADOOP_HOME%/share/hadoop/mapreduce/*,%HADOOP_HOME%/share/hadoop/mapreduce/lib/*,%HADOOP_HOME%/share/hadoop/common/*,%HADOOP_HOME%/share/hadoop/common/lib/*,%HADOOP_HOME%/share/hadoop/yarn/*,%HADOOP_HOME%/share/hadoop/yarn/lib/*,%HADOOP_HOME%/share/hadoop/hdfs/*,%HADOOP_HOME%/share/hadoop/hdfs/lib/*</value>
  </property>
</configuration>

Edit file yarn-site.xml in %HADOOP_HOME%\etc\hadoop folder.

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>

Step 7 - Initialise HDFS & bug fix

Run the following command in Command Prompt:

hdfs namenode -format

With the stock 3.2.1 release on Windows, this command fails with the following error, which we need to fix:

2020-01-18 13:36:03,021 ERROR namenode.NameNode: Failed to start namenode.

java.lang.UnsupportedOperationException

at java.nio.file.Files.setPosixFilePermissions(Files.java:2044)

at org.apache.hadoop.hdfs.server.common.Storage$StorageDirectory.clearDirectory(Storage.java:452)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.format(NNStorage.java:591)

at org.apache.hadoop.hdfs.server.namenode.NNStorage.format(NNStorage.java:613)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:188)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:1206)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1649)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1759)

2020-01-18 13:36:03,025 INFO util.ExitUtil: Exiting with status 1: java.lang.UnsupportedOperationException

Refer to the following subsection (About 3.2.1 HDFS bug on Windows) for details on fixing this problem.

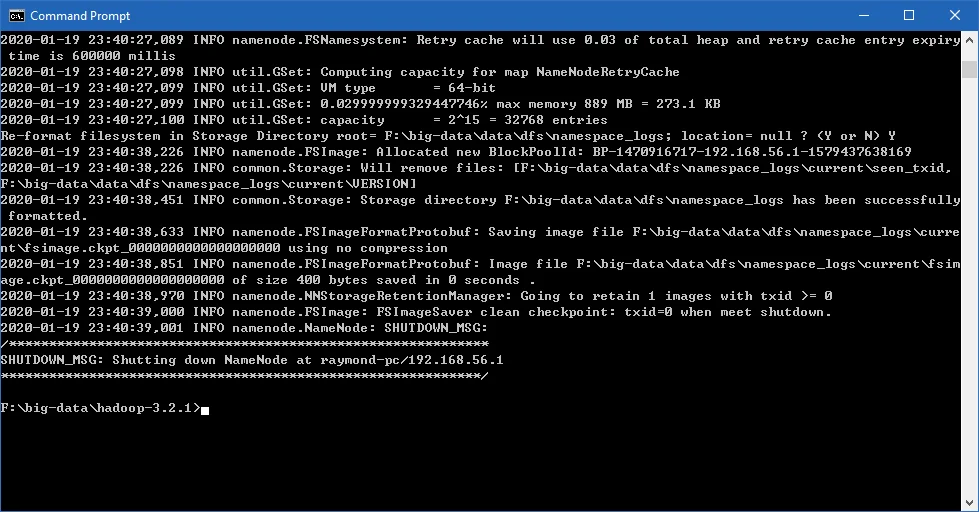

Once this is fixed, the format command (hdfs namenode -format) will show something like the following:

About 3.2.1 HDFS bug on Windows

This is a bug with 3.2.1 release:

https://issues.apache.org/jira/browse/HDFS-14890

It will be resolved in version 3.2.2 and 3.3.0.

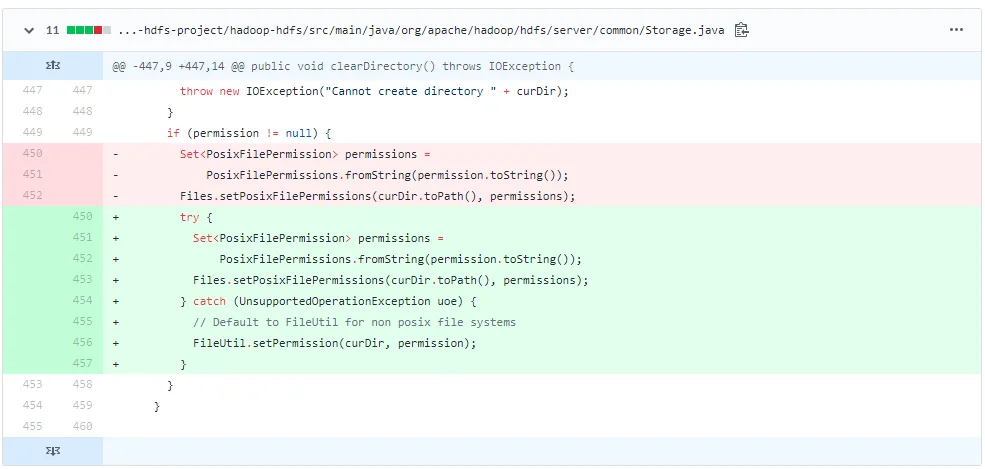

We can apply a temporary fix as the following change diff shows:

I've done the following to get this temporarily fixed before 3.2.2/3.3.0 is released:

- Check out the source code of the Hadoop project from GitHub.

- Check out branch 3.2.1.

- Open the pom file of the hadoop-hdfs project.

- Update class StorageDirectory as described in the above code diff screenshot:

if (permission != null) {
  try {
    Set<PosixFilePermission> permissions =
        PosixFilePermissions.fromString(permission.toString());
    Files.setPosixFilePermissions(curDir.toPath(), permissions);
  } catch (UnsupportedOperationException uoe) {
    // Default to FileUtil for non posix file systems
    FileUtil.setPermission(curDir, permission);
  }
}

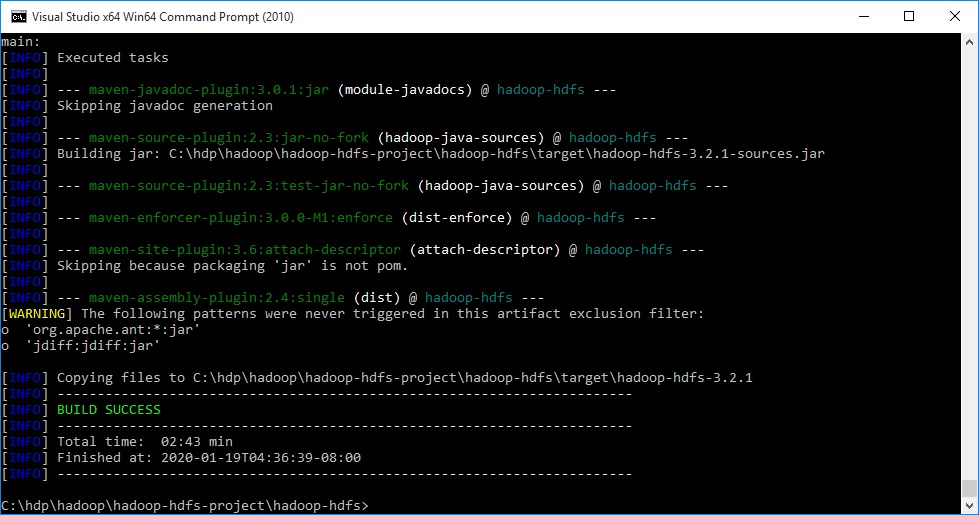

- Use Maven to rebuild this project as the following screenshot shows:

Fix bug HDFS-14890

I've uploaded the rebuilt JAR file to the following location. Please download it from this link:

https://github.com/FahaoTang/big-data/blob/master/hadoop-hdfs-3.2.1.jar

Then rename the existing file hadoop-hdfs-3.2.1.jar to hadoop-hdfs-3.2.1.bk in folder %HADOOP_HOME%\share\hadoop\hdfs.

Copy the downloaded hadoop-hdfs-3.2.1.jar to folder %HADOOP_HOME%\share\hadoop\hdfs.
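The rename and the copy can also be done from Git Bash. The sketch below is only one possible way to script them: swap_hdfs_jar is a hypothetical helper name, and refusing to run when a backup already exists is my own precaution so that the original jar is never overwritten by a second run.

```shell
#!/usr/bin/env bash
# swap_hdfs_jar HADOOP_DIR PATCHED_JAR   (hypothetical helper)
# Keeps the shipped jar as hadoop-hdfs-3.2.1.bk and puts PATCHED_JAR in its place.
swap_hdfs_jar() {
  local hdfs_dir="$1/share/hadoop/hdfs" patched="$2"
  local jar="$hdfs_dir/hadoop-hdfs-3.2.1.jar"
  local backup="$hdfs_dir/hadoop-hdfs-3.2.1.bk"
  [ -f "$patched" ] || { echo "patched jar not found: $patched"; return 1; }
  [ -f "$jar" ] || { echo "original jar not found: $jar"; return 1; }
  # Stop if a backup is already there, so the original jar is never lost.
  [ ! -e "$backup" ] || { echo "backup already exists: $backup"; return 1; }
  mv "$jar" "$backup" && cp "$patched" "$jar"
}
```

For example, swap_hdfs_jar F:/big-data/hadoop-3.2.1 ~/Downloads/hadoop-hdfs-3.2.1.jar, where the second argument is wherever you saved the downloaded file.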

warning This is just a temporary fix before the official improvement is published. I publish it purely for us to complete the whole installation process and there is no guarantee this temporary fix won't cause any new issue.

Refer to this article for more details about how to build a native Windows Hadoop: Compile and Build Hadoop 3.2.1 on Windows 10 Guide.

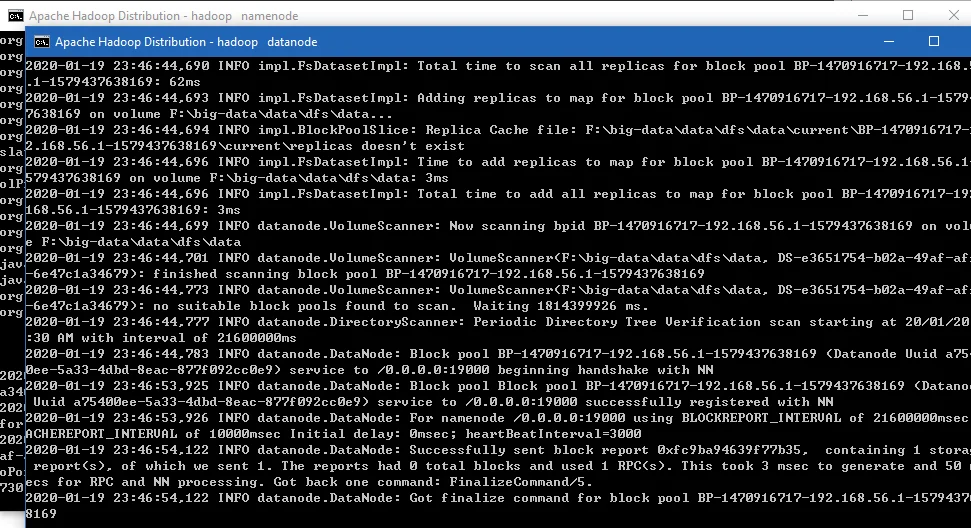

Step 8 - Start HDFS daemons

Run the following command to start HDFS daemons in Command Prompt:

%HADOOP_HOME%\sbin\start-dfs.cmd

Two Command Prompt windows will open: one for the datanode and another for the namenode, as the following screenshot shows:

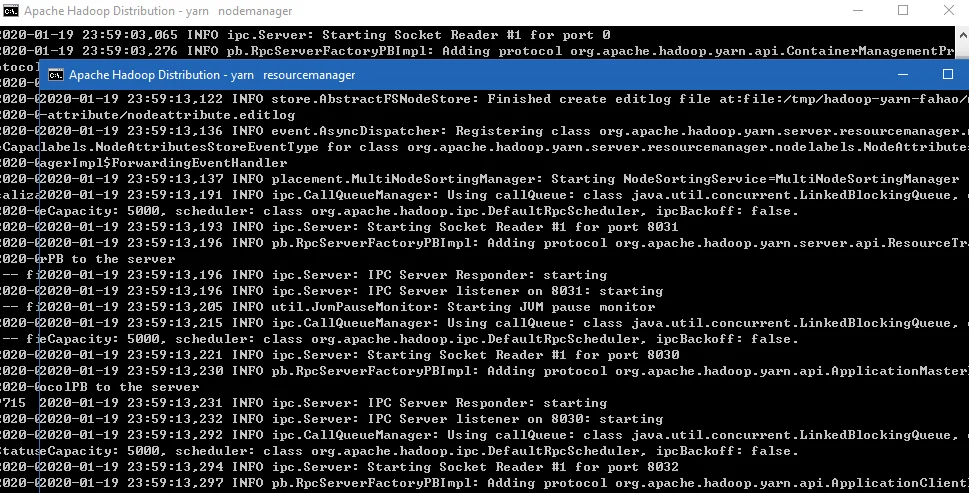

Step 9 - Start YARN daemons

warning You may encounter permission issues if you start the YARN daemons as a normal user. To avoid them, open a Command Prompt window using Run as administrator. Alternatively, you can follow this comment on this page, which uses a local Windows account and doesn't require Administrator permission: /project/hadoop/article/latest-hadoop-321-installation-on-windows-10-step-by-step-guide#comment314

Run the following command in an elevated Command Prompt window (Run as administrator) to start YARN daemons:

%HADOOP_HOME%\sbin\start-yarn.cmd

Similarly, two Command Prompt windows will open: one for the resource manager and another for the node manager, as the following screenshot shows:

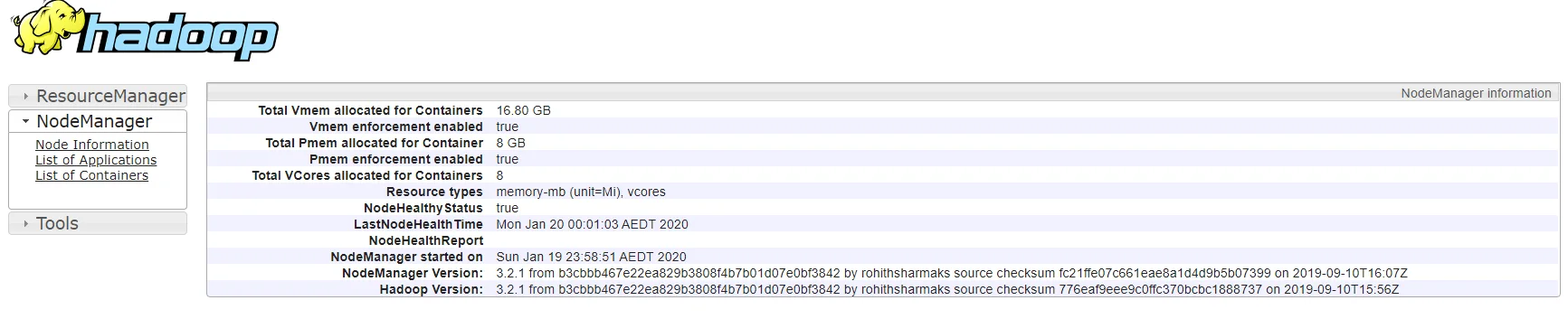

Step 10 - Useful Web portals exploration

The daemons also host websites that provide useful information about the cluster.
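Before opening the pages in a browser, you can check from Git Bash whether the daemons are answering at all. This is a rough sketch using curl. probe_url and report_web_uis are hypothetical helper names. Port 9870 is the namenode port used in this guide, while 9864 (datanode) and 8088 (resource manager) are the Hadoop 3 default ports and are an assumption about your configuration.

```shell
#!/usr/bin/env bash
# probe_url URL   (hypothetical helper)
# Succeeds when an HTTP server answers at URL within 5 seconds
# with a status below 400 (redirects are followed).
probe_url() {
  curl --silent --fail --location --max-time 5 --output /dev/null "$1"
}

# report_web_uis   (hypothetical helper)
# Prints one "up" or "down" line per web UI.
# Assumption: 9864 and 8088 are the unmodified Hadoop 3 default ports.
report_web_uis() {
  local url
  for url in http://localhost:9870 http://localhost:9864 http://localhost:8088; do
    if probe_url "$url"; then
      echo "up    $url"
    else
      echo "down  $url"
    fi
  done
}
```

Run report_web_uis once the HDFS and YARN daemons have started; a "down" line usually means the corresponding daemon window has closed or is still starting.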

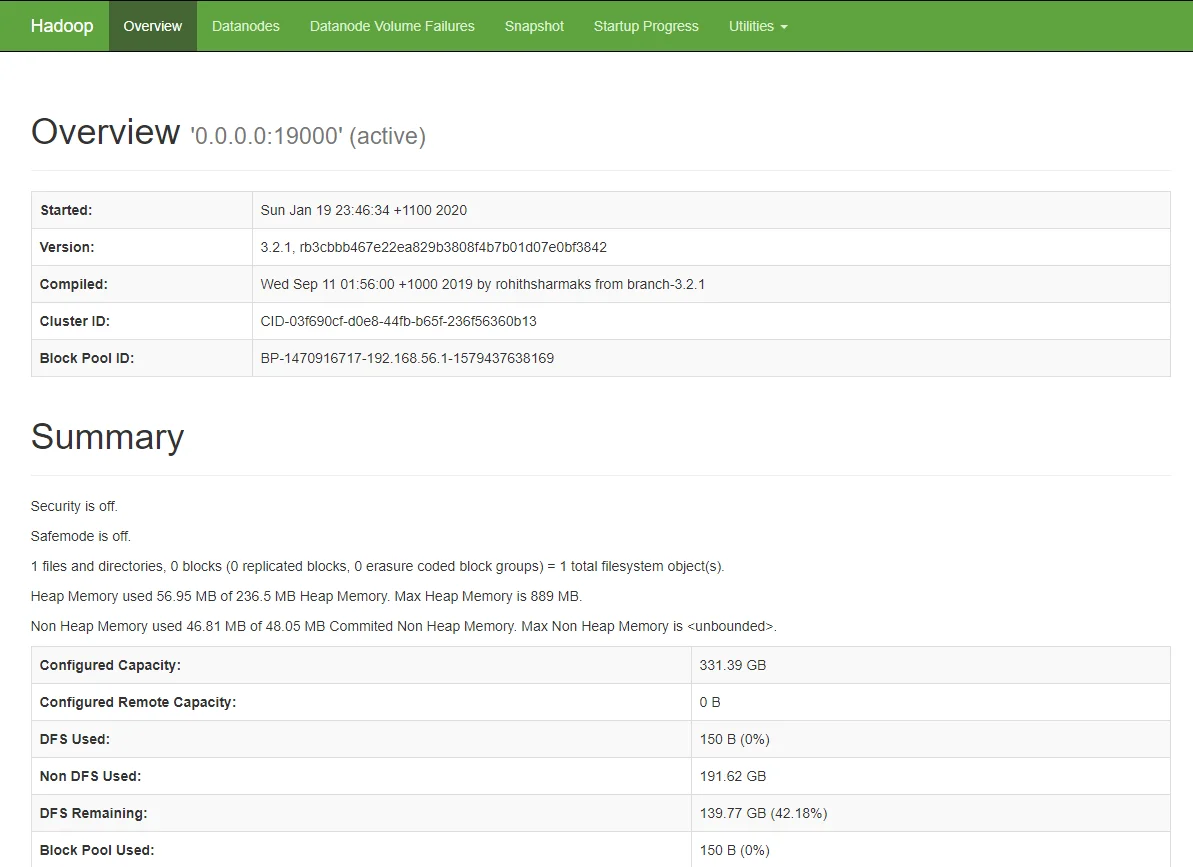

HDFS Namenode information UI

http://localhost:9870/dfshealth.html#tab-overview

The website looks like the following screenshot:

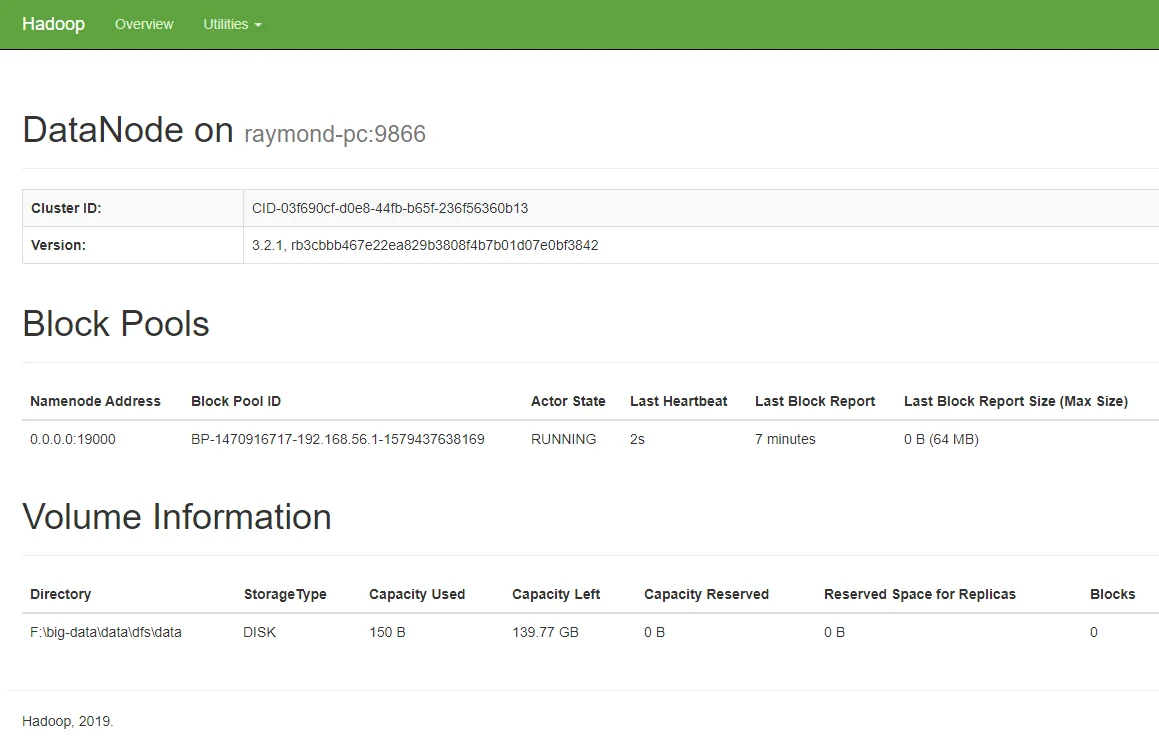

HDFS Datanode information UI

The website looks like the following screenshot:

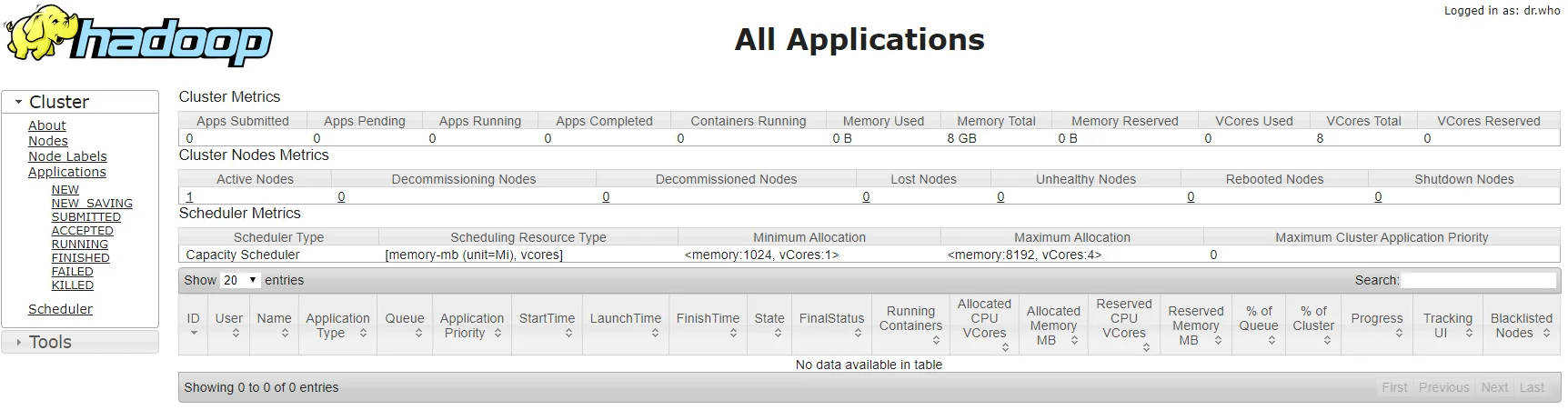

YARN resource manager UI

The website looks like the following screenshot:

Through Resource Manager, you can also navigate to any Node Manager:

Step 11 - Shutdown YARN & HDFS daemons

You don't need to keep the services running all the time. You can stop them by running the following commands one by one:

%HADOOP_HOME%\sbin\stop-yarn.cmd

%HADOOP_HOME%\sbin\stop-dfs.cmd

check Congratulations! You've successfully completed the installation of Hadoop 3.2.1 on Windows 10.

Let me know if you encounter any issues. Enjoy your new Hadoop installation on Windows 10.