The Jupyter notebook service can run on most operating systems. On systems where the Hadoop client is installed, you can also easily ingest data into HDFS (Hadoop Distributed File System) from a notebook using the HDFS command-line interface (CLI).

*The following examples use the Python 3 kernel.

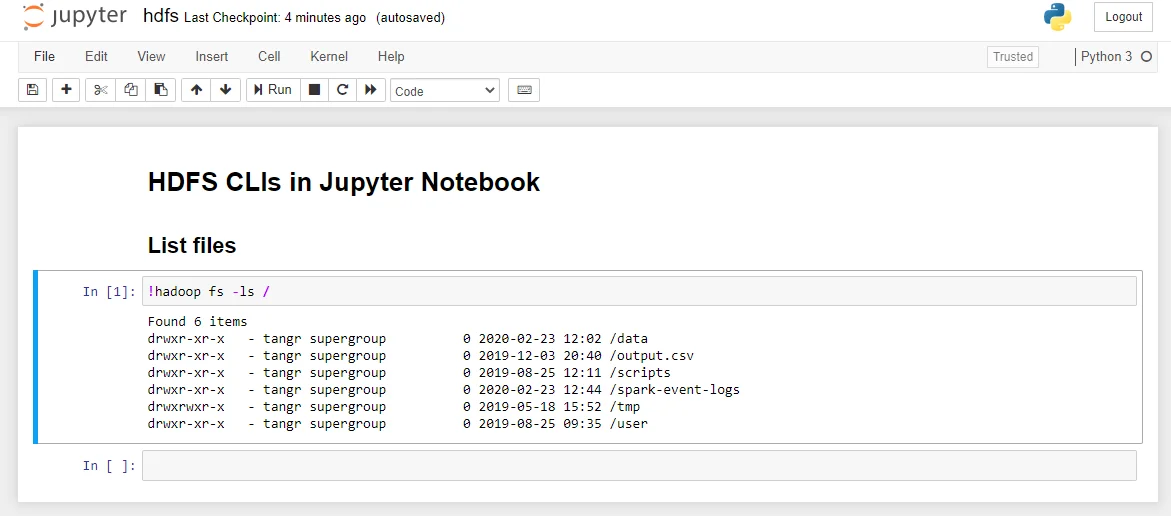

List files in HDFS

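The following command lists the contents of the HDFS root directory (/). The leading ! tells Jupyter to run the line as a shell command instead of as Python code.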

!hadoop fs -ls /
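When the listing is needed as data rather than as printed text, the same command can be run from plain Python with subprocess and its output parsed. The sketch below assumes the usual eight-column layout of hadoop fs -ls output (permissions, replication, owner, group, size, date, time, path); the helper names parse_ls_output and list_hdfs and the sample listing are hypothetical, for illustration only.

```python
import subprocess
from typing import List


def parse_ls_output(text: str) -> List[dict]:
    """Parse `hadoop fs -ls` output into one dict per file or directory.

    Assumes the usual 8-column layout:
    permissions, replication, owner, group, size, date, time, path.
    """
    entries = []
    for line in text.splitlines():
        # Skip blank lines and the "Found N items" summary line.
        if not line.strip() or line.startswith("Found "):
            continue
        # maxsplit=7 keeps a path that contains spaces in a single field.
        parts = line.rstrip().split(None, 7)
        if len(parts) != 8 or not parts[4].isdigit():
            continue  # not a listing line (e.g. a log message); ignore it
        perms, _repl, owner, _group, size, date, time, path = parts
        entries.append({
            "path": path,
            "is_dir": perms.startswith("d"),
            "owner": owner,
            "size": int(size),
            "modified": f"{date} {time}",
        })
    return entries


def list_hdfs(path: str = "/") -> List[dict]:
    """Run `hadoop fs -ls <path>` (needs a Hadoop client on PATH) and parse it."""
    result = subprocess.run(
        ["hadoop", "fs", "-ls", path],
        capture_output=True, text=True, check=True,
    )
    return parse_ls_output(result.stdout)


# Hypothetical sample output, so the parser can be tried without a cluster.
sample = """Found 2 items
drwxr-xr-x   - hdfs  supergroup          0 2020-01-01 10:00 /tmp
-rw-r--r--   1 tangr supergroup      12345 2020-01-02 11:30 /csharp-example.ipynb"""

for entry in parse_ls_output(sample):
    print(entry["path"], entry["is_dir"], entry["size"])
```

Inside a notebook, output captured with IPython's out = !hadoop fs -ls / can be fed to the same parser as parse_ls_output("\n".join(out)).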

Output

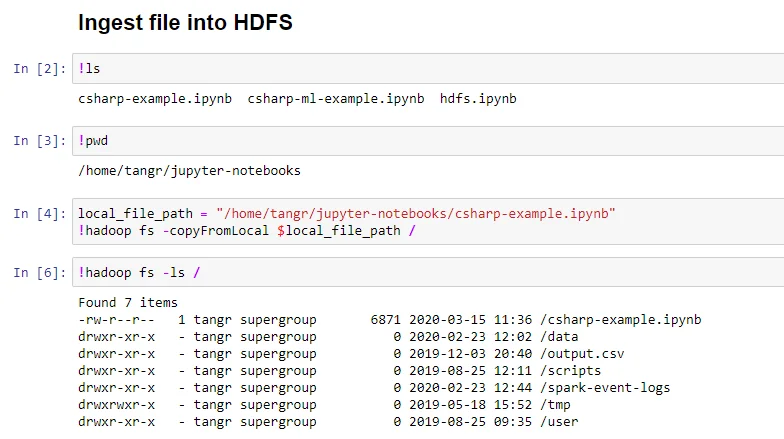

Ingest a file into HDFS

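The copyFromLocal option of hadoop fs copies a file from the local file system into HDFS. You can also use Python variables in the command: prefix the variable name with $ and IPython substitutes its value before running the command.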

local_file_path = "/home/tangr/jupyter-notebooks/csharp-example.ipynb"

!hadoop fs -copyFromLocal $local_file_path /
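Because IPython inserts the value of $local_file_path into the command as plain text, a path containing spaces would be split into several arguments by the shell. One way around this is sketched below, assuming Python 3.8+ (for shlex.join): pass the arguments to subprocess.run as a list, or quote them with shlex before building a ! command. The helper names copy_from_local_cmd and copy_from_local are hypothetical.

```python
import shlex
import subprocess
from typing import List


def copy_from_local_cmd(local_path: str, hdfs_dir: str = "/") -> List[str]:
    """Build the argument list for `hadoop fs -copyFromLocal <local> <hdfs_dir>`."""
    return ["hadoop", "fs", "-copyFromLocal", local_path, hdfs_dir]


def copy_from_local(local_path: str, hdfs_dir: str = "/") -> None:
    """Copy a local file into HDFS (needs a Hadoop client on PATH).

    The arguments are passed as a list, so no shell is involved and paths
    with spaces need no quoting. Raises CalledProcessError on failure.
    """
    subprocess.run(copy_from_local_cmd(local_path, hdfs_dir), check=True)


local_file_path = "/home/tangr/jupyter-notebooks/csharp-example.ipynb"

# Shell-safe string form, e.g. for reuse in a `!` command in a notebook.
print(shlex.join(copy_from_local_cmd(local_file_path)))
```

Calling copy_from_local(local_file_path) performs the same copy as the ! command above, but raises an exception in the notebook if the copy fails instead of only printing an error.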

Output

As shown in the following screenshot, the local file csharp-example.ipynb was ingested into the HDFS root folder as **/csharp-example.ipynb**.

Other HDFS commands

Other HDFS commands can be run from a Jupyter notebook in the same way. For example, you can download an HDFS file to local storage with hadoop fs -copyToLocal and then read or parse it with native Python functions.
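A minimal sketch of that download-then-read pattern is below. It shells out to hadoop fs -copyToLocal from Python and then, because an .ipynb file is plain JSON, loads the copy with the standard json module and counts its cells by type. The helper names copy_to_local and count_notebook_cells are hypothetical, and the download step assumes a Hadoop client on PATH.

```python
import json
import os
import subprocess


def copy_to_local(hdfs_path: str, local_dir: str) -> str:
    """Download an HDFS file into local_dir with `hadoop fs -copyToLocal`.

    Needs a Hadoop client on PATH. Returns the path of the downloaded copy.
    """
    subprocess.run(["hadoop", "fs", "-copyToLocal", hdfs_path, local_dir], check=True)
    return os.path.join(local_dir, os.path.basename(hdfs_path))


def count_notebook_cells(local_path: str) -> dict:
    """Read a .ipynb file (plain JSON) and count its cells by cell_type."""
    with open(local_path, encoding="utf-8") as f:
        notebook = json.load(f)
    counts = {}
    for cell in notebook.get("cells", []):
        kind = cell.get("cell_type", "unknown")
        counts[kind] = counts.get(kind, 0) + 1
    return counts


# Example usage (requires a Hadoop client and the file ingested earlier):
# local_copy = copy_to_local("/csharp-example.ipynb", "/tmp")
# print(count_notebook_cells(local_copy))
```

Downloading first keeps the parsing step independent of Hadoop, so the same reading code works on any local copy of the file.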