In my article Connect to Teradata database through Python, I demonstrated about how to use Teradata python package or Teradata ODBC driver to connect to Teradata. In this article, I’m going to show you how to connect to Teradata through JDBC drivers so that you can load data directly into Spark data frames using Scala as programming language.

infoThe following code snippet runs in spark-shell. You can also create a Scala project and then compile it to JAR file and then use spark-submit to run the application. The code can also run in notebooks like Zeppelin.

Prerequisites

Teradata JDBC driver is required. Please download it from Teradata official website.

Start session with Teradata

First, start Spark session using Spark Shell and pass in your Teradata JDBC driver through --jars argument:

spark-shell --jars F:\lib\terajdbc4.jar -c spark.ui.port=11111

Teradata JDBC driver locates at F:\lib\terajdbc4.jar in my system.

Load data from Teradata

Once the session is started, follow these steps to load data from Teradata.

- Import types

import org.apache.spark.sql._

import org.apache.spark.sql.types._

- Create DataFrame

val jdbcDF = (spark.read.format("jdbc")

.option("url", "jdbc:teradata://192.168.119.128/Database=dbc,LOGMECH=td2")

.option("dbtable", "(sel * from TestDb.test_table) as src")

.option("user", "dbc")

.option("password", "dbc")

.option("driver", "com.teradata.jdbc.TeraDriver")

.load()

)

- Show the result

scala> jdbcDF.show()

+---+--------+------+

| id|category|amount|

+---+--------+------+

| 5| B| 101|

| 5| C| 1001|

| 3| A| 12|

| 1| A| 10|

| 6| B| 102|

| 6| C| 1002|

| 4| B| 100|

| 4| C| 1000|

| 2| A| 11|

+---+--------+------+

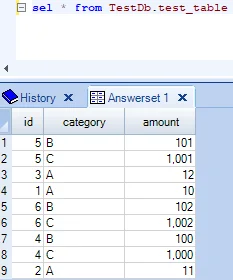

The result is consistent with directly querying data via SQL Assistant:

Some details about the code snippets

In the above example, JDBC connection string is configured to use TD2as login mechanism. You can also change it to LDAPso that you can use credential in your active directory to logon to the database.

Depends on the version of your Spark, you may be able to directly use query parameter to pass in your SQL query instead of dbtable. query and dbtable parameters cannot be specified at the same time. In lower version of Spark, you can pass in your SQL as a subquery as I did in the above examples.

Run the code in a cluster

If you are going to run the code in a cluster or workflow tools like Oozie, you can copy these JAR files into HDFS and then pass in the library path or jars arguments as HDFS file paths. In this way, all the workloads can load Teradata JDBC drivers successfully.

SQL Server, Oracle, MySQL…

You can also use similar approach to connect to your SQL Server, Oracle, MySQL or any other JDBC supported databases.

Have fun!