Spark is written in Scala, which runs on the JVM (Java Virtual Machine), so it can also run on macOS. This article provides a step-by-step guide to installing Apache Spark 3.0.1, the latest version at the time of writing, on macOS. The version I'm using is macOS Big Sur 11.1.

Prerequisites

Hadoop 3.3.0

This article uses the Spark package without pre-built Hadoop, so a Hadoop environment must be set up first.

If you choose to download the Spark package with pre-built Hadoop, the Hadoop 3.3.0 configuration is not required.

Follow the article Install Hadoop 3.3.0 on macOS to configure Hadoop 3.3.0 on macOS.

JDK 11

Spark 3.0.1 can run on Java 8 or 11. This tutorial uses Java 11. Follow the article Install JDK 11 on macOS to install Java 11.

Download binary package

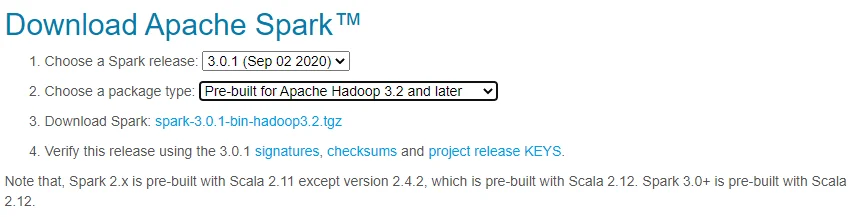

Visit the Downloads page on the Spark website to find the download URL.

For me, the closest location is: https://downloads.apache.org/spark/spark-3.0.1/spark-3.0.1-bin-hadoop3.2.tgz.

Download the binary package to a folder. In my system, the file is saved to this folder: ~/Downloads/spark-3.0.1-bin-hadoop3.2.tgz.

Unpack the binary package

Unpack the package using the following command:

mkdir ~/hadoop/spark-3.0.1
tar -xvzf ~/Downloads/spark-3.0.1-bin-hadoop3.2.tgz -C ~/hadoop/spark-3.0.1 --strip 1

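The Spark binaries are extracted to the folder ~/hadoop/spark-3.0.1. The --strip 1 option drops the archive's top-level folder, so bin, conf and the other subfolders sit directly under that path.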
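To make the effect of --strip 1 more concrete, here is a minimal Python sketch. It is not part of the installation; it only mimics how the leading path component is dropped. The helper name strip_components is made up for illustration, and the sketch assumes the archive's top-level folder is named spark-3.0.1-bin-hadoop3.2.

```python
from pathlib import PurePosixPath


def strip_components(member_name, count=1):
    """Drop the first `count` path components of an archive member name.

    Returns None when nothing is left, which is what happens to the
    top-level folder entry itself.
    """
    parts = PurePosixPath(member_name).parts[count:]
    return str(PurePosixPath(*parts)) if parts else None


if __name__ == "__main__":
    # Assumed member names; the real archive contains many more entries.
    for name in ("spark-3.0.1-bin-hadoop3.2",
                 "spark-3.0.1-bin-hadoop3.2/bin/spark-shell"):
        print(name, "->", strip_components(name))
```

With one component stripped, bin/spark-shell ends up directly under the target folder passed to -C.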

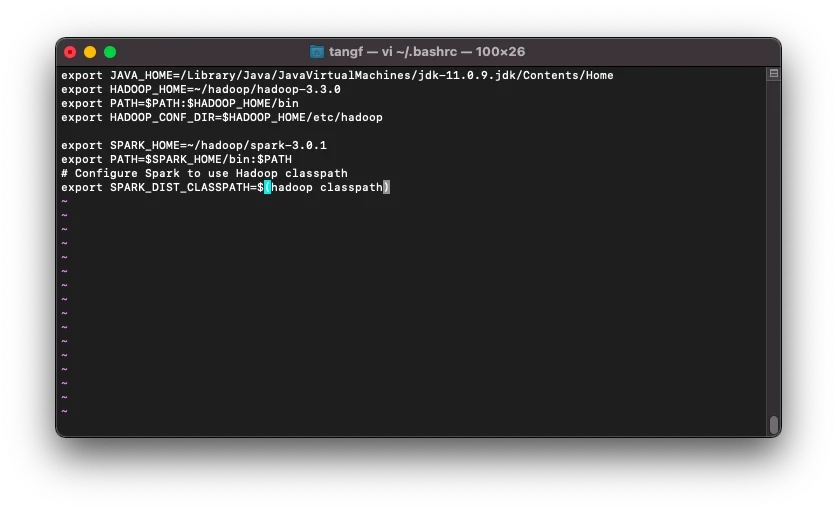

Setup environment variables

Set up the SPARK_HOME environment variable and add its bin subfolder to the PATH variable. We also need to set the Spark environment variable SPARK_DIST_CLASSPATH so that Spark uses the Hadoop Java classpath.

Run the following command to edit the .bashrc file (if you use zsh, the default shell since macOS Catalina, edit ~/.zshrc instead):

vi ~/.bashrc

Add the following lines to the end of the file:

export SPARK_HOME=~/hadoop/spark-3.0.1
export PATH=$SPARK_HOME/bin:$PATH
# Configure Spark to use Hadoop classpath
export SPARK_DIST_CLASSPATH=$(hadoop classpath)

Source the modified file to make the changes take effect:

source ~/.bashrc

If you also have Hive installed, change SPARK_DIST_CLASSPATH to:

export SPARK_DIST_CLASSPATH=$(hadoop classpath):$HIVE_HOME/lib/*
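If you want to double-check the variables after sourcing the file, a small script like the following can help. This is only a sketch under my own assumptions: the function name check_spark_env is hypothetical, and it checks nothing beyond the three variables configured above.

```python
import os


def check_spark_env(env=None):
    """Return a list of human-readable problems with the Spark variables."""
    env = os.environ if env is None else env
    problems = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
    else:
        # Compare normalized paths so a trailing slash does not matter.
        bin_dir = os.path.normpath(os.path.join(spark_home, "bin"))
        path_entries = [os.path.normpath(p)
                        for p in env.get("PATH", "").split(os.pathsep) if p]
        if bin_dir not in path_entries:
            problems.append(f"{bin_dir} is not on PATH")

    if not env.get("SPARK_DIST_CLASSPATH"):
        problems.append("SPARK_DIST_CLASSPATH is empty")

    return problems


if __name__ == "__main__":
    issues = check_spark_env()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Spark environment variables look fine.")
```

Run it in a new terminal window so that it sees the same environment that spark-shell will see.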

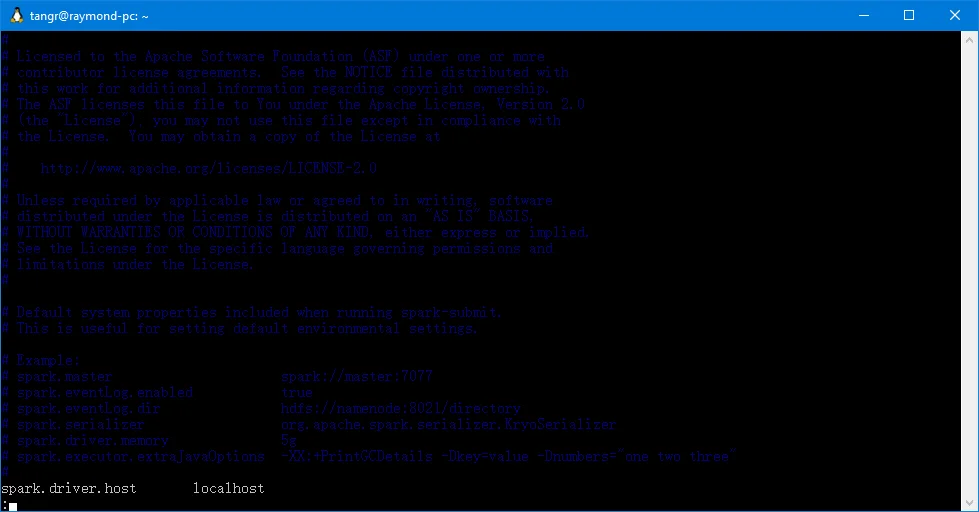

Setup Spark default configurations

Run the following command to create a Spark default config file:

cp $SPARK_HOME/conf/spark-defaults.conf.template $SPARK_HOME/conf/spark-defaults.conf

Edit the file to add some configurations using the following command:

vi $SPARK_HOME/conf/spark-defaults.conf

Make sure you add the following line:

spark.driver.host localhost

# Enable the following one if you have Hive installed.

# spark.sql.warehouse.dir /user/hive/warehouse

Spark supports many other configuration properties. Set them as necessary.

spark.eventLog.dir and spark.history.fs.logDirectory

These two configurations can point to the same location or to different ones. The first is the directory where a Spark application writes its event logs while it runs; the second is the directory from which the history server reads event logs. If you want applications to show up in the history server, point both at the same location.
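As a quick sanity check of these settings, the sketch below parses the text of a spark-defaults.conf file and compares the two directories. It is a simplified reader that assumes each property line is a key and a value separated by whitespace; the function names and the sample values (including file:///tmp/spark-events and spark.eventLog.enabled set to true) are only examples, not settings taken from this article.

```python
def parse_spark_defaults(text):
    """Parse spark-defaults.conf text into a dict (simplified).

    Blank lines and lines starting with '#' are skipped; every other line
    is split into a property name and a value on the first whitespace run.
    """
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        settings[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return settings


def same_event_log_dir(settings):
    """True when the writer and reader directories are set and identical."""
    written = settings.get("spark.eventLog.dir")
    read = settings.get("spark.history.fs.logDirectory")
    return written is not None and written == read


# Example content only; adjust the values to your own environment.
sample = """
spark.driver.host              localhost
spark.eventLog.enabled         true
spark.eventLog.dir             file:///tmp/spark-events
spark.history.fs.logDirectory  file:///tmp/spark-events
# spark.sql.warehouse.dir      /user/hive/warehouse
"""

if __name__ == "__main__":
    conf = parse_spark_defaults(sample)
    print("Same event log directory:", same_event_log_dir(conf))
```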

Now let's do some verifications to ensure it is working.

Run Spark interactive shell

Run the following command to start Spark shell:

spark-shell

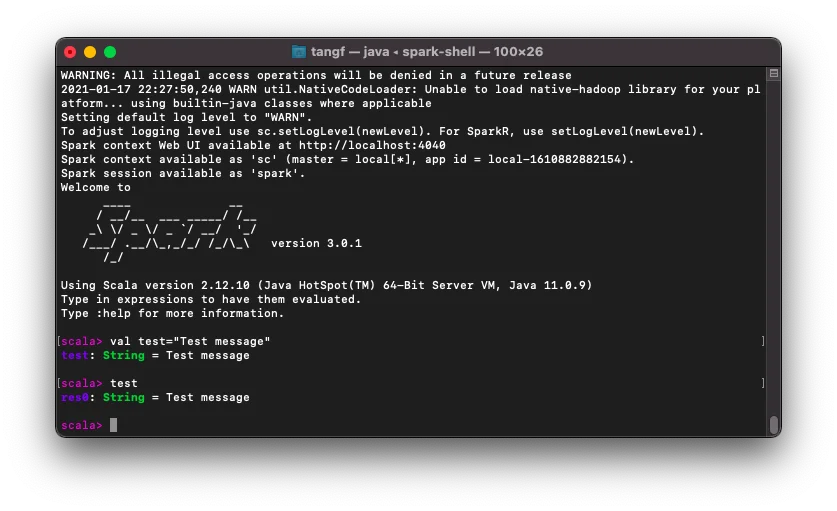

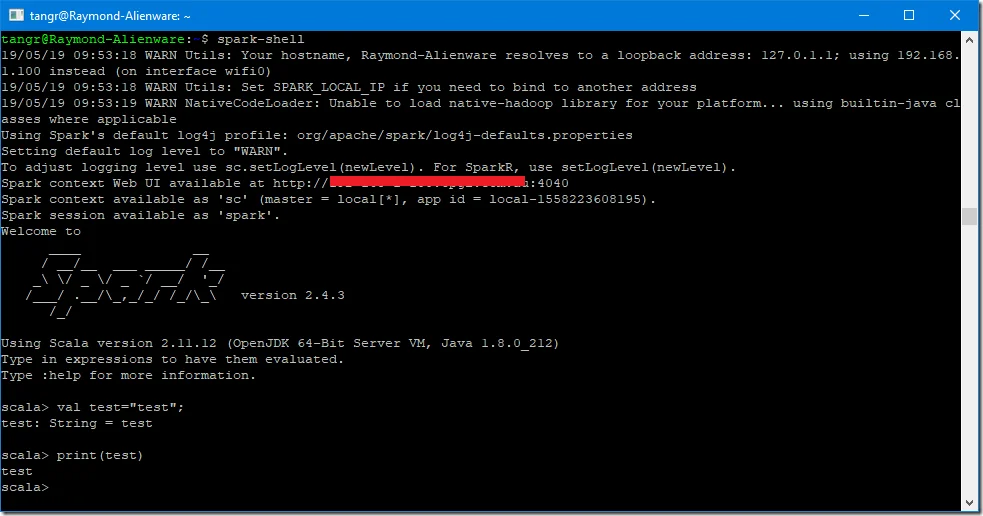

The interface looks like the following screenshot:

By default, the Spark master is set to local[*] in the shell, which runs Spark locally with as many worker threads as there are logical cores.

Run the :quit command to exit the Spark shell.

Run with built-in examples

Run Spark Pi example via the following command:

run-example SparkPi 10

The output looks like the following:

...

Pi is roughly 3.1413231413231415

...
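To give an idea of what the example computes, here is a plain Python sketch of the same Monte Carlo idea without Spark: pick random points in a square and count how many fall inside the inscribed circle. The function name estimate_pi is my own, and this is an illustration of the technique rather than the actual SparkPi source; in SparkPi the argument 10 controls how many partitions the sampling is split into.

```python
import random


def estimate_pi(samples, seed=None):
    """Estimate pi from the share of random points that land in a circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        # Random point in the square [-1, 1] x [-1, 1].
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    # Circle area / square area = pi / 4.
    return 4.0 * inside / samples


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(200_000)}")
```

Because the sampling is random, the digits after the first few decimals differ from run to run, which is also why your Spark output will not match the one shown above exactly.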

On this website, I’ve provided many Spark examples. You can practice by following those guides.

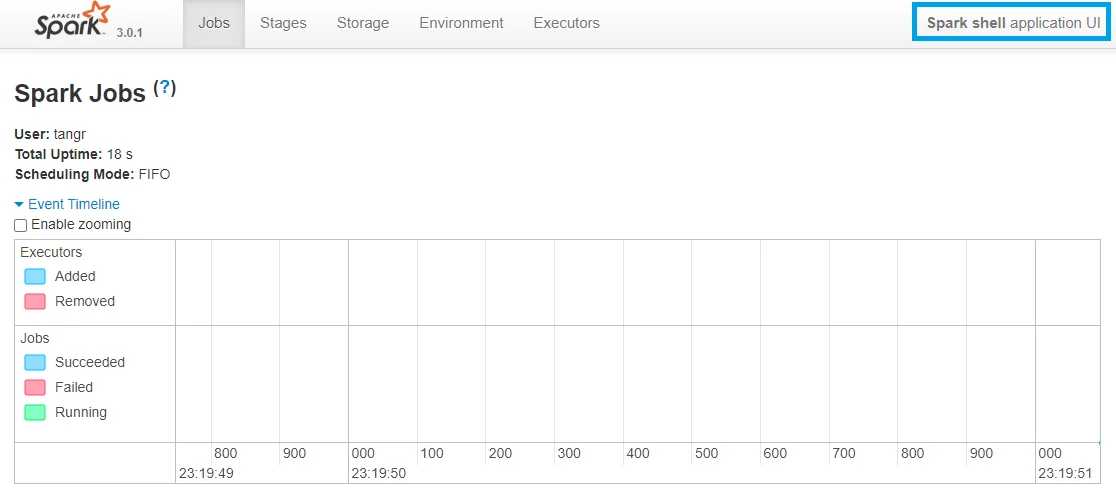

Spark context Web UI

When a Spark session is running, you can view its details through the web UI. As printed in the interactive session window, the Spark context Web UI is available at http://localhost:4040. The URL is based on the Spark default configurations. The port number can change if the default port is already in use. Refer to Fix - ERROR SparkUI: Failed to bind SparkUI for more details.
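If you are not sure which port the UI ended up on, you can probe the ports starting from 4040. The following sketch uses only the Python standard library; the function names are hypothetical, and the default of 16 attempts is my assumption, chosen to mirror the default number of port retries in Spark.

```python
import socket


def port_is_free(port, host="127.0.0.1"):
    """Return True if nothing accepts connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        # connect_ex returns 0 when the connection succeeds.
        return sock.connect_ex((host, port)) != 0


def first_free_port(start=4040, attempts=16):
    """Return the first port in the range with no listener, or None."""
    for port in range(start, start + attempts):
        if port_is_free(port):
            return port
    return None


if __name__ == "__main__":
    if port_is_free(4040):
        print("Port 4040 is free; no Spark UI seems to be running there.")
    else:
        print("Port 4040 is in use; the next free port is", first_free_port())
```

A port that is reported as in use may belong to a Spark session or to any other program, so treat the result as a hint only.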

The following is a screenshot of the UI:

Enable Hive support

If you’ve configured Hive on macOS, follow the steps below to enable Hive support in Spark.

Copy the Hadoop core-site.xml and hdfs-site.xml and Hive hive-site.xml configuration files into Spark configuration folder:

cp $HADOOP_HOME/etc/hadoop/core-site.xml $SPARK_HOME/conf/
cp $HADOOP_HOME/etc/hadoop/hdfs-site.xml $SPARK_HOME/conf/
cp $HIVE_HOME/conf/hive-site.xml $SPARK_HOME/conf/

And then you can run Spark with Hive support (enableHiveSupport function):

from pyspark.sql import SparkSession

appName = "PySpark Hive Example"
master = "local[*]"

spark = SparkSession.builder \
    .appName(appName) \
    .master(master) \
    .enableHiveSupport() \
    .getOrCreate()

# Read data using Spark
df = spark.sql("show databases")
df.show()

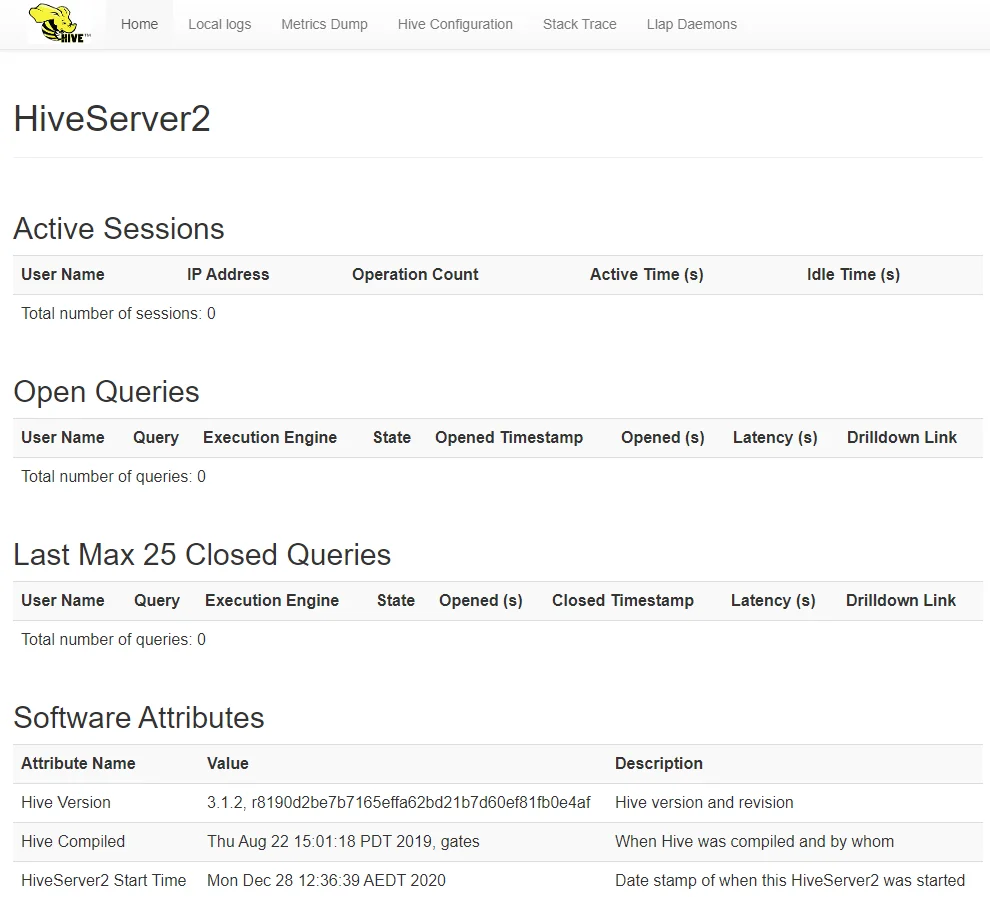

spark-sql CLI

Make sure the HiveServer2 service is running before starting the spark-sql CLI. You should be able to see the HiveServer2 web portal if the service is up.

You can also use the hive or beeline CLI directly to interact with Hive databases instead of the spark-sql CLI.

Spark history server

Run the following command to start Spark history server:

$SPARK_HOME/sbin/start-history-server.sh

Open the history server UI (by default: http://localhost:18080/) in a browser. You should be able to view all the jobs submitted.
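Besides the browser, the history server can also be queried over HTTP. The sketch below asks the REST endpoint /api/v1/applications for the list of recorded applications and returns None when the server cannot be reached. The function name list_applications is made up, and the base URL assumes the default port shown above.

```python
import json
import urllib.error
import urllib.request


def list_applications(base_url="http://localhost:18080", timeout=3.0):
    """Return the applications known to the history server, or None.

    None means the server could not be reached or did not answer with JSON.
    """
    url = f"{base_url.rstrip('/')}/api/v1/applications"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.load(response)
    except (urllib.error.URLError, OSError, ValueError):
        return None


if __name__ == "__main__":
    apps = list_applications()
    if apps is None:
        print("History server is not reachable.")
    else:
        for app in apps:
            print(app.get("id"), app.get("name"))
```

An empty list means the server is up but has not found any event logs in its log directory yet.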

Congratulations! You have successfully configured Spark on macOS. Have fun with Spark 3.0.1.