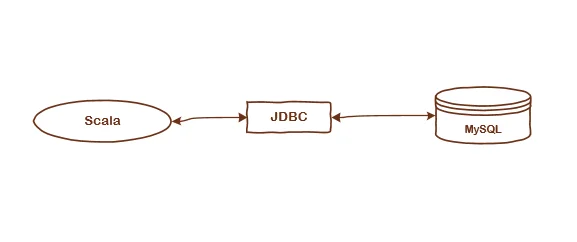

In article Connect to MySQL in Spark (PySpark), I showed how to connect to MySQL in PySpark. In this article, I will directly use JDBC driver to load data from MySQL database with Scala.

Download JDBC driver

Download JDBC Driver for MySQL from the following website:

Copy the driver into the folder where you are going to run the Scala scripts. For this demo, the driver path is ‘*F:\lib* mysql-connector-java-8.0.23\mysql-connector-java-8.0.23.jar’.

infoThe following example uses spark-shell to use JDBC driver of MySQL. You can directly invoke it in a Scala project.

Connect to MySQL

Follow these steps to setup Spark session and then read the data via JDBC.

- Added JDBC jars into Java classpath

The sample code runs in Spark Shell. JAR file is specified in spark-shell command. In a Scala script file, JAR files can be added directly when creating Spark session.

spark-shell --jars "F:\lib\mysql-connector-java-8.0.23\mysql-connector-java-8.0.23.jar" -c spark.ui.port=11111

Location 'F:\lib\mysql-connector-java-8.0.23' is where MySQL JDBC driver is stored in my system.

- Setup database connection variables

val database = "test_db"

val table = "test_table"

val user = "hive"

val password = "hive"

val connString = "jdbc:mysql://localhost:10101/"+database

warning Port number is specified as 10101 since it is not the default mysqld port number 3306.

- Load data via JDBC

val jdbcDF = (spark.read.format("jdbc")

.option("url", connString)

.option("dbtable", table)

.option("user", user)

.option("password", password)

.option("driver", "com.mysql.cj.jdbc.Driver")

.load())

The above code snippet reads data from table test_tablein database test_dbin a local MySQL instance.

infoDepends on the JDBC driver version, the driver class name can be different. For example, in earlier version, the class name is com.mysql.jdbc.Driver instead of com.mysql.cj.jdbc.Driver.

- Show the DataFrame

scala> jdbcDF.show()

The output looks like the following:

+---+--------+

| id| value|

+---+--------+

| 0|Record 0|

| 1|Record 1|

| 2|Record 2|

| 3|Record 3|

| 4|Record 4|

+---+--------+

Let me know if you encounter any issues if you have any questions.