Serial: An Introduction to SQL Server Features

Case Scenario

For this ETL project, the requirements are listed below:

- Sales data will be pushed to specified shared folder regularly.

- Data is stored in CSV files with columns: Sale Number, Product Name, Product Color, Sale Amount, Sale Area and date.

- Minions of sales records are stored in each file.

- The flat files need to be archived after processing.

- The process can be scheduled to run automatically and periodically.

Preparation

In order to create dummy sales files, we can use C# to create one console application to generate them randomly.

class Program

{

static int SaleNo = 10000000;

static void Main(string[] args)

{

var folder = @"E:\Temp\Archive";

var fileCount = 10;

var saleCountInEachFile = 100000;

for (int i = 0; i < fileCount; i++)

{

var fileName = Path.Combine(folder, string.Format("Sales_{0}.csv", i));

//if (!File.Exists(fileName))

//{

// File.CreateText(fileName);

//}

using (var writer = new StreamWriter(fileName, false, Encoding.UTF8))

{

writer.WriteLine(string.Format("{0},{1},{2},{3},{4},{5}", "SaleNo", "ProductName", "ProductColor", "Amount", "AreaName", "SaleDate"));

for (int j = 0; j < saleCountInEachFile; j++)

{

writer.WriteLine(string.Format("{0},{1},{2},{3},{4},{5}", GetSaleNo(), GetRandomProduct(), GetRandomColor(), GetRandomPrice(), GetRandomArea(), GetRandomDay()));

}

}

Console.WriteLine("{0} is generated.", fileName);

}

Console.ReadKey();

}

static int GetSaleNo()

{

return SaleNo++;

}

static string GetRandomColor()

{

var colors = new string[] { "R", "Y" };

Random gen = new Random();

return colors[gen.Next(1000) % colors.Length];

}

static string GetRandomArea()

{

var areas = new string[] { "Earth", "Mars", "Saturn" };

Random gen = new Random();

return areas[gen.Next(1000) % areas.Length];

}

static int GetRandomPrice()

{

var minPrice = 888;

var maxPrice = 5999;

return new Random().Next(minPrice, maxPrice);

}

static string GetRandomProduct()

{

var products = new string[] { "myPhone", "yourPhone", "ourPhone" };

Random gen = new Random();

return products[gen.Next(1000) % products.Length];

}

static DateTime GetRandomDay()

{

DateTime start = new DateTime(2011, 1, 1);

Random gen = new Random();

int range = (DateTime.Today - start).Days;

return start.AddDays(gen.Next(range));

}

}

Run the program and 10 CSV files will be created.

Now move these files into parent folder. In next step, we are going to create one SSIS package to load these files into table dbo.Sales and move them to Archive folder.

Create SSIS Package to Load Files

SSIS (SQL Server Integration Service) provides components/tasks to implement complex ETL projects. The following steps will illustrate how to use it build package rapidly.

Create SSIS Project ‘ETL-Sample’

Add package ‘Package-HiSqlServer-LoadSales.dtsx’

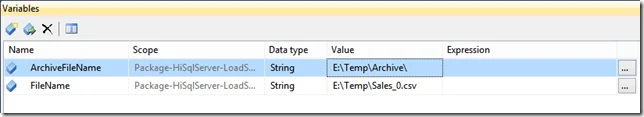

Add two variables

- Create one connection manager ‘RAYMOND-PC\MSSQL2012.HiSqlServer’ connecting to the database using SQL account.

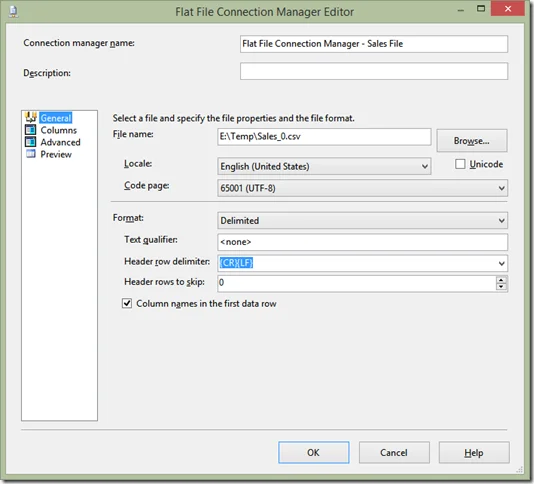

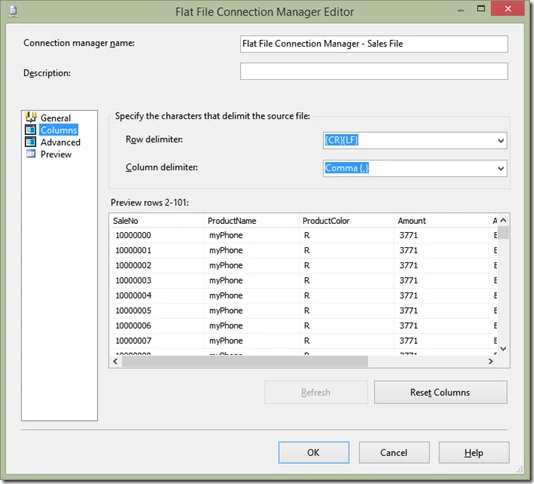

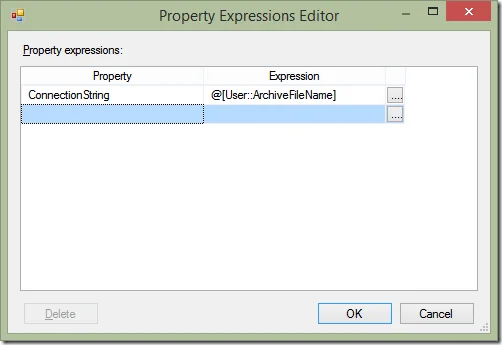

- Create another flat file connection manager ‘Flat File Connection Manager - Sales File’ connecting to one of the sales file we created. Use expression to bind the connection to variable FileName. Settings are as following:

- Create Flat File Connection Manager ‘Sales_0.csv’.

Set the file path using expression:

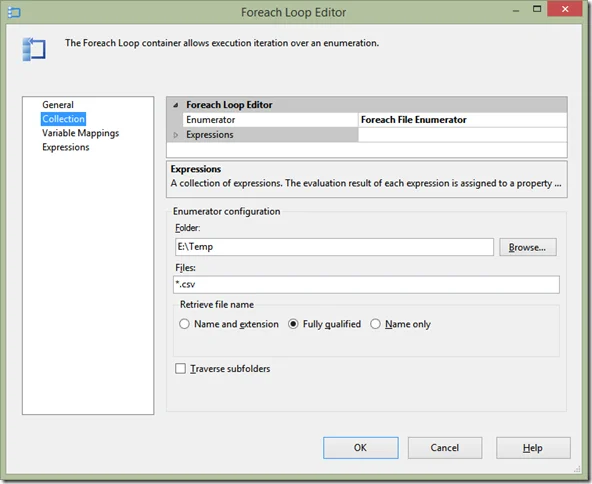

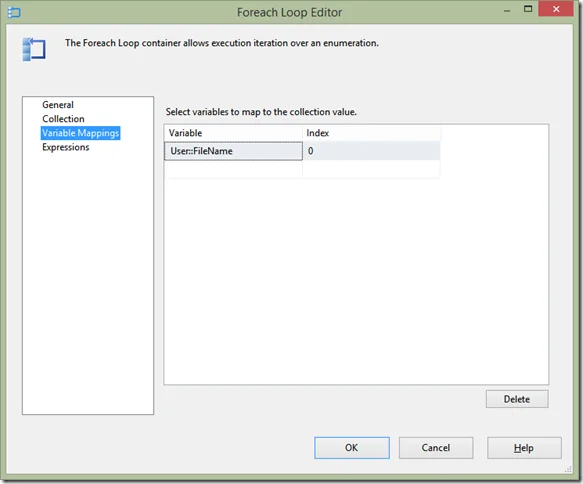

- Create Foreach Loop Container ‘Foreach Loop Container - Sales File’ to process each file one bye one. The settings are listed below:

(* Set the variable FileName with value from the file path of the current loop)

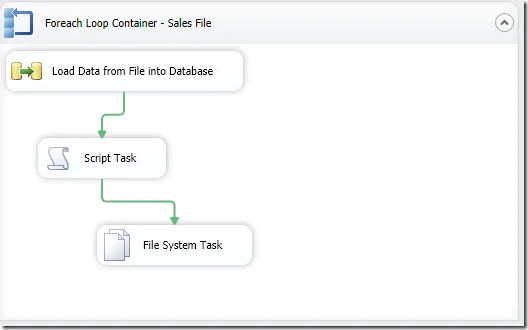

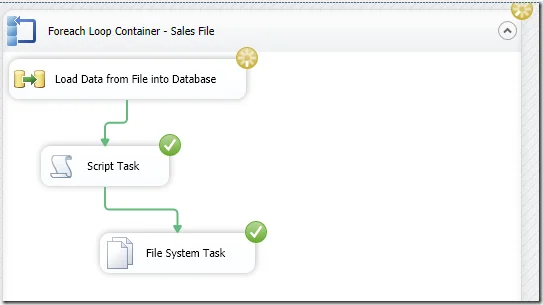

- Create tasks in the container to import data, set variable values and move file to archive folder once processed.

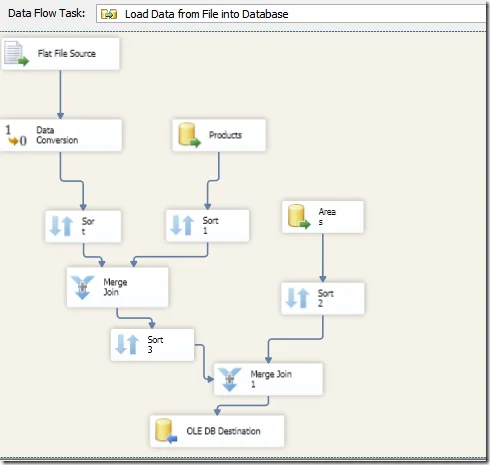

The data flow task is going to load data from the file source (Foreach Loop Container - Sales File) and then data is typed and transformed to join dbo.Areas and dbo.Products in database ‘HiSqlServer-Sample’ through connection ‘RAYMOND-PC\MSSQL2012.HiSqlServer’. Finally, data is loaded into the OLE DB destination (table dbo.Sales).

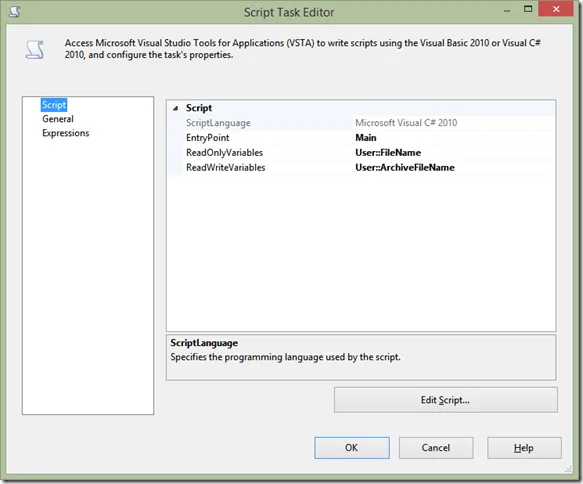

The script task changes the variable value for the following step.

The scripts are:

public void Main()

{

// TODO: Add your code here

var filepath = Dts.Variables["User::FileName"].Value.ToString();

var dir = Path.GetDirectoryName(filepath);

var newPath = Path.Combine(dir, "Archive");

Dts.Variables["User::ArchiveFileName"].Value = newPath;

Dts.TaskResult = (int)ScriptResults.Success;

}

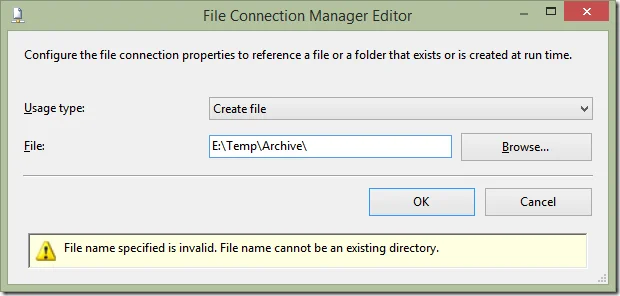

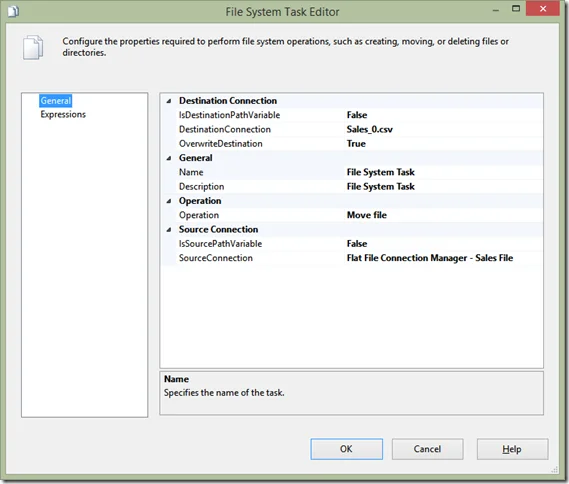

The final task is a File System Task, which moves the processed file in the loop to the archive folder. Use ‘Sales_0.csv’ as destination connection. The file path of this connection is already changed by the previous script task.

- Execute the package and all the data will be loaded into the database.

(* Files are being loaded one by one.)

Using the following query, you can find 1,000,000 records are loaded into the database.

SELECT COUNT(*) FROM dbo.Sales

Schedule

Use SQL Server Agent Job, you can set up schedules for running the above package. Please reference <http://technet.microsoft.com/en-us/library/ms139805(v=SQL.90).aspx>.