This page shows how to create Hive tables stored in the Parquet, ORC, or Avro file format using Hive SQL (HQL).

The following examples create managed tables. Similar syntax can be used to create external tables if Parquet, ORC, or Avro files already exist in HDFS.
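As a minimal sketch of the external-table variant, the statement below points a table at files that already exist in HDFS. The table name `customer_parquet_ext` and the path `/data/customer_parquet` are hypothetical placeholders, and the column list is assumed to match the schema of the files stored there.

```sql
-- Hypothetical external table over Parquet files that already exist in HDFS.
-- The LOCATION path is a placeholder; replace it with the real directory.
CREATE EXTERNAL TABLE IF NOT EXISTS hql.customer_parquet_ext(cust_id INT, name STRING, created_date DATE)
COMMENT 'An external table over existing customer records.'
STORED AS PARQUET
LOCATION '/data/customer_parquet';
```

By default, dropping an external table removes only its metadata and leaves the underlying files in place, which is usually what you want when the data is shared with other tools.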

Create table stored as Parquet

Example:

CREATE TABLE IF NOT EXISTS hql.customer_parquet(cust_id INT, name STRING, created_date DATE)

COMMENT 'A table to store customer records.'

STORED AS PARQUET;
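To check that the table works as expected, you could load a row and inspect the table metadata. The sketch below assumes the `hql.customer_parquet` table from the example above has been created; the sample values are made up for illustration.

```sql
-- Insert one made-up sample row (SELECT without FROM is valid in Hive).
INSERT INTO hql.customer_parquet
SELECT 1, 'Alice', CAST('2021-01-01' AS DATE);

-- Read the row back.
SELECT cust_id, name, created_date FROM hql.customer_parquet;

-- Show table metadata, including the storage format details.
DESCRIBE FORMATTED hql.customer_parquet;
```

The storage information section of the `DESCRIBE FORMATTED` output should list Parquet classes for the SerDe library, input format, and output format.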

Create table stored as ORC

Example:

CREATE TABLE IF NOT EXISTS hql.customer_orc(cust_id INT, name STRING, created_date DATE)

COMMENT 'A table to store customer records.'

STORED AS ORC;
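ORC tables also accept table properties that control how the files are written. The following is a sketch only: the table name `customer_orc_snappy` is hypothetical, and it assumes you want Snappy compression instead of the ORC default.

```sql
-- Hypothetical variant of the ORC table that sets the compression codec.
-- 'orc.compress' accepts values such as NONE, ZLIB, and SNAPPY.
CREATE TABLE IF NOT EXISTS hql.customer_orc_snappy(cust_id INT, name STRING, created_date DATE)
COMMENT 'A table to store customer records, compressed with Snappy.'
STORED AS ORC
TBLPROPERTIES ('orc.compress'='SNAPPY');
```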

Create table stored as Avro

Example:

CREATE TABLE IF NOT EXISTS hql.customer_avro(cust_id INT, name STRING, created_date DATE)

COMMENT 'A table to store customer records.'

STORED AS AVRO;
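Because the three example tables share the same columns, rows can be copied from one storage format to another with a plain `INSERT ... SELECT`. This sketch assumes all three tables above have been created and that `hql.customer_parquet` already holds some rows.

```sql
-- Copy rows from the Parquet table into the ORC table.
INSERT INTO hql.customer_orc
SELECT cust_id, name, created_date FROM hql.customer_parquet;

-- Copy the same rows into the Avro table.
INSERT INTO hql.customer_avro
SELECT cust_id, name, created_date FROM hql.customer_parquet;

-- Compare row counts to confirm the copy.
SELECT COUNT(*) FROM hql.customer_parquet;
SELECT COUNT(*) FROM hql.customer_orc;
SELECT COUNT(*) FROM hql.customer_avro;
```

Hive handles the conversion between file formats itself, so the queries do not need to mention Parquet, ORC, or Avro at all.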

Install Hive database

Follow the article below to install Hive on Windows 10 via WSL if you don't have a Hive database available to practice Hive SQL:

Examples on this page are based on Hive 3.* syntax.

Run query

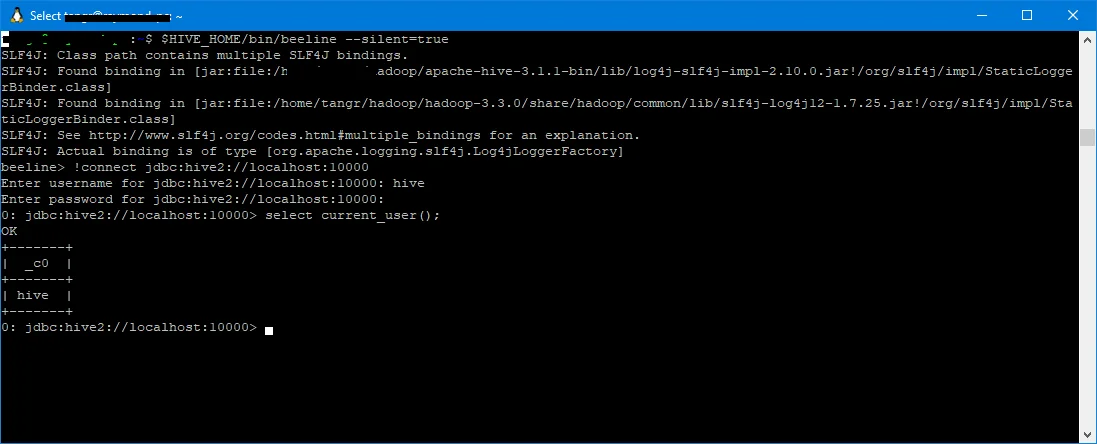

All of these SQL statements can be run using the Beeline CLI:

$HIVE_HOME/bin/beeline --silent=true

The command above starts Beeline against the default HiveServer2 service. Once Beeline is loaded, type the following command to connect:

0: jdbc:hive2://localhost:10000> !connect jdbc:hive2://localhost:10000

Enter username for jdbc:hive2://localhost:10000: hive

Enter password for jdbc:hive2://localhost:10000:

1: jdbc:hive2://localhost:10000>
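Once connected, statements are typed at the prompt and terminated with a semicolon. The examples on this page use a database named `hql`, which must exist before the `CREATE TABLE` statements will succeed. A minimal sketch, assuming the database has not been created yet:

```sql
-- Create the database used by the examples if it is missing.
CREATE DATABASE IF NOT EXISTS hql;

-- Confirm the database is listed.
SHOW DATABASES;

-- Switch to it and list its tables.
USE hql;
SHOW TABLES;
```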

The terminal looks like the following screenshot: