Kontext Data Lake enables secure ingestion, storage, and querying of structured and semi-structured data files within each project. It supports analytics and data exploration under project-level isolation and access control, so users can derive insights from their data without weakening security.

Overview

The Data Lake component supports:

- File Formats: CSV, TSV, Parquet, JSON/JSONL, and Excel (.xlsx, .xls), with support for more formats being added

- Storage: Cloud storage with automatic Parquet optimization

- Query Execution: Run SQL queries directly against your data files

- Access Control: Project-based roles and permissions, with data accessible only through private endpoints/links

Key Features

- Automatic Data Ingestion & Profiling: Uploaded files are automatically detected, ingested, and profiled using the serverless job framework. Users can query the data lake as soon as processing finishes, with no manual setup required.

- Cloud-Native Storage: Files are stored in cloud storage.

- SQL Query Engine: Query data directly from cloud storage, with the execution mode selected automatically.

- Project Isolation & Security: Each project has its own isolated DuckDB instance with:

  - Role-based access control (Reader, Contributor, Owner, Administrator)

  - Complete data isolation between projects

  - Audit logging for all operations

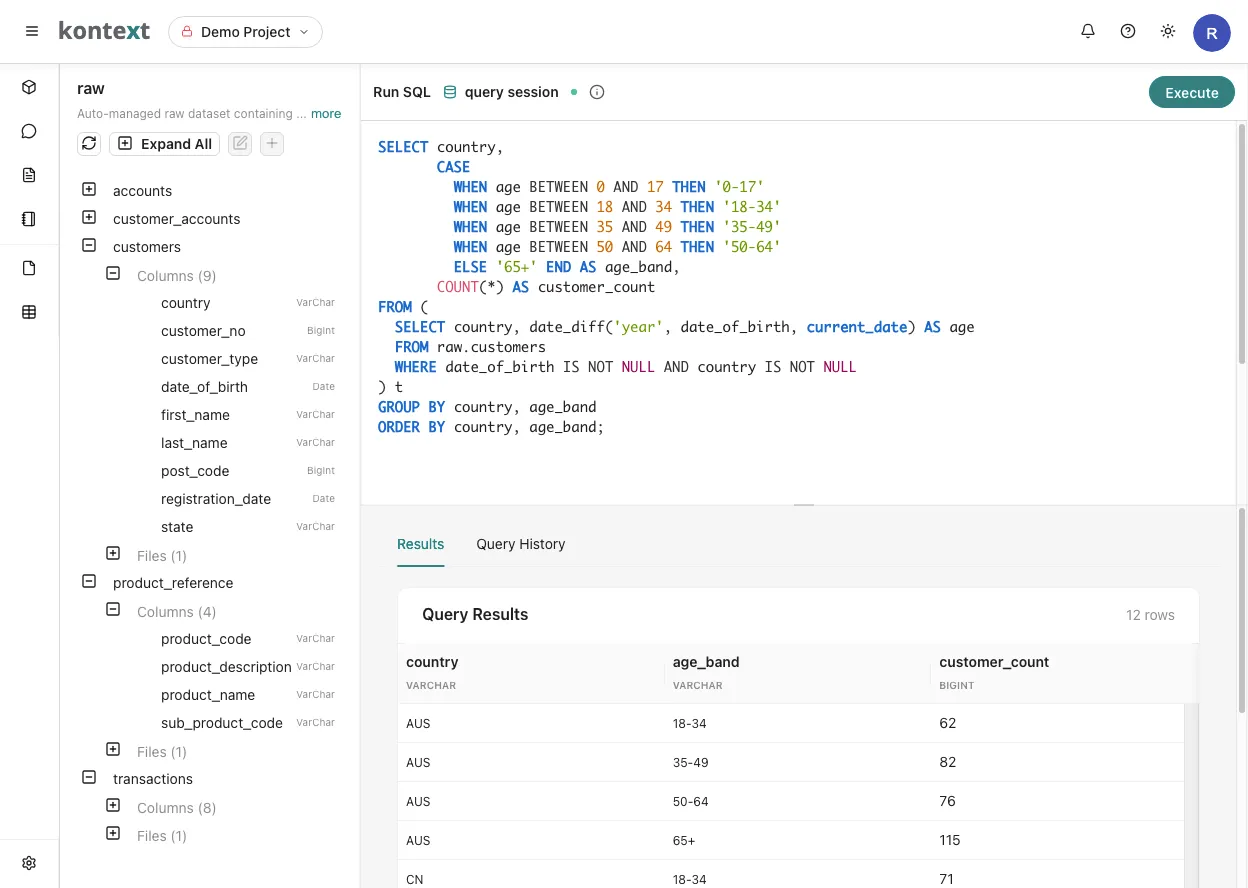

- Data Catalog & Query UI: Rich interface for data exploration:

  - Visual schema browser

  - SQL query editor with syntax highlighting

  - Result visualization and export

  - Query history tracking

- Zero-Config Data Processing:

  - Format-specific optimizations

  - External table registration

  - Statistics generation

How It Works

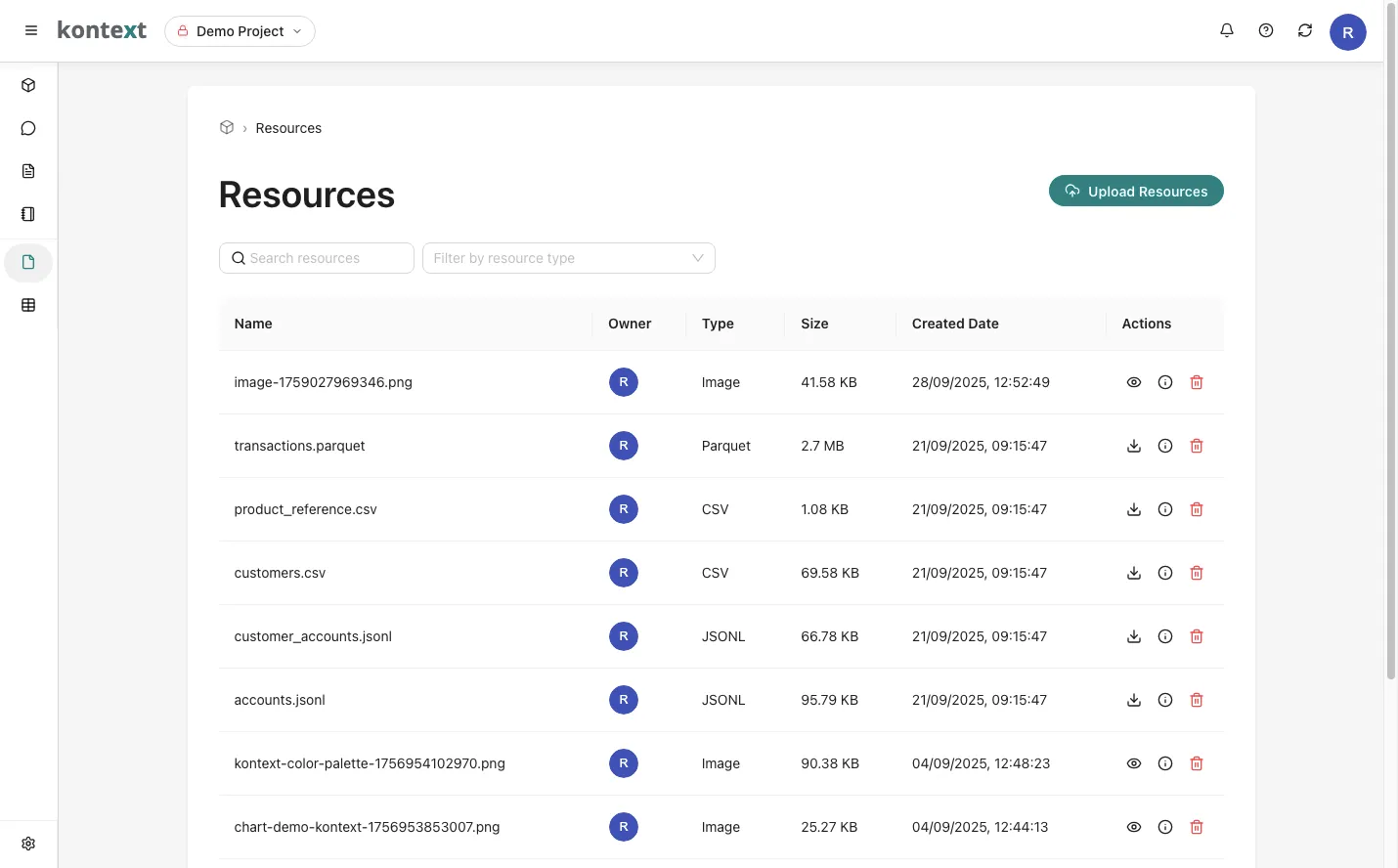

Upload Files

Add structured or semi-structured data files to your project through the web interface (Files) or the API.

Uploaded data files are stored alongside your other project files, such as images, within the same project.

Automatic Processing

Uploading a data file triggers Kontext jobs that automatically process it:

- File detection and format identification

- Schema analysis and profiling

- Parquet optimization (when beneficial)

- Table registration with metadata

The process creates a dataset named raw for each project.
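
To make the detection and profiling steps above more concrete, here is a minimal Python sketch using only the standard library. It is not Kontext's implementation: the function names (`detect_format`, `infer_type`, `profile_csv`), the type labels, and the profile layout are all hypothetical, and format detection is assumed to rely on the file extension alone.

```python
from __future__ import annotations

import csv
import io
from pathlib import Path

# Extension-to-format map based on the supported formats listed in the Overview.
FORMATS = {
    ".csv": "csv", ".tsv": "tsv", ".parquet": "parquet",
    ".json": "json", ".jsonl": "jsonl", ".xlsx": "excel", ".xls": "excel",
}


def detect_format(filename: str) -> str | None:
    """Return a format label from the file extension, or None if unsupported."""
    return FORMATS.get(Path(filename).suffix.lower())


def infer_type(values: list[str]) -> str:
    """Pick the narrowest type (hypothetical labels) that fits every non-empty value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "unknown"
    for label, cast in (("integer", int), ("double", float)):
        try:
            for v in non_empty:
                cast(v)
            return label
        except ValueError:
            continue
    return "varchar"


def profile_csv(text: str, delimiter: str = ",") -> dict[str, dict]:
    """Build a per-column profile: inferred type and count of empty values."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    rows = list(reader)
    profile = {}
    for col in reader.fieldnames or []:
        values = [row[col] or "" for row in rows]
        profile[col] = {
            "type": infer_type(values),
            "nulls": sum(1 for v in values if v == ""),
        }
    return profile


sample = "id,city,price\n1,Oslo,9.5\n2,,12\n3,Lima,7.25\n"
print(detect_format("sales.CSV"))
print(profile_csv(sample))
```

A real profiler would likely sample large files rather than read them in full and would collect richer statistics; the sketch only shows the shape of the idea.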

Query Your Data

Once profiling completes, you can analyze your data with SQL:

- Write SQL queries in the web interface

- Visualize results with built-in charts (coming soon)

- Export data in various formats (coming soon)

- Track query history
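
As an illustration of the kind of query you might run, the sketch below executes a simple aggregation with Python's built-in `sqlite3` module. SQLite is used here only as a stand-in so the example runs anywhere; it is not the engine Kontext uses. The table name `sales`, its columns, and the assumption that registered tables are addressed as `raw.<table>` are hypothetical.

```python
import sqlite3

# sqlite3 stands in for the project's query engine so the sketch is runnable.
con = sqlite3.connect(":memory:")

# Attach a second in-memory database under the name "raw" to mimic
# the raw dataset (assumed to behave like a schema).
con.execute("ATTACH DATABASE ':memory:' AS raw")
con.execute("CREATE TABLE raw.sales (region TEXT, amount REAL)")
con.executemany(
    "INSERT INTO raw.sales VALUES (?, ?)",
    [("north", 120.0), ("south", 80.5), ("north", 30.0)],
)

# A typical exploratory query: order count and revenue per region.
query = """
    SELECT region, COUNT(*) AS orders, SUM(amount) AS total
    FROM raw.sales
    GROUP BY region
    ORDER BY total DESC
"""
rows = con.execute(query).fetchall()
for region, orders, total in rows:
    print(region, orders, total)
```

The SQL itself is plain standard SQL, so the same statement should carry over to the web query editor once a table with matching columns is registered.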

Security & Governance

Kontext treats data security and governance as core concerns of the platform and supports them with the following features:

- Project-level access control

- Query auditing and monitoring

- Resource usage tracking
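
The interaction between project-level roles and audit logging can be sketched as follows. This is an illustrative model, not Kontext's code: the role names come from this page, but their ordering, the action names in `MIN_ROLE`, and the `Project` class are assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Role(IntEnum):
    # Role names are taken from the text; the ranking is an assumption.
    READER = 1
    CONTRIBUTOR = 2
    OWNER = 3
    ADMINISTRATOR = 4


# Hypothetical mapping from an action to the minimum role allowed to perform it.
MIN_ROLE = {"query": Role.READER, "upload": Role.CONTRIBUTOR, "delete": Role.OWNER}


@dataclass
class Project:
    name: str
    members: dict[str, Role] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, user: str, action: str) -> bool:
        """Check the user's role in this project and record the attempt."""
        role = self.members.get(user)  # None for users outside the project
        allowed = role is not None and role >= MIN_ROLE[action]
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "allowed": allowed,
        })
        return allowed


project = Project("demo", members={"ana": Role.READER, "raj": Role.OWNER})
print(project.authorize("ana", "query"))   # reader may query
print(project.authorize("ana", "upload"))  # reader may not upload
print(project.authorize("eve", "query"))   # non-member is denied
print(len(project.audit_log))              # every attempt is logged
```

Because membership is stored per project, a user with a role in one project has no access to another, which mirrors the isolation described above; denied attempts are logged as well as allowed ones.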